Posts tagged ‘ethics’

One more re-identification demonstration, and then I’m out

What should we do about re-identification? Back when I started this blog in grad school seven years ago, I subtitled it “The end of anonymous data and what to do about it,” anticipating that I’d work on re-identification demonstrations as well as technical and policy solutions. As it turns out, I’ve looked at the former much more often than the latter. That said, my recent paper A Precautionary Approach to Big Data Privacy with Joanna Huey and Ed Felten tackles the “what to do about it” question head-on. We present a comprehensive set of recommendations for policy makers and practitioners.

One more re-identification demonstration, and then I’m out. Overall, I’ve moved on in terms of my research interests to other topics like web privacy and cryptocurrencies. That said, there’s one fairly significant re-identification demonstration I hope to do some time this year. This is something I started in grad school, obtained encouraging preliminary results on, and then put on the back burner. Stay tuned.

Machine learning and re-identification. I’ve argued that the algorithms used in re-identification turn up everywhere in computer science. I’m still interested in these algorithms from this broader perspective. My recent collaboration on de-anonymizing programmers using coding style is a good example. It uses more sophisticated machine learning than most of my earlier work on re-identification, and the potential impact is more in forensics than in privacy.

Privacy and ethical issues in big data. There’s a new set of thorny challenges in big data — privacy-violating inferences, fairness of machine learning, and ethics in general. I’m collaborating with technology ethics scholar Solon Barocas on these topics. Here’s an abstract we wrote recently, just to give you a flavor of what we’re doing:

How to do machine learning ethically

Every now and then, a story about inference goes viral. You may remember the one about Target advertising to customers who were determined to be pregnant based on their shopping patterns. The public reacts by showing deep discomfort about the power of inference and says it’s a violation of privacy. On the other hand, the company in question protests that there was no wrongdoing — after all, they had only collected innocuous information on customers’ purchases and hadn’t revealed that data to anyone else.

This common pattern reveals a deep disconnect between what people seem to care about when they cry privacy foul and the way the protection of privacy is currently operationalized. The idea that companies shouldn’t make inferences based on data they’ve legally and ethically collected might be disturbing and confusing to a data scientist.

And yet, we argue that doing machine learning ethically means accepting and adhering to boundaries on what’s OK to infer or predict about people, as well as how learning algorithms should be designed. We outline several categories of inference that run afoul of privacy norms. Finally, we explain why ethical considerations sometimes need to be built in at the algorithmic level, rather than being left to whoever is deploying the system. While we identify a number of technical challenges that we don’t quite know how to solve yet, we also provide some guidance that will help practitioners avoid these hazards.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

Reidentification as Basic Science

This essay originally appeared on the Bill of Health blog as part of a conversation on the law, ethics and science of reidentification demonstrations.

What really drives reidentification researchers? Do we publish these demonstrations to alert individuals to privacy risks? To shame companies? For personal glory? If our goal is to improve privacy, are we doing it in the best way possible?

In this post I’d like to discuss my own motivations as a reidentification researcher, without speaking for anyone else. Certainly I care about improving privacy outcomes, in the sense of making sure that companies, governments and others don’t get away with mathematically unsound promises about the privacy of consumers’ data. But there is a quite different goal I care about at least as much: reidentification algorithms. These algorithms are my primary object of study, and so I see reidentification research partly as basic science.

Let me elaborate on why reidentification algorithms are interesting and important. First, they yield fundamental insights about people — our interests, preferences, behavior, and connections — as reflected in the datasets collected about us. Second, as is the case with most basic science, these algorithms turn out to have a variety of applications other than reidentification, both for good and bad. Let us consider some of these.

First and foremost, reidentification algorithms are directly applicable in digital forensics and intelligence. Analyzing the structure of a terrorist network (say, based on surveillance of movement patterns and meetings) to assign identities to nodes is technically very similar to social network deanonymization. A reidentification researcher I know, a U.S. citizen, tells me he has been contacted more than once by intelligence agencies to apply his expertise to their data.

Homer et al.’s work on identifying individuals in DNA mixtures is another great example of how forensics algorithms are inextricably linked to privacy-infringing applications. In addition to DNA and network structure, writing style and location trails are other attributes that have been used in both reidentification and forensics.

It is not a coincidence that the reidentification literature often uses the word “fingerprint” — this body of work has generalized the notion of a fingerprint beyond physical attributes to a variety of other characteristics. Just like physical fingerprints, there are good uses and bad, but regardless, finding generalized fingerprints is a contribution to human knowledge. A fundamental question is how much information (i.e., uniqueness) there is in each of these types of attributes or characteristics. Reidentification research is gradually helping answer this question, but much remains unknown.
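One rough way to make “how much information” concrete is to count how many records become unique under a given set of attributes, and to compute the Shannon entropy of the attribute combinations in bits. The sketch below does both on a toy table. The records, field names, and the function `attribute_stats` are all made up for illustration and are not drawn from any real dataset or study.

```python
import math
from collections import Counter

def attribute_stats(records, fields):
    """Return (fraction of records that are unique, entropy in bits) for `fields`.

    Assumes `records` is a non-empty list of dicts that all contain `fields`.
    """
    keys = [tuple(r[f] for f in fields) for r in records]
    counts = Counter(keys)
    n = len(keys)
    # A record is "unique" if nobody else shares its attribute combination.
    unique_fraction = sum(1 for k in keys if counts[k] == 1) / n
    # Shannon entropy of the empirical distribution over combinations.
    entropy_bits = -sum((c / n) * math.log2(c / n) for c in counts.values())
    return unique_fraction, entropy_bits

# Hypothetical toy records; not real data.
records = [
    {"zip": "78701", "birth_year": 1980, "sex": "F"},
    {"zip": "78701", "birth_year": 1980, "sex": "M"},
    {"zip": "78701", "birth_year": 1975, "sex": "F"},
    {"zip": "78702", "birth_year": 1980, "sex": "F"},
]

for fields in (["zip"], ["zip", "birth_year"], ["zip", "birth_year", "sex"]):
    print(fields, attribute_stats(records, fields))
```

Adding attributes can only keep or raise both numbers. Once every record is unique, the combination acts as a fingerprint for this particular table.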

It is not only people that are fingerprintable; so are various physical devices. A wonderful set of (unrelated) research papers has shown that many types of devices, objects, and software systems, even supposedly identical ones, have unique fingerprints: blank paper, digital cameras, RFID tags, scanners and printers, and web browsers, among others. The techniques are similar to reidentification algorithms, and once again straddle security-enhancing and privacy-infringing applications.

Even more generally, reidentification algorithms are classification algorithms for the case when the number of classes is very large. Classification algorithms categorize observed data into one of several classes, i.e., categories. They are at the core of machine learning, but typical machine-learning applications rarely need to consider more than several hundred classes. Thus, reidentification science is helping develop our knowledge of how best to extend classification algorithms as the number of classes increases.
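To illustrate the “classification with very many classes” view, here is a minimal nearest-neighbor sketch in which every known individual is its own class. It is a generic illustration, not the algorithm from any particular paper. The names `cosine`, `best_match`, and `min_gap`, and the toy feature vectors, are assumptions introduced here.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def best_match(anonymous, known, min_gap=0.1):
    """Return the label of the most similar known vector.

    Returns None when the best score is not at least `min_gap` ahead of the
    runner-up; with many classes, declining to answer matters as much as
    answering.
    """
    scored = sorted(
        ((cosine(anonymous, vec), label) for label, vec in known.items()),
        reverse=True,
    )
    if not scored:
        return None
    if len(scored) > 1 and scored[0][0] - scored[1][0] < min_gap:
        return None
    return scored[0][1]

# Hypothetical feature vectors (e.g. item -> rating); one class per person.
known = {
    "alice": {"a": 5, "b": 1},
    "bob": {"c": 4, "d": 2},
    "carol": {"a": 1, "d": 5},
}
print(best_match({"a": 4, "b": 1}, known))
```

This brute-force scan costs one comparison per class, which is exactly what stops scaling as the number of classes grows.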

Moving on, research on reidentification and other types of “leakage” of information reveals a problem with the way data-mining contests are run. Most commonly, some elements of a dataset are withheld, and contest participants are required to predict these unknown values. Reidentification allows contestants to bypass the prediction process altogether by simply “looking up” the true values in the original data! For an example and more elaborate explanation, see this post on how my collaborators and I won the Kaggle social network challenge. Demonstrations of information leakage have spurred research on how to design contests without such flaws.
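The “looking up” shortcut can be sketched for a hypothetical link-prediction contest. Suppose contestants must say whether an edge exists between pairs of anonymized nodes, and suppose reidentification has already recovered a partial mapping from anonymized IDs to IDs in the original public graph. Everything here (the function name, the data, the fallback model) is an assumption for illustration and does not describe any specific contest entry.

```python
def predict_with_lookup(test_pairs, node_mapping, original_edges, fallback):
    """Answer each test pair by lookup when possible, else by a real model.

    node_mapping   -- anonymized node ID -> original node ID (partial)
    original_edges -- set of directed (source, target) pairs in the original graph
    fallback       -- function (u, v) -> score, used when lookup is impossible
    """
    predictions = []
    for u, v in test_pairs:
        if u in node_mapping and v in node_mapping:
            # Both endpoints were reidentified: read off the true answer.
            edge = (node_mapping[u], node_mapping[v])
            predictions.append(1.0 if edge in original_edges else 0.0)
        else:
            # Otherwise fall back to genuine prediction.
            predictions.append(fallback(u, v))
    return predictions

# Hypothetical data.
original_edges = {("A", "B"), ("B", "C")}
node_mapping = {1: "A", 2: "B", 3: "C"}
test_pairs = [(1, 2), (1, 3), (2, 9)]

print(predict_with_lookup(test_pairs, node_mapping, original_edges,
                          fallback=lambda u, v: 0.5))
```

The more nodes that can be reidentified, the less of the contest measures predictive skill at all.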

If reidentification can cause leakage and make things messy, it can also clean things up. In a general form, reidentification is about connecting common entities across two different databases. Quite often in real-world datasets there is no unique identifier, or it is missing or erroneous. Just about every programmer who does interesting things with data has dealt with this problem at some point. In the research world, William Winkler of the U.S. Census Bureau has authored a survey of “record linkage”, covering well over a hundred papers. I’m not saying that the high-powered machinery of reidentification is necessary here, but the principles are certainly useful.

In my brief life as an entrepreneur, I utilized just such an algorithm for the back-end of the web application that my co-founders and I built. The task in question was to link a (musical) artist profile from last.fm to the corresponding Wikipedia article based on discography information (linking by name alone fails in any number of interesting ways). On another occasion, for the theory of computing blog aggregator that I run, I wrote code to link authors of papers uploaded to arXiv to their DBLP profiles based on the list of coauthors.
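Linking records by a set-valued attribute, such as a discography or a list of coauthors, can be sketched with Jaccard similarity. This is a minimal illustration under assumed data, not the code used in either project. The function names, the threshold, and the example profiles are all hypothetical.

```python
def normalize(title):
    """Lowercase and collapse whitespace so trivial variations still match."""
    return " ".join(title.lower().split())

def jaccard(a, b):
    """Size of the intersection divided by size of the union of two sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def link_records(left, right, threshold=0.5):
    """Map each left ID to the right ID with the highest set overlap.

    Left records whose best score falls below `threshold` are left unlinked.
    """
    links = {}
    for lid, litems in left.items():
        lset = {normalize(t) for t in litems}
        best_id, best_score = None, 0.0
        for rid, ritems in right.items():
            score = jaccard(lset, {normalize(t) for t in ritems})
            if score > best_score:
                best_id, best_score = rid, score
        if best_id is not None and best_score >= threshold:
            links[lid] = best_id
    return links

# Two hypothetical artists who share a name but not a discography.
profiles = {
    "profile:echo-1": ["Blue Album", "Red  Album"],
    "profile:echo-2": ["Night Songs"],
}
articles = {
    "Echo (US band)": ["blue album", "red album", "Green Album"],
    "Echo (UK band)": ["Night Songs", "Day Songs"],
}
print(link_records(profiles, articles))
```

Matching on the name “Echo” alone could not separate the two artists; the overlap in album titles does.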

There is more, but I’ll stop here. The point is that these algorithms are everywhere.

If the algorithms are the key, why perform demonstrations of privacy failures? To put it simply, algorithms can’t be studied in a vacuum; we need concrete cases to test how well they work. But it’s more complicated than that. First, as I mentioned earlier, keeping the privacy conversation intellectually honest is one of my motivations, and these demonstrations help. Second, in the majority of cases, my collaborators and I have chosen to examine pairs of datasets that were already public, and so our work did not uncover the identities of previously anonymous subjects, but merely helped to establish that this could happen in other instances of “anonymized” data sharing.

Third, and I consider this quite unfortunate, reidentification results are taken much more seriously if researchers do uncover identities, which naturally gives us an incentive to do so. I’ve seen this in my own work — the Netflix paper is the most straightforward and arguably the least scientifically interesting reidentification result that I’ve co-authored, and yet it received by far the most attention, all because it was carried out on an actual dataset published by a company rather than demonstrated hypothetically.

My primary focus on the fundamental research aspect of reidentification guides my work in an important way. There are many, many potential targets for reidentification — despite all the research, data holders often (rationally) act like nothing has changed and continue to make data releases with “PII” removed. So which dataset should I pick to work on?

Focusing on the algorithms makes it a lot easier. One of my criteria for picking a reidentification question to work on is that it must lead to a new algorithm. I’m not at all saying that all reidentification researchers should do this, but for me it’s a good way to maximize the impact I can hope for from my research, while minimizing controversies about the privacy of the subjects in the datasets I study.

I hope this post has given you some insight into my goals, motivations, and research outputs, and an appreciation of the fact that there is more to reidentification algorithms than their application to breaching privacy. It will be useful to keep this fact in the back of our minds as we continue the conversation on the ethics of reidentification.

Thanks to Vitaly Shmatikov for reviewing a draft.

In Silicon Valley, Great Power but No Responsibility

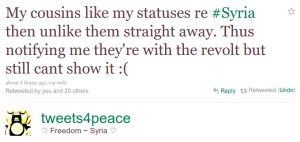

I saw a tweet today that gave me a lot to think about:

A rather intricate example of social adaptation to technology. If I understand correctly, the cousins in question are taking advantage of the fact that liking someone’s status/post on Facebook generates a notification for the poster that remains even if the post is immediately unliked. [1]

What’s humbling is that such minor features have the power to affect so many, and so profoundly. What’s scary is that the feature is so fickle. If Facebook starts making updates available through a real-time API, like Google Buzz does, then the ‘like’ will stick around forever on some external site and users will be none the wiser until something goes wrong. Similar things have happened: a woman was fired because sensitive information she put on Twitter and then deleted was cached by an external site. I’ve written about the privacy dangers of making public data “more public”, including the problems of real-time APIs. [2]

As complex and fascinating as the technical issues are, the moral challenges interest me more. We’re at a unique time in history in terms of technologists having so much direct power. There’s just something about the picture of an engineer in Silicon Valley pushing a feature live at the end of a week, and then heading out for some beer, while people halfway around the world wake up and start using the feature and trusting their lives to it. It gives you pause.

This isn’t just about privacy or just about people in oppressed countries. RescueTime estimates that 5.3 million hours were spent worldwide on Google’s Les Paul doodle feature. Was that a net social good? Who is making the call? Google has an insanely rigorous A/B testing process to optimize between 41 shades of blue, but do they have any kind of process in place to decide whether to release a feature that 5.3 million hours—eight lifetimes—are spent on?

For the first time in history, the impact of technology is being felt worldwide and at Internet speed. The magic of automation and ‘scale’ dramatically magnifies effort and thus bestows great power upon developers, but it also comes with the burden of social responsibility. Technologists have always been able to rely on someone else to make the moral decisions. But not anymore—there is no ‘chain of command,’ and the law is far too slow to have anything to say most of the time. Inevitably, engineers have to learn to incorporate social costs and benefits into the decision-making process.

Many people have been raising awareness of this—danah boyd often talks about how tech products make a mess of many things: privacy for one, but social nuances in general. And recently at TEDxSiliconValley, Damon Horowitz argued that technologists need a moral code.

But here’s the thing—and this is probably going to infuriate some of you—I fear that these appeals are falling on deaf ears. Hackers build things because it’s fun; we see ourselves as twiddling bits on our computers, and generally don’t even contemplate, let alone internalize, the far-away consequences of our actions. Privacy is viewed in oversimplified access-control terms and there isn’t even a vocabulary for a lot of the nuances that users expect.

The ignorant are at least teachable, but I often hear a willful disdain for moral issues. Anything that’s technically feasible is seen as fair game and those who raise objections are seen as incompetent outsiders trying to rain on the parade of techno-utopia. The pronouncements of executives like Schmidt and Zuckerberg, not to mention the writings of people like Arrington and Scoble who in many ways define the Valley culture, reflect tone-deaf thinking and a we-make-the-rules-get-over-it attitude.

Something’s gotta give.

[1] It’s possible that the poster is talking about Twitter, and by ‘like’ they mean ‘favorite’. This makes no difference to the rest of my arguments; if anything it’s stronger because Twitter already has a Firehose.

[2] Potential bugs are another reason that this feature is fickle. As techies might recognize, ensuring that a like doesn’t show up after an item is unliked maps to the problem of update propagation in a distributed database, which is hard: the CAP theorem shows that a system cannot guarantee both consistency and availability when the network partitions. Indeed, Facebook often has glitches of exactly this sort: you might notice one because a comment notification shows up and the comment doesn’t, or vice versa, or different people see different like counts, etc.

[ETA] I see this essay as somewhat complementary to my last one on how information technology enables us to be more private contrasted with the ways in which it also enables us to publicize our lives. There I talked about the role of consumers of technology in determining its direction; this article is about the role of the creators.

[Edit 2] Changed the British spelling ‘wilful’ to American.

Thanks to Jonathan Mayer for comments on a draft.

Is Making Public Data “More Public” a Privacy Violation?

What on earth does more public mean? Technologists draw a simple distinction between data that is public and data that is not. Under this view, the notion of making data more public is meaningless. But common sense tells us otherwise: it’s hard to explain the opposition to public surveillance if you assume that it’s OK to collect, store and use “public” information indiscriminately.

There are entire philosophical theories devoted to understanding what one can and cannot do with public data in different contexts. Recently, danah boyd argued in her SXSW keynote in support of “privacy through obscurity” and how technology is destroying this comfort. According to boyd, most public data is “quasi-public” and technologists don’t have the right to “publicize” it.

Some examples. One can debate the point in the abstract, but there is no question that companies and individuals have repeatedly been bitten when applying the “it’s already public” rule. Let’s look at some examples (the list and the discussion are largely concerned with data on the web).

- The availability of the California Birth Index on the web caused considerable consternation about a decade ago, despite the fact that birth records in the state are public and anyone’s birth record can be obtained through official channels, albeit in a cumbersome manner.

- IRSeek planned to launch a search engine for IRC in 2007 by monitoring and indexing public channels (chatrooms). There was a predictable privacy outcry and they were forced to shut down.

- The Infochimps guys crawled the Twitter graph back in 2008 and posted it on their site. Twitter forced them to take the dataset down.

- The story was repeated with Pete Warden and Facebook; this time it was nastier and involved the threat of a lawsuit.

- MySpace recently started selling user data in bulk on Infochimps. As MySpace has pointed out, the data is already public, but privacy concerns have nevertheless been raised.

- One reason for the backlash against Google Buzz was auto-connect: it connected your activity on Google Reader and other services and streamed it to your friends. Your Google Reader activities were already public, but Buzz took it further by broadcasting it.

- Spokeo is facing similar criticism. As Snopes explains, “Spokeo displays listings that sometimes contain more personal information than many people are comfortable having made publicly accessible through a single, easy-to-use search site.”

The final four examples are all from the last couple of months. For some reason the issue has suddenly started cropping up all the time. The current situation is bad for everyone: data trustees and data analysts have no clear guidelines in place, and users/consumers are in a position of constantly having to fight back against a loss of privacy. We need to figure out some ground rules to decide what uses of public data on the web are acceptable.

Why not “none?” I don’t agree with a blanket argument against using data for purposes other than originally intended, for many reasons. The first is that users’ privacy expectations, when they go beyond the public/private dichotomy, are generally poorly articulated, frequently unreasonable and occasionally self-contradictory. (An unfortunate but inevitable consequence of the complexity of technology.) The second reason is that these complex privacy rules, even if they can be figured out, often need to be communicated to the machine.

The third reason is the “greater good.” I’ve opposed that line of reasoning when used to justify reneging on an explicit privacy promise. But when it comes to a promise that was never actually made but merely intuitively understood (or mis-understood) by users, I think the question is different, and my stance is softer. Privacy needs to be weighed against the benefit to society from “publicizing” data — disseminating, aggregating and analyzing it.

In the next article of this series, I will give a rigorous technical characterization of what constitutes publicizing data. My hope is that this will go a long way towards determining what is and is not a violation of privacy. In the meanwhile, I look forward to hearing different opinions.

Thanks to Pete Warden and Vimal Jeyakumar for comments on a draft.

Is Anonymity Research Ethical?

A researcher who is working on writing style analysis (“stylometry”), after reading my post on related de-anonymization techniques, wonders what the positive impact of such research could be, given my statement that the malicious uses of the technology are far greater than the beneficial ones. He says:

Sometimes when I’m thinking of an interesting research topic it’s hard to forget the Patton Oswalt line “Hey, we made cancer airborne and contagious! You’re welcome! We’re science: we’re all about coulda, not shoulda.”

This was my answer:

To me, generic research on algorithms always has a positive impact (if you’re breaking a specific website or system, that’s a different story; a bioweapon is a whole different category.) I do not recognize a moral question here, and therefore it does not affect what I choose to work on.

My belief that the research will have a positive impact is not at odds with my belief that the uses of the technology are predominantly evil. In fact, the two are positively correlated. If we’re talking about web search technology, if academics don’t invent it, then (benevolent) companies will. But if we’re talking about de-anonymization technology, if we don’t do it, then malevolent entities will invent it (if they haven’t already), and of course, keep it to themselves. It comes down to a choice between a world where everyone has access to de-anonymization techniques, and hopefully defenses against it, versus one in which only the bad guys do. I think it’s pretty clear which world most people will choose to live in.

I realize I lean toward the “coulda” side of the question of whether Science is—or should be—amoral. Someone like Prof. Benjamin Kuipers here at UT seems to be close to the other end of the spectrum: he won’t take any DARPA money.

Part of the problem with allowing morality to affect the direction of science is that it is often arbitrary. The Patton Oswalt quote above is a perfect example: he apparently said that in response to news of science enabling a 63-year-old woman to give birth. The notion that something is wrong simply because it is not “natural” is one that I find most repugnant. If the freedom of a 63-year-old woman to give birth is not an important issue to you, let me note that more serious issues, such as stem cell research that could save lives, fall under the same category.

Going back to anonymity, it is interesting that tools like Tor face much criticism, but for enabling the anonymity of “bad” people rather than breaking the anonymity of “good” people. Who is to be the arbiter of the line between good and bad? I share the opinion of most techies that Tor is a wonderful thing for the world to have.

There are many sides to this issue and many possible views. I’d love to hear your thoughts.