In Silicon Valley, Great Power but No Responsibility

June 11, 2011 at 7:33 am 13 comments

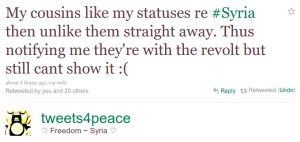

I saw a tweet today that gave me a lot to think about:


A rather intricate example of social adaptation to technology. If I understand correctly, the cousins in question are taking advantage of the fact that liking someone’s status/post on Facebook generates a notification for the poster that remains even if the post is immediately unliked. [1]

What’s humbling is that such minor features have the power to affect so many, and so profoundly. What’s scary is that the feature is so fickle. If Facebook starts making updates available through a real-time API, like Google Buzz does, then the ‘like’ will stick around forever on some external site and users will be none the wiser until something goes wrong. Similar things have happened: a woman was fired because sensitive information she put on Twitter and then deleted was cached by an external site. I’ve written about the privacy dangers of making public data “more public”, including the problems of real-time APIs. [2]
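To make the mechanism concrete, here is a toy model of why an "unlike" cannot recall what a "like" already sent out. It is a minimal sketch under stated assumptions, not Facebook's or Google Buzz's actual design. Everything in it is hypothetical: the `Post` and `Network` classes, the user names, and the `external_cache` list that stands in for an outside site reading a real-time feed. The only assumption it encodes is that a like emits an event to the poster's notifications and to any feed subscribers at like-time, while an unlike only changes the post's own state.

```python
from dataclasses import dataclass, field


@dataclass
class Post:
    """Hypothetical post whose 'likes' are mutable state."""
    author: str
    likes: set = field(default_factory=set)


@dataclass
class Network:
    # Hypothetical notification inboxes: append-only lists of messages per user.
    notifications: dict = field(default_factory=dict)
    # Hypothetical real-time API subscribers (external sites) that receive events.
    subscribers: list = field(default_factory=list)

    def like(self, post: Post, user: str) -> None:
        post.likes.add(user)
        event = f"{user} liked {post.author}'s post"
        # Side effects fire at like-time and are never recalled.
        self.notifications.setdefault(post.author, []).append(event)
        for sub in self.subscribers:
            sub.append(event)

    def unlike(self, post: Post, user: str) -> None:
        # Only the post's own state changes; nothing already sent is retracted.
        post.likes.discard(user)


external_cache: list = []  # stands in for an external site archiving the feed
net = Network(subscribers=[external_cache])
status = Post(author="cousin_a")
net.like(status, "cousin_b")
net.unlike(status, "cousin_b")

print(status.likes)                   # prints: set()
print(net.notifications["cousin_a"])  # prints: ["cousin_b liked cousin_a's post"]
print(external_cache)                 # same event, now outside the network's control
```

The design point is that the notification and the feed event are copies made at the moment of the like, so deleting the like afterwards changes nothing about them.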

As complex and fascinating as the technical issues are, the moral challenges interest me more. We’re at a unique time in history in terms of technologists having so much direct power. There’s just something about the picture of an engineer in Silicon Valley pushing a feature live at the end of a week, and then heading out for some beer, while people halfway around the world wake up and start using the feature and trusting their lives to it. It gives you pause.

This isn’t just about privacy or just about people in oppressed countries. RescueTime estimates that 5.3 million hours were spent worldwide on Google’s Les Paul doodle feature. Was that a net social good? Who is making the call? Google has an insanely rigorous A/B testing process for choosing among 41 shades of blue, but does it have any kind of process for deciding whether to release a feature on which people will spend 5.3 million hours, roughly eight lifetimes?
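The "eight lifetimes" figure is a back-of-the-envelope conversion. Assuming a lifetime of roughly 75 years (an assumption for illustration, not a figure from RescueTime), it works out as:

$$
\frac{5.3 \times 10^{6}\ \text{h}}{24\ \text{h/day} \times 365\ \text{days/yr}} \approx 605\ \text{years} \approx 8 \times 75\ \text{years}
$$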

For the first time in history, the impact of technology is being felt worldwide and at Internet speed. The magic of automation and ‘scale’ dramatically magnifies effort and thus bestows great power upon developers, but it also comes with the burden of social responsibility. Technologists have always been able to rely on someone else to make the moral decisions. But not anymore—there is no ‘chain of command,’ and the law is far too slow to have anything to say most of the time. Inevitably, engineers have to learn to incorporate social costs and benefits into the decision-making process.

Many people have been raising awareness of this—danah boyd often talks about how tech products make a mess of many things: privacy for one, but social nuances in general. And recently at TEDxSiliconValley, Damon Horowitz argued that technologists need a moral code.

But here’s the thing—and this is probably going to infuriate some of you—I fear that these appeals are falling on deaf ears. Hackers build things because it’s fun; we see ourselves as twiddling bits on our computers, and generally don’t even contemplate, let alone internalize, the far-away consequences of our actions. Privacy is viewed in oversimplified access-control terms and there isn’t even a vocabulary for a lot of the nuances that users expect.

The ignorant are at least teachable, but I often hear a willful disdain for moral issues. Anything that’s technically feasible is seen as fair game and those who raise objections are seen as incompetent outsiders trying to rain on the parade of techno-utopia. The pronouncements of executives like Schmidt and Zuckerberg, not to mention the writings of people like Arrington and Scoble who in many ways define the Valley culture, reflect tone-deaf thinking and a we-make-the-rules-get-over-it attitude.

Something’s gotta give.

[1] It’s possible that the poster is talking about Twitter, and by ‘like’ they mean ‘favorite’. This makes no difference to the rest of my arguments; if anything it’s stronger because Twitter already has a Firehose.

[2] Potential bugs are another reason that this feature is fickle. As techies might recognize, ensuring that a like doesn’t show up after an item is unliked maps to the problem of update propagation in a distributed database, a problem the CAP theorem shows to be fundamentally hard: when the network partitions, a distributed system cannot remain both consistent and available. Indeed, Facebook often has glitches of exactly this sort: you might notice it because a comment notification shows up and the comment doesn’t, or vice versa, or different people see different like counts, etc.
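Here is a minimal sketch of that footnote's point, assuming simple asynchronous primary-to-follower replication. The model and its names (`Replica`, `AsyncReplicatedPost`, `replicate`) are hypothetical and say nothing about how Facebook actually stores likes. A like followed quickly by an unlike can leave a lagging replica still showing the like, so two readers see different like counts until propagation finishes.

```python
from collections import deque


class Replica:
    """Hypothetical replica holding one post's set of likers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.likes: set[str] = set()

    def apply(self, op: str, user: str) -> None:
        if op == "like":
            self.likes.add(user)
        elif op == "unlike":
            self.likes.discard(user)


class AsyncReplicatedPost:
    """Writes hit the primary at once; the follower catches up only when
    replicate() is called, modelling asynchronous propagation."""

    def __init__(self) -> None:
        self.primary = Replica("primary")
        self.follower = Replica("follower")
        self.pending: deque[tuple[str, str]] = deque()

    def write(self, op: str, user: str) -> None:
        self.primary.apply(op, user)
        self.pending.append((op, user))  # shipped to the follower later

    def replicate(self, max_ops: int) -> None:
        # Deliver up to max_ops queued operations, in order.
        for _ in range(min(max_ops, len(self.pending))):
            op, user = self.pending.popleft()
            self.follower.apply(op, user)


post = AsyncReplicatedPost()
post.write("like", "alice")
post.write("unlike", "alice")
post.replicate(max_ops=1)  # only the 'like' has reached the follower so far
print(len(post.primary.likes), len(post.follower.likes))  # prints: 0 1

post.replicate(max_ops=1)  # the 'unlike' finally arrives
print(len(post.primary.likes), len(post.follower.likes))  # prints: 0 0
```

Making follower reads wait until replication has caught up would remove the stale like, but during a network partition the follower would then have to refuse reads, which is exactly the consistency-versus-availability trade-off the CAP theorem describes.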

[ETA] I see this essay as somewhat complementary to my last one, which contrasted the ways information technology enables us to be more private with the ways it also enables us to publicize our lives. There I talked about the role of consumers of technology in determining its direction; this article is about the role of the creators.

[Edit 2] Changed the British spelling ‘wilful’ to American.

Thanks to Jonathan Mayer for comments on a draft.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

Entry filed under: Uncategorized. Tags: ethics, privacy, technology.

13 Comments

1. tedhoward | June 11, 2011 at 2:58 pm

The ‘engineer-run’ mentality of many current firms certainly creates non-technical problems. But it’s not just a morality issue. There is a pervasive and willful lawlessness here that I haven’t experienced elsewhere (4 major cities, 2 countries). It pervades local society and the VCs actively encourage it.

What appear to be speed traps on 280 must just be cops sleeping, because they never cared that I drove 90-100 MPH. At one job, I saw someone copying art, including the logo, from a major national brand. I said “Wow. We have a marketing/IP deal with ____?” The response was “No. We just copy their stuff.” YouTube *knew* they were profiting from piracy. Google bought them knowing that YouTube was acting illegally. Oracle’s Java lawsuit against Google has turned up source code files that were blatantly copied in violation of the code licenses. Google started copying authors’ books without asking anyone. Examples of knowingly-illegal activity are trivial to find in Silicon Valley/SF.

Lawlessness is rampant in the Bay area.

2. tedhoward | June 11, 2011 at 3:03 pm

I’m only adding this because it is literally the next news/blog story I saw: http://www.betabeat.com/2011/06/10/google-app-store-now-offering-way-to-cheat-wsj-paywall/. Many technologists would not see anything wrong with this app. Most humans, however, understand that the purpose of the app is to steal something of value.

3. Neil Kandalgaonkar | June 11, 2011 at 5:13 pm

@tedhoward: Most of your examples relate to copyright. While there are technical violations of the law involved, it is at least debatable whether these constitute moral questions. After all, in reality, sharing ideas greatly increases the general welfare, and under our current legal regime it tends to have little effect on creators, and merely reduce the expected returns for rentiers.

Also, Silicon Valley is hardly the epicenter of copyright violation. In places like China, copying an entire business from product to logo is routine. Even for entire websites. Many would be stunned at the idea that the West considers this immoral.

4. Neil Kandalgaonkar | June 11, 2011 at 5:36 pm

In any case, let’s not get distracted by copyright issues — I think the blog post was about more substantial harms, to personal privacy especially. It is clear that some of the Valley’s biggest success stories have a very cavalier attitude there. Facebook’s motto is “move fast and break things”. Facebook has shown that they will do anything and everything to usher us into the future where advertisers know more about us than our best friends.

In Canada, there is a tradition of giving newly graduated engineers an iron ring. It’s made from the remains of a bridge that collapsed. Something to think about as you design the next building or vehicle. Would that we had a similar ethical culture.

5. Arvind Narayanan | June 11, 2011 at 6:34 pm

The bit about the ring is fascinating. Thanks for sharing.

6. Brian Slesinsky | June 12, 2011 at 5:45 pm

The implementation of a “like” button does have consequences and we do have a responsibility for that. However, I think it has to be put into perspective – it hardly compares to the amount of power over people’s lives held by the police, the courts, the health industry, or the financial industry. While software engineers aren’t used to holding power, I would say we’re a relatively ethical bunch compared to, say, stockbrokers or bankers.

7. Arvind Narayanan | June 12, 2011 at 6:01 pm

Brian,

Note that I’m not calling software developers unethical; I’m saying that we shirk ethical questions, which is different.

The comparison between developers and the police/courts etc. isn’t so simple because of scale. Who holds greater power—one who has absolute power over a single person, or one who releases a feature that can significantly empower or endanger the lives of a million?

Our brains are ill-equipped to consider questions like these; indeed, that’s probably why the Like button feels like a frivolous thing to be talking about, even after looking at an example of what it’s being used for. But that’s all the more reason why we need some sort of moral calculus.

8. Chris | June 14, 2011 at 1:56 am

Actually, I think that to shirk ethical questions is to be unethical.

That said, who holds greater power—one who has absolute power over a Like button, or one who destroys an economy that can significantly empower or endanger the lives of a million?

Techies are definitely shirking ethical questions, but they have a long way to go before they can do as much damage as bankers, stockbrokers, the government, …

9. Benlog » with great power… | June 13, 2011 at 2:31 am

[…] to read it, because it usually packs a particularly high signal to noise ratio. His latest post In Silicon Valley, Great Power but No Responsibility, is awesome: We’re at a unique time in history in terms of technologists having so much direct […]

10. Amar | June 13, 2011 at 2:51 pm

Berkman recently had a symposium that addressed a few of these questions — maybe of interest — http://www.hyperpublic.org/schedule/

11. anonymouse | June 14, 2011 at 8:58 pm

12. Arvind Narayanan | June 14, 2011 at 11:07 pm

I assume that’s from Jurassic Park. The question of ethicality of inventions with destructive potential is very old; that’s not quite what I wanted to discuss in this essay. Internet technology is different from nukes or Jurassic Park in at least three ways: 1. It’s obvious that broadly, the social impact has been hugely positive. No one is arguing that we should uninvent anything. 2. The inventors now have their hands on the switch, so the old social processes for decisionmaking don’t quite apply anymore. 3. The scale of impact is higher than ever before.

13. Colin Watson | July 9, 2011 at 2:57 pm

My experience – and I’ll concede that this is probably skewed because I work at a free software company, and even before that I was working alongside free software types – is that the engineers in a company are the ones who spend most time thinking about ethical issues. Without evidence, I’d guess that this may be because the process of making a buck is often (not always, of course!) in tension with ethics, and good software companies separate at least some of their engineers from the more commercial side of their operations so that the engineers can focus on engineering. This means that the engineers are often the people who end up saying “hang on a minute, we need to think about this”.

I think it’s important here to separate the thinking of people running technology companies from the engineers on the ground writing the code. It’s far from obvious that any given feature you see is driven by the latter rather than the former.