Posts tagged ‘anonymity’

Good and bad reasons for anonymizing data

Ed Felten and I recently wrote a response to a poorly reasoned defense of data anonymization. This doesn’t mean, however, that there’s never a place for anonymization. Here’s my personal view on some good and bad reasons for anonymizing data before sharing it.

Good: We’re using anonymization to keep honest people honest. We’re only providing the data to insiders (employees) or semi-insiders (research collaborators), and we want to help them resist the temptation to peep.

Probably good: We’re sharing data only with a limited set of partners. These partners have a reputation to protect; they have also signed legal agreements that specify acceptable uses, retention periods, and audits.

Possibly good: We de-identified the data at a big cost in utility — for example, by making high-dimensional data low-dimensional via “vertical partitioning” — but it still enables some useful data analysis. (There are significant unexplored research questions here, and technically sound privacy guarantees may be possible.)

Reasonable: The data needed to be released no matter what; techniques like differential privacy didn’t produce useful results on our dataset. We released de-identified data and decided to hope for the best.

Reasonable: The auxiliary data needed for de-anonymization doesn’t currently exist publicly and/or on a large scale. We’re acting on the assumption that it won’t materialize in a relevant time-frame and are willing to accept the risk that we’re wrong.

Ethically dubious: The privacy harm to individuals is outweighed by the greater good to society. Related: de-anonymization is not as bad as many other privacy risks that consumers face.

Sometimes plausible: The marginal benefit of de-anonymization (compared to simply using the auxiliary dataset for marketing or whatever purpose) is so low that even the small cost of skilled effort is a sufficient deterrent. Adversaries will prefer other means of acquiring equivalent data — through purchase, if they are lawful, or hacking, if they’re not.[*]

Bad: Since there aren’t many reports of de-anonymization except research demonstrations, it’s safe to assume it isn’t happening.

It’s surprising how often this argument is advanced considering that it’s a complete non-sequitur: malfeasors who de-anonymize are obviously not going to brag about it. The next argument is a self-interested version that takes this fact into account.

Dangerously rational: There won’t be a PR fallout from releasing anonymized data because researchers no longer have the incentive for de-anonymization demonstrations, whereas if malfeasors do it they won’t publicize it (elaborated here).

Bad: The expertise needed for de-anonymization is such a rare skill that it’s not a serious threat (addressed here).

Bad: We simulated some attacks and estimated that only 1% of records are at risk of being de-anonymized. (Completely unscientific; addressed here.)

Qualitative risk assessment is valuable; quantitative methods can be a useful heuristic to compare different choices of anonymization parameters if one has already decided to release anonymized data for other reasons, but can’t be used as a justification of the decision.

[*] This is my restatement of one of Yakowitz’s arguments in Tragedy of the Data Commons.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

New Developments in Deanonymization

This post is a roundup of developments in deanonymization in the last few months. Let’s start with two stories relating to how a malicious website can silently discover the identity of a visitor, which is an insidious type of privacy breach that I’ve written about quite a bit (1, 2, 3, 4, 5, 6).

Firefox bug exposed your identity. The first is a vulnerability resulting from a Firefox bug in the implementation of functions like exec and test. The bug allows a website to learn the URL of an embedded iframe from some other domain. How can this lead to uncovering the visitor’s identity? Because twitter.com/lists redirects to twitter.com/<username>/lists. This allows a malicious website to open a hidden iframe pointing to twitter.com/lists, query the URL after redirection, and learn the visitor’s Twitter handle (if they are logged in). [1,2]

This is very similar to a previous bug in Firefox that led to the same type of vulnerability. The URL redirect that was exploited there was google.com/profiles/me → user-specific URL. It would be interesting to find and document all such generic-URL → user-specific-URL redirects in major websites. I have a feeling this won’t be the last time such redirection will be exploited.

Visitor deanonymization in the wild. The second story is an example of visitor deanonymization happening in the wild. It appears that the technique utilizes a tracking cookie from a third-party domain to which the visitor previously gave their email and other information. In other words, it is #3 in my five-fold categorization of ways in which identity can be attached to browsing logs.

I don’t consider this instance to be particularly significant — I’m sure there are other implementations in the wild — and it’s not technically novel, but this is the first time as far as I know that it’s gotten significant attention from the public, even if only in tech circles. I see this as a first step in a feedback loop of changing expectations about online anonymity emboldening more sites to deanonymize visitors, thus further lowering the expectation of privacy.

Deanonymization of mobility traces. Let’s move on to the more traditional scenario of deanonymization of a dataset by combining it with an auxiliary, public dataset which has users’ identities. Srivatsa and Hicks have a new paper with demonstrations of deanonymization of mobility traces, i.e., logs of users’ locations over time. They use public social networks as auxiliary information, based on the insight that pairs of people who are friends are more likely to meet with each other physically. The deanonymization of Bluetooth contact traces of attendees of a conference based on their DBLP co-authorship graph is cute.

This paper adds to the growing body of evidence that anonymization of location traces can be reversed, even if the data is obfuscated by introducing errors (noise).

So many datasets, so little time. Speaking of mobility traces, Jason Baldridge points me to a dataset containing mobility traces (among other things) of 5 million “anonymous” users in the Ivory Coast recently released by telecom operator Orange. A 250-word research proposal is required to get access to the data, which is much better from a privacy perspective than a 1-click download. It introduces some accountability without making it too onerous to get the data.

In general, the incentive for computer science researchers to perform practical demonstrations of deanonymization has diminished drastically. Our goal has always been to showcase new techniques and improve our understanding of what’s possible, and not to name and shame. Even if the Orange dataset were more easily downloadable, I would think that the incentive for deanonymization researchers would be low, now that the Srivatsa and Hicks paper exists and we know for sure that mobility traces can be deanonymized, even though the experiments in the paper are on a far smaller scale.

Head in the sand: rational?! I gave a talk at a privacy workshop recently taking a look back at how companies have reacted to deanonymization research. My main point was that there’s a split between the take-your-data-and-go-home approach (not releasing data because of privacy concerns) and the head-in-the-sand approach (pretending the problem doesn’t exist). Unfortunately but perhaps unsurprisingly, there has been very little willingness to take a middle ground, engaging with data privacy researchers and trying to adopt technically sophisticated solutions.

Interestingly, head-in-the-sand might be rational from companies’ point of view. On the one hand, researchers don’t have the incentive for deanonymization anymore. On the other hand, if malicious entities do it, naturally they won’t talk about it in public, so there will be no PR fallout. Regulators have not been very aggressive in investigating anonymized data releases in the absence of a public outcry, so that may be a negligible risk.

Some have questioned whether deanonymization in the wild is actually happening. I think it’s a bit silly to assume that it isn’t, given the economic incentives. Of course, I can’t prove this and probably never can. No company doing it will publicly talk about it, and the privacy harms are so indirect that tying them to a specific data release is next to impossible. I can only offer anecdotes to explain my position: I have been approached multiple times by organizations who wanted me to deanonymize a database they’d acquired, and I’ve had friends in different industries mention casually that what they do on a daily basis to combine different databases together is essentially deanonymization.

[1] For a discussion of why a social network profile is essentially equivalent to an identity, see here and the epilog here.

[2] Mozilla pulled Firefox 16 as a result and quickly fixed the bug.


Is Writing Style Sufficient to Deanonymize Material Posted Online?

I have a new paper appearing at IEEE S&P with Hristo Paskov, Neil Gong, John Bethencourt, Emil Stefanov, Richard Shin and Dawn Song on Internet-scale authorship identification based on stylometry, i.e., analysis of writing style. Stylometric identification exploits the fact that we all have a ‘fingerprint’ based on our stylistic choices and idiosyncrasies with the written word. To quote from my previous post speculating on the possibility of Internet-scale authorship identification:

Consider two words that are nearly interchangeable, say ‘since’ and ‘because’. Different people use the two words in a differing proportion. By comparing the relative frequency of the two words, you get a little bit of information about a person, typically under 1 bit. But by putting together enough of these ‘markers’, you can construct a profile.
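As a minimal sketch of a single such marker (an illustration, not the implementation used in the paper), the following Python computes the share of ‘since’ among all uses of ‘since’ or ‘because’ in a text. The function name `marker_ratio` and the sample sentences are assumptions made up for this example.

```python
import re

def marker_ratio(text, word_a="since", word_b="because"):
    """Return the share of word_a among all occurrences of word_a or word_b.

    Returns None when neither word appears, because the marker is undefined then.
    """
    tokens = re.findall(r"[a-z']+", text.lower())
    count_a = tokens.count(word_a)
    count_b = tokens.count(word_b)
    total = count_a + count_b
    return count_a / total if total else None

# Two made-up writing samples with different habits.
sample_1 = "I stayed home because it rained. I left early because I was tired. Since then I walk."
sample_2 = "Since it rained, I stayed home. Since I was tired, I left."

ratio_1 = marker_ratio(sample_1)  # one 'since' out of three marker words
ratio_2 = marker_ratio(sample_2)  # only 'since' is used
```

A single ratio like this says very little about an author. The point of the quoted passage is that many such weak markers, taken together, can add up to a profile.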

The basic idea that people have distinctive writing styles is very well-known and well-understood, and there is an extremely long line of research on this topic. This research began in modern form in the early 1960s when statisticians Mosteller and Wallace determined the authorship of the disputed Federalist papers, and were featured in TIME magazine. It is never easy to make a significant contribution in a heavily studied area. No surprise, then, that my initial blog post was written about three years ago, and the Stanford-Berkeley collaboration began in earnest over two years ago.

Impact. So what exactly did we achieve? Our research has dramatically increased the number of authors that can be distinguished using writing-style analysis: from about 300 to 100,000. More importantly, the accuracy of our algorithms drops off gently as the number of authors increases, so we can be confident that they will continue to perform well as we scale the problem even further. Our work is therefore the first time that stylometry has been shown to have serious implications for online anonymity.[1]

Anonymity and free speech have been intertwined throughout history. For example, anonymous discourse was essential to the debates that gave birth to the United States Constitution. Yet a right to anonymity is meaningless if an anonymous author’s identity can be unmasked by adversaries. While there have been many attempts to legally force service providers and other intermediaries to reveal the identity of anonymous users, courts have generally upheld the right to anonymity. But what if authors can be identified based on nothing but a comparison of the content they publish to other web content they have previously authored?

Experiments. Our experimental methodology is set up to directly address this question. Our primary data source was the ICWSM 2009 Spinn3r Blog Dataset, a large collection of blog posts made available to researchers by Spinn3r.com, a provider of blog-related commercial data feeds. To test the identifiability of an author, we remove k random posts (typically k = 3) from the corresponding blog, treat those posts as if they were anonymous, and apply our algorithm to try to determine which blog they came from. In these experiments, the labeled (identified) and unlabeled (anonymous) texts are drawn from the same context. We call this post-to-blog matching.

In some applications of stylometric authorship recognition, the context for the identified and anonymous text might be the same. This was the case in the famous study of the Federalist papers: each author hid his name from some of his papers, but wrote about the same topic. In the blogging scenario, an author might decide to selectively distribute a few particularly sensitive posts anonymously through a different channel. But in other cases, the unlabeled text might be political speech, whereas the only available labeled text by the same author might be a cooking blog, i.e., the labeled and unlabeled text might come from different contexts. Context encompasses much more than topic: the tone might be formal or informal; the author might be in a different mental state (e.g., more emotional) in one context versus the other, etc.

We feel that it is crucial for authorship recognition techniques to be validated in a cross-context setting. Previous work has fallen short in this regard because of the difficulty of finding a suitable dataset. We were able to obtain about 2,000 pairs (and a few triples, etc.) of blogs, each pair written by the same author, by looking at a dataset of 3.5 million Google profiles and searching for users who listed more than one blog in the ‘websites’ field.[2] We are thankful to Daniele Perito for sharing this dataset. We added these blogs to the Spinn3r blog dataset to bring the total to 100,000. Using this data, we performed experiments as follows: remove one of a pair of blogs written by the same author, and use it as unlabeled text. The goal is to find the other blog written by the same author. We call this blog-to-blog matching. Note that although the number of blog pairs is only a few thousand, we match each anonymous blog against all 99,999 other blogs.

Results. Our baseline result is that in the post-to-blog experiments, the author was correctly identified 20% of the time. This means that when our algorithm uses three anonymously published blog posts to rank the possible authors in descending order of probability, the top guess is correct 20% of the time.

But it gets better from there. In 35% of cases, the correct author is one of the top 20 guesses. Why does this matter? Because in practice, algorithmic analysis probably won’t be the only step in authorship recognition, and will instead be used to produce a shortlist for further investigation. A manual examination may incorporate several characteristics that the automated analysis does not, such as choice of topic (our algorithms are scrupulously “topic-free”). Location is another signal that can be used: for example, if we were trying to identify the author of the once-anonymous blog Washingtonienne we’d know that she almost certainly resides in or around Washington, D.C. Alternately, a powerful adversary such as law enforcement may require Blogger, WordPress, or another popular blog host to reveal the login times of the top suspects, which could be correlated with the timing of posts on the anonymous blog to confirm a match.
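To make the “top guess” and “top 20 guesses” figures concrete, here is a small sketch of how top-k accuracy can be computed from ranked guesses. The helper `top_k_accuracy`, the author names, and the rankings are hypothetical toy values, not data from the paper.

```python
def top_k_accuracy(rankings, true_authors, k):
    """Fraction of test cases whose true author appears among the first k guesses."""
    hits = sum(
        1 for ranked, truth in zip(rankings, true_authors) if truth in ranked[:k]
    )
    return hits / len(true_authors)

# Hypothetical ranked guesses for four anonymous samples (best guess first).
rankings = [
    ["alice", "bob", "carol"],
    ["bob", "alice", "carol"],
    ["carol", "bob", "alice"],
    ["bob", "carol", "alice"],
]
true_authors = ["alice", "alice", "alice", "carol"]

top1 = top_k_accuracy(rankings, true_authors, k=1)  # only the first sample is a hit
top2 = top_k_accuracy(rankings, true_authors, k=2)  # samples 1, 2 and 4 are hits
```

Top-k accuracy can only grow as k grows, which is why a shortlist is so much easier to produce than a single correct guess.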

We can also improve the accuracy significantly over the baseline of 20% for authors for whom we have more than an average number of labeled or unlabeled blog posts. For example, with 40–50 labeled posts to work with (the average is 20 posts per author), the accuracy goes up to 30–35%.

An important capability is confidence estimation, i.e., modifying the algorithm to also output a score reflecting its degree of confidence in the prediction. We measure the efficacy of confidence estimation via the standard machine-learning metrics of precision and recall. We find that we can improve precision from 20% to over 80% with only a halving of recall. In plain English, what these numbers mean is: the algorithm does not always attempt to identify an author, but when it does, it finds the right author 80% of the time. Overall, it identifies 10% (half of 20%) of authors correctly, i.e., 10,000 out of the 100,000 authors in our dataset. Strong as these numbers are, it is important to keep in mind that in a real-life deanonymization attack on a specific target, it is likely that confidence can be greatly improved through methods discussed above — topic, manual inspection, etc.
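The trade-off between precision and recall can be sketched with a simple confidence threshold. The scores below are invented for illustration, and the function `precision_recall` is a hypothetical helper that follows the plain-English definitions in the paragraph above: precision is measured over attempted identifications, recall over all authors.

```python
def precision_recall(predictions, threshold):
    """predictions: list of (confidence, is_correct) pairs, one per anonymous author.

    An identification is only attempted when confidence >= threshold.
    Precision = correct attempts / attempts; recall = correct attempts / all authors.
    """
    attempted = [correct for conf, correct in predictions if conf >= threshold]
    correct_attempts = sum(attempted)
    precision = correct_attempts / len(attempted) if attempted else 0.0
    recall = correct_attempts / len(predictions)
    return precision, recall

# Hypothetical scores: 10 authors, 2 of whose top guesses are correct (a 20% baseline).
predictions = [
    (0.9, True), (0.8, False), (0.4, True), (0.3, False), (0.3, False),
    (0.2, False), (0.2, False), (0.1, False), (0.1, False), (0.1, False),
]

baseline = precision_recall(predictions, threshold=0.0)   # always attempt
strict = precision_recall(predictions, threshold=0.85)    # attempt only when very sure
```

In this toy data, raising the threshold halves recall (from 20% to 10%) while precision rises, which is the same shape of trade-off as the one reported above, though the exact numbers here are made up.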

We confirmed that our techniques work in a cross-context setting (i.e., blog-to-blog experiments), although the accuracy is lower (~12%). Confidence estimation works really well in this setting as well and boosts accuracy to over 50% with a halving of recall. Finally, we also manually verified that in cross-context matching we find pairs of blogs that are hard for humans to match based on topic or writing style; we describe three such pairs in an appendix to the paper. For detailed graphs as well as a variety of other experimental results, see the paper.

We see our results as establishing early lower bounds on the efficacy of large-scale stylometric authorship recognition. Having cracked the scale barrier, we expect accuracy improvements to come easier in the future. In particular, we report experiments in the paper showing that a combination of two very different classifiers works better than either, but there is a lot more mileage to squeeze from this approach, given that ensembles of classifiers are known to work well for most machine-learning problems. Also, there is much work to be done in terms of analyzing which aspects of writing style are preserved across contexts, and using this understanding to improve accuracy in that setting.

Techniques. Now let’s look in more detail at the techniques I’ve hinted at above. The author identification task proceeds in two steps: feature extraction and classification. In the feature extraction stage, we reduce each blog post to a sequence of about 1,200 numerical features (a “feature vector”) that acts as a fingerprint. These features fall into various lexical and grammatical categories. Two example features: the frequency of uppercase words, the number of words that occur exactly once in the text. While we mostly used the same set of features that the authors of the Writeprints paper did, we also came up with a new set of features that involved analyzing the grammatical parse trees of sentences.
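The two example features can be sketched in a few lines of Python. This is only an illustration of the idea: the function `extract_features` is hypothetical, it produces two numbers rather than about 1,200, and the exact definition of an “uppercase word” (here, a word of at least two letters written entirely in capitals) is an assumption.

```python
import re
from collections import Counter

def extract_features(text):
    """Return a tiny feature vector: [uppercase-word frequency, hapax count]."""
    words = re.findall(r"[A-Za-z]+", text)
    if not words:
        return [0.0, 0]
    # Words written entirely in capitals, e.g. "NOT"; single letters like "I" are skipped.
    uppercase = sum(1 for w in words if len(w) > 1 and w.isupper())
    # Words that occur exactly once in the text, ignoring case.
    counts = Counter(w.lower() for w in words)
    hapaxes = sum(1 for c in counts.values() if c == 1)
    return [uppercase / len(words), hapaxes]

features = extract_features("I did NOT say that. I said the cat sat on the mat.")
```

The sample sentence has 13 words, one of which is fully capitalized, and nine words that appear only once.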

An important component of feature extraction is to ensure that our analysis is purely stylistic. We do this in two ways: first, we preprocess the blog posts to filter out signatures, markup, or anything that might not be directly entered by a human. Second, we restrict our features to those that bear little resemblance to the topic of discussion. In particular, our word-based features are limited to stylistic “function words” that we list in an appendix to the paper.

In the classification stage, we algorithmically “learn” a characterization of each author (from the set of feature vectors corresponding to the posts written by that author). Given a set of feature vectors from an unknown author, we use the learned characterizations to decide which author it most likely corresponds to. For example, viewing each feature vector as a point in a high-dimensional space, the learning algorithm might try to find a “hyperplane” that separates the points corresponding to one author from those of every other author, and the decision algorithm might determine, given a set of hyperplanes corresponding to each known author, which hyperplane best separates the unknown author from the rest.
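The hyperplane picture can be sketched with a basic perceptron trained one-vs-rest. To be clear, the perceptron is a stand-in chosen for brevity, not the classifier used in the paper, and the two-dimensional feature vectors and author names are toy assumptions.

```python
def train_perceptron(points, labels, epochs=50):
    """Learn (weights, bias) of a hyperplane separating label +1 from label -1."""
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(points, labels):
            score = sum(w * xi for w, xi in zip(weights, x)) + bias
            if y * score <= 0:  # misclassified: nudge the hyperplane toward x
                weights = [w + y * xi for w, xi in zip(weights, x)]
                bias += y
    return weights, bias

def train_one_vs_rest(posts_by_author):
    """Train one hyperplane per author: that author's posts vs. everyone else's."""
    all_posts = [(x, a) for a, xs in posts_by_author.items() for x in xs]
    points = [x for x, _ in all_posts]
    return {
        author: train_perceptron(
            points, [1 if a == author else -1 for _, a in all_posts]
        )
        for author in posts_by_author
    }

def identify(models, unknown_posts):
    """Pick the author whose hyperplane gives the unknown posts the highest total score."""
    def total(model):
        weights, bias = model
        return sum(
            sum(w * xi for w, xi in zip(weights, x)) + bias for x in unknown_posts
        )
    return max(models, key=lambda author: total(models[author]))

# Toy feature vectors (two features per post) for three hypothetical authors.
posts_by_author = {
    "author_a": [(0, 0), (1, 0)],
    "author_b": [(4, 0), (5, 1)],
    "author_c": [(0, 4), (1, 5)],
}
models = train_one_vs_rest(posts_by_author)
guess = identify(models, [(4.5, 0.5), (5, 0)])  # two posts from an unknown author
```

Comparing raw scores across separately trained hyperplanes is a simplification. A more careful version would normalize or calibrate the scores before comparing them.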

We made several innovations that allowed us to achieve the accuracy levels that we did. First, contrary to some previous authors who hypothesized that only relatively straightforward “lazy” classifiers work for this type of problem, we were able to avoid various pitfalls and use more high-powered machinery. Second, we developed new techniques for confidence estimation, including a measure very similar to “eccentricity” used in the Netflix paper. Third, we developed techniques to improve the performance (speed) of our classifiers, detailed in the paper. This is a research contribution by itself, but it also enabled us to rapidly iterate the development of our algorithms and optimize them.
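For readers unfamiliar with eccentricity: roughly, it is the gap between the best and second-best candidate scores, measured in units of the standard deviation of all candidate scores. The sketch below shows that basic idea only. It is not the confidence measure from this paper, and the use of the population standard deviation and the example scores are assumptions.

```python
import statistics

def eccentricity(scores):
    """(best score - second-best score) / standard deviation of all scores.

    Expects at least two candidate scores. Returns 0.0 when all scores are equal.
    """
    ordered = sorted(scores, reverse=True)
    spread = statistics.pstdev(scores)
    if spread == 0:
        return 0.0
    return (ordered[0] - ordered[1]) / spread

# Hypothetical classifier scores over five candidate authors.
clear_winner = eccentricity([10, 2, 1, 1, 1])    # top candidate stands out
close_call = eccentricity([10, 9.5, 1, 1, 1])    # top two are nearly tied
```

A prediction would be reported only when the value exceeds some chosen threshold, so that near-ties such as the second example are treated as “no answer”.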

In an earlier article, I noted that we don’t yet have as rigorous an understanding of deanonymization algorithms as we would like. I see this paper as a significant step in that direction. In my series on fingerprinting, I pointed out that in numerous domains, researchers have considered classification/deanonymization problems with tens of classes, with implications for forensics and security-enhancing applications, but that to explore the privacy-infringing/surveillance applications the methods need to be tweaked to be able to deal with a much larger number of classes. Our work shows how to do that, and we believe that insights from our paper will be generally applicable to numerous problems in the privacy space.

Concluding thoughts. We’ve thrown open the doors for the study of writing-style based deanonymization that can be carried out on an Internet-wide scale, and our research demonstrates that the threat is already real. We believe that our techniques are valuable by themselves as well.

The good news for authors who would like to protect themselves against deanonymization is that manually changing one’s style appears to be enough to throw off these attacks. Developing fully automated methods to hide traces of one’s writing style remains a challenge. For now, few people are aware of the existence of these attacks and defenses; all the sensitive text that has already been anonymously written is also at risk of deanonymization.

[1] A team from Israel have studied authorship recognition with 10,000 authors. While this is interesting and impressive work, and bears some similarities with ours, they do not restrict themselves to stylistic analysis, and therefore the method is comparatively limited in scope. Incidentally, they have been in the news recently for some related work.

[2] Although the fraction of users who listed even a single blog in their Google profile was small, there were more than 2,000 users who listed multiple. We did not use the full number that was available.


No Two Digital Cameras Are the Same: Fingerprinting Via Sensor Noise

The previous article looked at how pieces of blank paper can be uniquely identified. This article continues the fingerprinting theme to another domain, digital cameras, and ends by speculating on the possibility of applying the technique on an Internet-wide scale.

For various kinds of devices like digital cameras and RFID chips, even supposedly identical units that come out of a manufacturing plant behave slightly differently in characteristic ways, and can therefore be distinguished based on their output or behavior. How could this be? The unifying principle is this:

Digital camera identification belongs to a class of techniques that exploits ‘pattern noise’ in the ‘sensor arrays’ that capture images. The same techniques can be used to fingerprint a scanner by analyzing pixel-level patterns in the images scanned by it, but that’ll be the focus of a later article.

A long-exposure dark frame [source]. Three ‘hot pixels’ and some other sensor noise can be seen.

A photo taken in the absence of any light doesn’t look completely black; a variety of factors introduce noise. There is random noise that varies in every image, but there is also ‘pattern noise’ due to inherent structural defects or irregularities in the physical sensor array. The key property of the latter kind of noise is that it manifests the same way in every image taken by the camera.[1] Thus, the total noise vector produced by a camera is not identical between images, nor is it completely independent.

Nevertheless, separating the pattern noise from random noise and the image itself — after all, a good camera will seek to minimize the strength or ‘power’ of the noise in relation to the image — is a very difficult task, and is the primary technical challenge that camera fingerprinting techniques must address.

Security vs. privacy. A quick note about the applications of camera fingerprinting. We saw in the previous article that there are security-enhancing and privacy-infringing applications of document fingerprinting. In fact, this is almost always the case with fingerprinting techniques. [2]

Camera fingerprinting can be used on the one hand for detecting forgeries (e.g., photoshopped images), and to aid criminal investigations by determining who (or rather, which camera) might have taken a picture. On the other hand, it could potentially also be used for unmasking individuals who wish to disseminate photos anonymously online.

Sadly, most papers studying fingerprinting study only the former type of application, which is why we’ll have to speculate a bit on the privacy impact, even though the underlying math of fingerprinting is the same.

Another point to note is that because of the focus on forensics, most of the work in this area so far has studied distinguishing different camera models. But there are some preliminary results on distinguishing ‘identical’ cameras, and it appears that the same techniques will work.

In more detail. Let’s look at what I think is the most well-known paper on sensor pattern noise fingerprinting, by Binghamton University researchers Jan Lukáš, Jessica Fridrich, and Miroslav Goljan. [3] Here’s how it works: the first step is to build a reference pattern of a camera from multiple known images taken from it, so that later an unsourced image can be compared against these reference patterns. The authors suggest using at least 50, but for good measure, they use 320 in their experiments. In the forensics context, the investigator probably has physical possession of the camera and therefore can generate an unlimited number of images. We’ll discuss what this requirement means in the privacy-breach context later.

There are two steps to build the reference pattern. First, for each image, a denoising filter is applied, and the denoised image is subtracted from the original to leave only the noise. Next, the noise is averaged across all the reference images — this way the random noise cancels out and leaves the pattern noise.
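The two steps can be sketched on tiny synthetic images. Note the assumptions: a 3x3 mean filter stands in for the denoising filter (the paper uses a wavelet-based one), images are plain lists of lists of grayscale values, and all function names are hypothetical.

```python
import random

def mean_filter(image):
    """Stand-in denoiser: replace each pixel by the mean of its 3x3 neighborhood."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            neighbors = [
                image[rr][cc]
                for rr in range(max(0, r - 1), min(h, r + 2))
                for cc in range(max(0, c - 1), min(w, c + 2))
            ]
            out[r][c] = sum(neighbors) / len(neighbors)
    return out

def noise_residual(image):
    """Step 1: original minus denoised image, leaving (approximately) only the noise."""
    smooth = mean_filter(image)
    return [[p - s for p, s in zip(row, srow)] for row, srow in zip(image, smooth)]

def reference_pattern(images):
    """Step 2: average the residuals of many images from one camera, pixel by pixel."""
    residuals = [noise_residual(img) for img in images]
    h, w = len(residuals[0]), len(residuals[0][0])
    return [
        [sum(res[r][c] for res in residuals) / len(residuals) for c in range(w)]
        for r in range(h)
    ]

random.seed(0)  # fixed seed so the synthetic example is repeatable
images = []
for _ in range(20):
    # Flat gray image plus random noise that differs from image to image.
    img = [[100 + random.uniform(-1, 1) for _ in range(4)] for _ in range(4)]
    img[1][1] += 10  # simulated 'hot pixel' present in every image
    images.append(img)

pattern = reference_pattern(images)
```

In the averaged pattern the simulated hot pixel at row 1, column 1 stands out clearly, while the per-image random noise largely cancels.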

Comparing a new image to a reference pattern, to test if it came from that camera, is easy: extract the noise from the test image, and compare this noise pixel-by-pixel with the reference noise. The noise from the test image includes random noise, so the match won’t be close to perfect, but nevertheless the correlation between the two noise patterns will be roughly equal to the contribution of pattern noise towards the total noise in the test image. On the other hand, if the test image didn’t come from the same camera, the correlation will be close to zero.
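A pixel-by-pixel comparison of this kind can be sketched as a Pearson correlation between two noise arrays. The 2x2 arrays below are made-up values chosen to show a near match and a non-match, and `correlation` is a hypothetical helper name.

```python
import math

def correlation(noise_a, noise_b):
    """Pearson correlation between two equally sized 2D noise arrays."""
    a = [v for row in noise_a for v in row]
    b = [v for row in noise_b for v in row]
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    da = [v - mean_a for v in a]
    db = [v - mean_b for v in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return sum(x * y for x, y in zip(da, db)) / denom if denom else 0.0

reference = [[1.0, -1.0], [-1.0, 1.0]]      # reference pattern of a known camera
same_camera = [[1.2, -0.7], [-1.1, 0.8]]    # same pattern plus a little random noise
other_camera = [[1.0, 1.0], [-1.0, -1.0]]   # unrelated pattern

match = correlation(same_camera, reference)
mismatch = correlation(other_camera, reference)
```

In practice the decision would come from comparing the correlation against a threshold chosen for an acceptable false accept rate, rather than from eyeballing the value.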

The authors experimented with nine cameras, of which two were from the same brand and model (Olympus Camedia C765). In addition, two other cameras had the same type of sensor. There was not a single error in their 2,700 tests, including those involving the two ‘identical’ cameras: in each case, the algorithm correctly identified which of the nine cameras a given image came from. By extrapolating the correlation curves, they conservatively estimate that for a False Accept Rate of 10^-3, their method achieves a False Reject Rate of anywhere between 10^-2 and 10^-10 or even less depending on the camera model and camera settings.

The takeaway from this seems to be that distinguishing between cameras of different models can be performed with essentially perfect accuracy. Distinguishing between cameras of the same model also seems to have very high accuracy, but it is hard to generalize because of the small sample size.

Improvements. Impressive as the above numbers are, there are at least two major ways in which this result can be, and has been, improved. First, the Binghamton paper is focused on a specific signal, sensor noise. But there are several stages in the image acquisition and processing pipeline in the camera, each of which could leave idiosyncratic effects on the image. This paper out of Turkey incorporates many such effects by considering all patterns of certain types that occur in the lower order (least significant) bits of the image, which seems like a rather powerful technique.

The effects other than sensor noise seem to help more with identifying the camera model than the specific device, but to the extent that the former is a component of the latter, it is useful. They achieve a 97.5% accuracy among 16 test cameras — but with cellphone cameras with pictures at a resolution of just 640×480.

Second is the effect of the scene itself on the noise. Denoising transformations are not perfect — sharp boundaries look like noise. The Binghamton researchers picked their denoising filter (a wavelet transform) to minimize this problem, but a recent paper by Chang-Tsun Li claims to do it better, and shows even better numerical results: with 6 cameras (all different models), accurate (over 99%) identification for image fragments cropped to just 256 x 512.

What does this mean for privacy? I said earlier that there is a duality between security and privacy, but let’s examine the relationship in more detail. In privacy-infringing applications like mass surveillance, the algorithm need not always produce an answer, and it can occasionally be wrong when it does. The penalty for errors is much lower. On the other hand, the matching algorithm in surveillance-like applications needs to handle a far larger number of candidate cameras. The key point is:

My intuition is that state-of-the-art techniques, configured slightly differently, should allow probabilistic deanonymization from among tens of thousands of different cameras. A Flickr or Picasa profile with a few dozen images should suffice to fingerprint a camera.[4] Combined with metadata such as location, this puts us within striking distance of Internet-scale source-camera identification from anonymous images. I really hope there will be some serious research on this question.

Finally, a word on defenses. If you find yourself in a position where you wish to anonymously publicize a sensitive photograph you took, but your camera is publicly tied to your identity because you’ve previously shared pictures on social networks (and who hasn’t), how do you protect yourself?

Compressing the image is one possibility, because that destroys the ‘lower-order’ bits that fingerprinting crucially depends on. However, it would have to be way more aggressive than most camera defaults (JPEG quality factor ~60% according to one of the studies, whereas defaults are ~95%). A different strategy is rotating the image slightly in order to ‘desynchronize’ it, throwing off the fingerprint matching. An attack that defeats this will have to be much more sophisticated and will have a far higher error rate.

The deanonymization threat here is analogous to writing-style fingerprinting: there are simple defenses, albeit not foolproof, but sadly most users are unaware of the problem, let alone solutions.

[1] That was a bit simplified; mathematically, there is an additive component (dark signal nonuniformity) and a multiplicative component (photoresponse nonuniformity). The former is easy to correct for, and higher-end cameras do, but the latter isn’t.

[2] Much has been said about the tension between security and privacy at a social/legal/political level, but I’m making a relatively uncontroversial technical statement here.

[3] Fridrich is incidentally one of the pioneers of speedcubing, i.e., speed-solving the Rubik’s cube.

[4] The Binghamton paper uses 320 images per camera for building a fingerprint (and recommends at least 50); the Turkey paper uses 100, and Li’s paper 50. I suspect that if more than one image taken from the unknown camera is available, then the number of reference images can be brought down by a corresponding factor.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter or Google+.

Link Prediction by De-anonymization: How We Won the Kaggle Social Network Challenge

The title of this post is also the title of a new paper of mine with Elaine Shi and Ben Rubinstein. You can grab a PDF or a web-friendly HTML version generated using my Project Luther software.

A brief de-anonymization history. As early as the first version of my Netflix de-anonymization paper with Vitaly Shmatikov back in 2006, a colleague suggested that de-anonymization can in fact be used to game machine-learning contests—by simply “looking up” the attributes of de-anonymized users instead of predicting them. We off-handedly threw in a paragraph in our paper discussing this possibility, and a New Scientist writer seized on it as an angle for her article.[1] Nothing came of it, of course; we had no interest in gaming the Netflix Prize.

During the years 2007-2009, Shmatikov and I worked on de-anonymizing social networks. The paper that resulted (PDF, HTML) showed how to take two graphs representing social networks and map the nodes to each other based on the graph structure alone—no usernames, no nothing. As you might imagine, this was a phenomenally harder technical challenge than our Netflix work. (Backstrom, Dwork and Kleinberg had previously published a paper on social network de-anonymization; the crucial difference was that we showed how to put two social network graphs together rather than search for a small piece of graph-structured auxiliary information in a large graph.)

The context for these two papers is that data mining on social networks—whether online social networks, telephone call networks, or any type of network of links between individuals—can be very lucrative. Social networking websites would benefit from outsourcing “anonymized” graphs to advertisers and such; we showed that the privacy guarantees are questionable-to-nonexistent since the anonymization can be reversed. No major social network has gone down this path (as far as I know), quite possibly in part because of the two papers, although smaller players often fly under the radar.

The Kaggle contest. Kaggle is a platform for machine learning competitions. They ran the IJCNN social network challenge to promote research on link prediction. The contest dataset was created by crawling an online social network—which was later revealed to be Flickr—and partitioning the obtained edge set into a large training set and a smaller test set of edges augmented with an equal number of fake edges. The challenge was to predict which edges were real and which were fake. Node identities in the released data were obfuscated.

There are many, many anonymized databases out there; I come across a new one every other week. I pick a de-anonymization project if it will advance the art significantly (yes, de-anonymization is still partly an art), or if it is fun. The Kaggle contest was a bit of both, and so when my collaborators invited me to join them, it was too juicy to pass up.

The Kaggle contest is actually much more suitable to game through de-anonymization than the Netflix Prize would have been. As we explain in the paper:

One factor that greatly affects both [the privacy risk and the risk of gaming]—in opposite directions—is whether the underlying data is already publicly available. If it is, then there is likely no privacy risk; however, it furnishes a ready source of high-quality data to game the contest.

The first step was to do our own crawl of Flickr; this turned out to be relatively easy. The two graphs (the Kaggle graph and our Flickr crawl) were 95% similar, as we were later able to determine. The difference is primarily due to Flickr users adding and deleting contacts between Kaggle’s crawl and ours. Armed with the auxiliary data, we set about the task of matching up the two graphs based on the structure. To clarify: our goal was to map the nodes in the Kaggle training and test dataset to real Flickr nodes. That would allow us to simply look up the pairs of nodes in the test set in the Flickr graph to see whether or not the edge exists.

De-anonymization. Our effort validated the broad strategy in my paper with Shmatikov, which consists of two steps: “seed finding” and “propagation.” In the former step we somehow de-anonymize a small number of nodes; in the latter step we use these as “anchors” to propagate the de-anonymization to more and more nodes. In this step the algorithm feeds on its own output.

Let me first describe propagation because it is simpler.[2] As the algorithm progresses, it maintains a (partial) mapping between the nodes in the true Flickr graph and the Kaggle graph. We iteratively try to extend the mapping as follows: pick an arbitrary as-yet-unmapped node in the Kaggle graph, find the “most similar” node in the Flickr graph, and if they are “sufficiently similar,” they get mapped to each other.

Similarity between a Kaggle node and a Flickr node is defined as cosine similarity between the already-mapped neighbors of the Kaggle node and the already-mapped neighbors of the Flickr node (nodes mapped to each other are treated as identical for the purpose of cosine comparison).

In the diagram, the blue nodes have already been mapped. The similarity between A and B is 2 / (√3·√3) = ⅔. Whether or not edges exist between A and A’ or B and B’ is irrelevant.
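The similarity measure and one propagation step can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code we ran: the function names, the dict-of-sets graph representation, and the fixed `threshold` standing in for the “sufficiently similar” criterion are all hypothetical.

```python
import math


def similarity(kaggle_nbrs, flickr_nbrs, mapping):
    """Cosine similarity over already-mapped neighbors only.

    mapping is a dict from Kaggle node to Flickr node.
    """
    mapped_flickr = set(mapping.values())
    # Translate the Kaggle node's mapped neighbors into Flickr identities.
    x = {mapping[n] for n in kaggle_nbrs if n in mapping}
    # Keep only the Flickr node's neighbors that are already mapped.
    y = {n for n in flickr_nbrs if n in mapped_flickr}
    if not x or not y:
        return 0.0
    return len(x & y) / math.sqrt(len(x) * len(y))


def extend_mapping(kaggle, flickr, mapping, threshold=0.5):
    """Try to map one more Kaggle node; return True if the mapping grew.

    kaggle and flickr are dicts from node to its set of neighbors.
    threshold is a hypothetical stand-in for "sufficiently similar".
    """
    taken = set(mapping.values())
    for k, k_nbrs in kaggle.items():
        if k in mapping:
            continue
        candidates = [f for f in flickr if f not in taken]
        if not candidates:
            return False
        best = max(candidates,
                   key=lambda f: similarity(k_nbrs, flickr[f], mapping))
        if similarity(k_nbrs, flickr[best], mapping) >= threshold:
            mapping[k] = best
            return True
    return False


# Toy data mirroring the diagram: A and B each have three mapped
# neighbors, two of which correspond to each other.
mapping = {"k1": "f1", "k2": "f2", "k3": "f3", "k4": "f4"}
kaggle = {"A": {"k1", "k2", "k3"}}
flickr = {"B": {"f1", "f2", "f4"}}
score = similarity(kaggle["A"], flickr["B"], mapping)  # 2 / (sqrt(3) * sqrt(3))
```

Calling `extend_mapping` repeatedly until it returns False gives the “feeds on its own output” behavior: every newly mapped node becomes an anchor for later comparisons.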

There are many heuristics that go into the “sufficiently similar” criterion, which are described in our paper. Due to the high percentage of common edges between the graphs, we were able to use a relatively pure form of the propagation algorithm; the one in my paper with Shmatikov, in contrast, was filled with lots more messy heuristics.

Those elusive seeds. Seed identification was far more challenging. In the earlier paper, we didn’t do seed identification on real graphs; we only showed it possible under certain models for error in auxiliary information. We used a “pattern-search” technique, as did the Backstrom et al. paper. It wasn’t clear whether this method would work, for reasons I won’t go into.

So we developed a new technique based on “combinatorial optimization.” At a high level, this means that instead of finding seeds one by one, we try to find them all at once! The first step is to find a set of k (we used k=20) nodes in the Kaggle graph and k nodes in our Flickr graph that are likely to correspond to each other (in some order); the next step is to find this correspondence.

The latter step is the hard one, and basically involves solving an NP-hard problem of finding a permutation that minimizes a certain weighting function. During the contest I basically stared at this page of numbers for a couple of hours, and then wrote down the mapping, which to my great relief turned out to be correct! But later we were able to show how to solve it in an automated and scalable fashion using simulated annealing, a well-known technique to approximately solve NP-hard problems for small enough problem sizes. This method is one of the main research contributions in our paper.
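To give a flavor of the annealing step, here is a generic sketch of simulated annealing over permutations. It is not the procedure from the paper: the cost function `mismatch` (total disagreement between two k-by-k tables of pairwise scores), the swap move, the cooling schedule, and all parameter values are assumptions chosen for illustration.

```python
import math
import random


def mismatch(perm, a, b):
    """Hypothetical cost: disagreement between pairwise scores when
    node i of the first graph is matched to node perm[i] of the second."""
    k = len(perm)
    return sum(abs(a[i][j] - b[perm[i]][perm[j]])
               for i in range(k) for j in range(i + 1, k))


def anneal_seed_mapping(a, b, steps=20000, t_start=1.0, t_end=0.001, seed=0):
    """Search for a low-cost permutation; returns (best_perm, best_cost).

    a and b are k-by-k symmetric tables (k >= 2) of pairwise scores
    among the k candidate seed nodes in each graph.
    """
    rng = random.Random(seed)
    k = len(a)
    perm = list(range(k))
    cost = mismatch(perm, a, b)
    best, best_cost = perm[:], cost
    for step in range(steps):
        # Geometric cooling from t_start down to t_end.
        temp = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(k), 2)
        perm[i], perm[j] = perm[j], perm[i]  # propose swapping two assignments
        new_cost = mismatch(perm, a, b)
        # Always accept improvements; accept worse moves with a
        # probability that shrinks as the temperature drops.
        if new_cost <= cost or rng.random() < math.exp((cost - new_cost) / temp):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = perm[:], cost
        else:
            perm[i], perm[j] = perm[j], perm[i]  # undo the swap
    return best, best_cost
```

The result is only an approximate minimizer; in practice one would rerun with different seeds and sanity-check the returned mapping before feeding it to propagation.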

After carrying out seed identification, and then propagation, we had de-anonymized about 65% of the edges in the contest test set and the accuracy was about 95%. The main reason we didn’t succeed on the other third of the edges was that one or both of the nodes had a very small number of contacts/friends, resulting in too little information to de-anonymize. Our task was far from over: combining de-anonymization with regular link prediction also involved nontrivial research insights, for which I will again refer you to the relevant section of the paper.

Lessons. The main question that our work raises is where this leaves us with respect to future machine-learning contests. One necessary step that would help a lot is to amend contest rules to prohibit de-anonymization and to require source code submission for human verification, but as we explain in the paper:

The loophole in this approach is the possibility of overfitting. While source-code verification would undoubtedly catch a contestant who achieved their results using de-anonymization alone, the more realistic threat is that of de-anonymization being used to bridge a small gap. In this scenario, a machine learning algorithm would be trained on the test set, the correct results having been obtained via de-anonymization. Since successful [machine learning] solutions are composites of numerous algorithms, and consequently have a huge number of parameters, it should be possible to conceal a significant amount of overfitting in this manner.

As with the privacy question, there are no easy answers. It has been over a decade since Latanya Sweeney’s work provided the first dramatic demonstration of the privacy problems with data anonymization; we still aren’t close to fixing things. I foresee a rocky road ahead for machine-learning contests as well. I expect I will have more to say about this topic on this blog; stay tuned.

[1] Amusingly, it was a whole year after that before anyone paid any attention to the privacy claims in that paper.

[2] The description is from my post on the Kaggle forum which also contains a few additional details.

The Linkability of Usernames: a Step Towards “Uber-Profiles”

Daniele Perito, Claude Castelluccia, Mohamed Ali Kaafar, and Pere Manils have a neat paper “How Unique and Traceable are Usernames?” that addresses the following question:

Suppose you find the same username on different online services, what is the probability that these usernames refer to the same physical person?

The background for this investigation is that there is tremendous commercial value in linking together every piece of online information about an individual. While the academic study of constructing “uber-profiles” by linking social profiles is new (see Large Online Social Footprints—An Emerging Threat for another example), commercial firms have long been scraping profiles, aggregating them, and selling them on the grey market. Well-known public-facing aggregators such as Spokeo mainly use public records, but online profiles are quickly becoming part of the game.

Paul Ohm has even talked of a “database of ruin.” No matter what moral view one takes of this aggregation, the technical questions are fascinating.

The research on Record Linkage could fill an encyclopedia (see here for a survey) but most of it studies traditional data types such as names and addresses. This paper is thus a nice complement.

Usernames are particularly useful for carrying out linkage across different sites for two reasons:

- They are almost always available, especially on systems with pseudonymous accounts.

- When comparing two databases of profiles, usernames are a good way to quickly find candidate matches before exploring other attributes.

The mathematical heavy-lifting that the authors do is described by the following:

… we devise an analytical model to estimate the uniqueness of a user name, which can in turn be used to assign a probability that a single username, from two different online services, refers to the same user

and

we extend this model to cases when usernames are different across many online services … experimental data shows that users tend to choose closely related usernames on different services.

For example, my Google handle is ‘randomwalker’ and my Twitter username is ‘random_walker’. Perito et al.’s model can calculate how obscure the username ‘random_walker’ is, as well as how likely it is that ‘random_walker’ is a mutation of ‘randomwalker’, and come up with a combined score representing the probability that the two accounts refer to the same person. Impressive.

The authors also present experimental results. For example, they find that with a sample of 20,000 usernames drawn from a real dataset, their algorithms can find the right match about 60% of the time with a negligible error rate (i.e., 40% of the time it doesn’t produce a match, but it almost never errs). That said, I find the main strength of the paper to be in the techniques more than the numbers.

Their models know all about the underlying natural language patterns, such as the fact that ‘random_walker’ is more meaningful than say ‘rand_omwalker’. This is achieved using what are called Markov models. I really like this class of techniques; I used Markov models many years ago in my paper on password cracking with Vitaly Shmatikov to model how people pick passwords.
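To make the idea concrete, here is a toy character-level Markov model that scores how surprising a username is. It is a sketch under my own assumptions, not the model from the paper: the first-order (bigram) structure, the add-one smoothing, the `ALPHABET`, and the tiny training list are all illustrative.

```python
import math
from collections import defaultdict

ALPHABET = "abcdefghijklmnopqrstuvwxyz0123456789_"  # assumed username charset
START, END = "^", "$"


def train(usernames):
    """Count character transitions, including start and end markers."""
    counts = defaultdict(lambda: defaultdict(int))
    for name in usernames:
        chars = [START] + list(name) + [END]
        for prev, cur in zip(chars, chars[1:]):
            counts[prev][cur] += 1
    return counts


def surprisal_bits(name, counts):
    """Negative log2-probability of the name; higher means more obscure."""
    vocab = len(ALPHABET) + 1  # every character plus the end marker
    bits = 0.0
    chars = [START] + list(name) + [END]
    for prev, cur in zip(chars, chars[1:]):
        row = counts.get(prev, {})
        seen = row.get(cur, 0)
        total = sum(row.values())
        # Add-one smoothing so unseen transitions keep a nonzero probability.
        bits -= math.log2((seen + 1) / (total + vocab))
    return bits


model = train(["random", "walker", "random_walk", "sky_walker"])
natural = surprisal_bits("random_walker", model)
mangled = surprisal_bits("rand_omwalker", model)
```

On this toy corpus `natural` comes out lower than `mangled`, because the transitions d→o, m→_ and _→w were seen in training while d→_, _→o and m→w were not. A real model would be trained on a large username corpus and would likely use longer contexts.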

The setting studied by Perito et al. is when two or more offline databases of usernames are available. Another question worth considering is determining the identity of a person behind a username via automated web searches. See my post on de-anonymizing Lending Club data for an empirical analysis of this.

There is a lot to be said about the psychology behind username choice. Ben Gross’s dissertation is a fascinating look at the choice of identifiers for self-representation. I myself am very attached to ‘randomwalker’; I’m not sure why that is.

A philosophical question related to this research is whether it is better to pick a unique username or a common one. The good thing about a unique username is that you stand out from the crowd. The bad thing about a unique username is that you stand out from the crowd. The question gets even more interesting (and consequential) if you’re balancing Googlability and anonymity in the context of naming your child, but that’s a topic for another day.

“Do Not Track” Explained

While the debate over online behavioral advertising and tracking has been going on for several years, it has recently intensified due to media coverage — for example, the Wall Street Journal What They Know series — and congressional and senate attention. The problems are clear; what can be done? Since purely technological solutions don’t seem to exist, it is time to consider legislative remedies.

One of the simplest and potentially most effective proposals is Do Not Track (DNT) which would give users a way to opt out of behavioral tracking universally. It is a way to move past the arms race between tracking technologies and defense mechanisms, focusing on the actions of the trackers rather than their tools. A variety of consumer groups and civil liberties organizations have expressed support for Do Not Track; Jon Leibowitz, chairman of the Federal Trade Commission, has also indicated that DNT is on the agency’s radar.

Not a list. While Do Not Track is named in analogy to the Do Not Call registry, and the two are similar in spirit, they are very different in implementation. Early DNT proposals envisaged a registry of users, or a registry of tracking domains; both are needlessly complicated.

The user-registry approach has various shortcomings, at least one of which is fatal: there are no universally recognized user identifiers in use on the Web. Tracking is based on ad-hoc identification mechanisms, including cookies, that the ad networks deploy; by mandating a global, robust identifier, a user registry would in one sense exacerbate the very problem it attempts to solve. It also allows for little flexibility in allowing the user to configure DNT on a site-by-site basis.

The domain-registry approach involves mandating ad networks to register domains used for tracking with a central authority. Users would have the ability to download this list of domains and configure their browser to block them. This strategy has multiple problems, including: (i) the centralization required makes it fickle, (ii) it is not clear how to block tracking domains without blocking ads altogether, since displaying an ad requires contacting the server that hosts it, and (iii) it requires a level of consumer vigilance that is unreasonable to expect — for example, making sure that the domain list is kept up-to-date by every piece of installed web-enabled software.

The header approach. Today, consensus has been emerging around a far simpler DNT mechanism: have the browser signal to websites the user’s wish to opt out of tracking, specifically, via an HTTP header, such as “X-Do-Not-Track”. The header is sent out with every web request — this includes the page the user wishes to view, as well as each of the objects and scripts embedded within the page, including ads and trackers. It is trivial to implement in the web browser — indeed, there is already a Firefox add-on that implements such a header.
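As a rough illustration of how little machinery this needs, the sketch below attaches the header to outgoing requests and checks for it on the receiving side, using only Python’s standard library. The header value "1", the helper names, and the example URLs are assumptions; the proposal as described here only fixes the idea of a header, not its exact syntax.

```python
from urllib.request import Request

DNT_HEADER = "X-Do-Not-Track"  # name from the text; the value "1" is an assumption


def with_dnt(url):
    """Build a request carrying the opt-out header (nothing is sent here)."""
    return Request(url, headers={DNT_HEADER: "1"})


def opted_out(headers):
    """Server side: True if the request headers signal an opt-out.

    Header names are compared case-insensitively, as HTTP requires.
    """
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return normalized.get(DNT_HEADER.lower()) == "1"


# The same header goes on the page itself and on every embedded resource.
page = with_dnt("http://example.com/article")
ad = with_dnt("http://ads.example.net/banner.js")
```

A compliant ad server would call something like `opted_out` on each incoming request and skip its tracking logic when it returns True.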

The header-based approach also has the advantage of requiring no centralization or persistence. But in order for it to be meaningful, advertisers will have to respect the user’s preference not to be tracked. How would this be enforced? There is a spectrum of possibilities, ranging from self-regulation via the Network Advertising Initiative, to supervised self-regulation or “co-regulation,” to direct regulation.

At the very least, by standardizing the mechanism and meaning of opt-out, the DNT header promises a greatly simplified way for users to opt out compared to the current cookie mechanism. Opt-out cookies are not robust, they are not supported by all ad networks, and are interpreted variously by those that do support them (no tracking vs. no behavioral advertising). The DNT header avoids these limitations and is also future-proof, in that a newly emergent ad network requires no new user action.

In the rest of this article, I will discuss the technical aspects of the header-based Do Not Track proposal. I will discuss four issues: the danger of a tiered web, how to define tracking, detecting violations, and finally user-empowerment tools. Throughout this discussion I will make a conceptual distinction between content providers or publishers (2nd party) and ad networks (3rd party).

Tiered web. Harlan Yu has raised a concern that DNT will lead to a tiered web in which sites will require users to disable DNT to access certain features or content. This type of restriction, if widespread, could substantially undermine the effectiveness of DNT.

There are two questions to address here: how likely is it that DNT will lead to a tiered web, and what, if anything, should be done to prevent it. The latter is a policy question — should DNT regulation prevent sites from tiering service — so I will restrict myself to the former.

Examining ad blocking allows us to predict how publishers, whether acting by themselves or due to pressure from advertisers, might react to DNT. From the user’s perspective, assuming DNT is implemented as a browser plug-in, ad blocking and DNT would be equivalent to install and, as necessary, disable for certain sites. And from the site’s perspective, ad blocking would result in a far greater decline in revenue than merely preventing behavioral ads. We should therefore expect that DNT will be at least as well tolerated by websites as ad blocking.

This is encouraging, since there are very few mainstream sites today that refuse to serve content to visitors with ad blocking enabled. Ad blocking is quite popular (indeed, the most popular extensions for both Firefox and Chrome are ad blockers). A few sites have experimented with tiering for ad-blocking users, but rescinded it soon after due to user backlash. Public perception is another factor that is likely to skew things even further in favor of DNT being well-tolerated: access to content in exchange for watching ads sounds like a much more palatable bargain than access in exchange for giving up privacy.

One might nonetheless speculate what a tiered web might look like if the ad industry, for whatever reason, decided to take a hard stance against DNT. It is once again easy to look to existing technologies, since we already have a tiered web: logged-in vs anonymous browsing. To reiterate, I do not believe that disabling DNT as a requirement for service will become anywhere near as prevalent as logging in as a requirement for service. I bring up login only to make the comforting observation that there seems to be a healthy equilibrium between sites that require login always, some of the time, or never.

Defining tracking. It is beyond the scope of this article to give a complete definition of tracking. Any viable definition will necessarily be complex and comprise both technological and policy components. Eliminating loopholes and at the same time avoiding collateral damage — for example, to web analytics or click-fraud detection — will be a tricky proposition. What I will do instead is bring up a list of questions that will need to be addressed by any such definition:

- How are 2nd parties and 3rd parties delineated? Does DNT affect 2nd-party data collection in any manner, or only 3rd parties?

- Are only specific uses of tracking (primarily, targeted advertising) covered, or is all cross-site tracking covered by default, save possibly for specific exceptions?

- Under use-cases covered (i.e., prohibited) under DNT, can 3rd parties collect any individual data at all or should no data be collected? What about aggregate statistical data?

- If individual data can be collected, what categories? How long can it be retained, and for what purposes can it be used?

Detecting violations. The majority of ad networks will likely have an incentive to comply voluntarily with DNT. Nonetheless, it would be useful to build technological tools to detect tracking or behavioral advertising carried out in violation of DNT. It is important to note that since some types of tracking might be permitted by DNT, the tools in question are merely aids to determine when a further investigation is warranted.

There are a variety of passive (“fingerprinting”) and active (“tagging”) techniques to track users. Tagging is trivially detectable, since it requires modifying the state of the browser. As for fingerprinting, everything except for IP address and the user-agent string requires extra API calls and network activity that is in principle detectable. In summary, some crude tracking methods might be able to pass under the radar, while the finer grained and more reliable methods are detectable.

Detection of impermissible behavioral advertising is significantly easier. Intuitively, two users with DNT enabled should see roughly the same distribution of advertisements on the same web page, no matter how different their browsing history. In a single page view, there could be differences due to fluctuating inventories, A/B testing, and randomness, but in the aggregate, two DNT users should see the same ads. The challenge would be in automating as much of this testing process as possible.
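One hypothetical way to automate the comparison is to log the ads shown to two DNT-enabled test profiles over many views of the same page and measure how far apart the two empirical distributions are. The sketch below uses total variation distance; the choice of metric and the idea of identifying ads by a string ID are assumptions, and any cutoff for “too different” would have to be calibrated against the natural noise mentioned above.

```python
from collections import Counter


def total_variation(ads_a, ads_b):
    """Total variation distance between two non-empty lists of ad IDs.

    0.0 means identical empirical distributions; 1.0 means no overlap.
    """
    freq_a, freq_b = Counter(ads_a), Counter(ads_b)
    n_a, n_b = len(ads_a), len(ads_b)
    return 0.5 * sum(abs(freq_a[ad] / n_a - freq_b[ad] / n_b)
                     for ad in set(freq_a) | set(freq_b))


# Ads observed by two DNT profiles with very different browsing histories.
profile_1 = ["shoes", "shoes", "cars", "cars"]
profile_2 = ["shoes", "cars", "cars", "cars"]
distance = total_variation(profile_1, profile_2)
```

A large distance that persists across many page views would not prove a violation, but it would be a reasonable trigger for the further investigation described earlier.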

User empowerment technologies. As noted earlier, there is already a Firefox add-on that implements a DNT HTTP header. It should be fairly straightforward to create one for each of the other major browsers. If for some reason this were not possible for a specific browser, an HTTP proxy (for instance, based on privoxy) is another viable solution, and it is independent of the browser.

A useful feature for the add-ons would be the ability to enable/disable DNT on a site-by-site basis. This capability could be very powerful, with the caveat that the user-interface needs to be carefully designed to avoid usability problems. The user could choose to allow all trackers on a given 2nd party domain, or allow tracking by a specific 3rd party on all domains, or some combination of these. One might even imagine lists of block/allow rules similar to the Adblock Plus filter lists, reflecting commonly held perceptions of trust.
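The allow rules described above could be modeled roughly as follows. This is a sketch of one possible rule structure, not the design of any existing add-on; the class name, field names, and example domains are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DntRules:
    """User-configured exceptions; DNT is sent unless a rule allows tracking."""
    allowed_sites: set = field(default_factory=set)     # all trackers allowed on these 2nd-party domains
    allowed_trackers: set = field(default_factory=set)  # these 3rd parties allowed on every domain
    allowed_pairs: set = field(default_factory=set)     # specific (site, tracker) combinations

    def send_dnt(self, site, tracker):
        """Return True if the opt-out header should accompany this request."""
        if site in self.allowed_sites:
            return False
        if tracker in self.allowed_trackers:
            return False
        if (site, tracker) in self.allowed_pairs:
            return False
        return True


rules = DntRules(
    allowed_sites={"news.example.com"},
    allowed_trackers={"metrics.example.net"},
    allowed_pairs={("shop.example.org", "ads.example.net")},
)
```

Defaulting to sending the header keeps the opt-out in force for any site or tracker the user has not explicitly thought about, which matters for the usability caveat above.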

To prevent fingerprinting, web browsers should attempt to minimize the amount of information leaked by web requests and APIs. There are 3 contexts in which this could be implemented: by default, as part of the existing private browsing mode, or in a new “anonymous browsing mode.” While minimizing information leakage benefits all users, it helps DNT users in particular by making it harder to implement silent tracking mechanisms. Both Mozilla and reportedly the Chrome team are already making serious efforts in this direction, and I would encourage other browser vendors to do the same.

A final avenue for user empowerment that I want to highlight is the possibility of achieving some form of browser history-based targeting without tracking. This gives me an opportunity to plug Adnostic, a Stanford-NYU collaborative effort which was developed with just this motivation. Our whitepaper describes the design as well as a prototype implementation.

This article is the result of several conversations with Jonathan Mayer and Lee Tien, as well as discussions with Peter Eckersley, Sid Stamm, John Mitchell, Dan Boneh and others. Elie Bursztein also deserves thanks for originally bringing DNT to my attention. Any errors, omissions and opinions are my own.

Women in Tech: How Anonymity Contributes to the Problem

Like Michael Arrington, I too have sat on the sidelines of the debate on women in tech. Unlike Michael Arrington, I did so because nobody asked for my opinion. There is, however, one aspect of the debate that I’m qualified to comment on.

The central issue seems to be whether the low participation rate of women in technology is due to a hostile environment in the tech industry (e.g., sexism, overt or covert) or due to external factors, whether genetic or social, that influence women to pick career paths other than technology without even giving it a shot.

Arrington thinks it’s the latter, and makes a strong case for his position. In response, many have pointed out various behaviors common in the tech industry that make it unappealing to women. Jessica B. Hamrick talks about rampant elitism which affects women disproportionately. What I’m more interested in today is Michelle Greer’s account of being viciously attacked for a relatively innocuous comment on Arrington’s post.

Let me come right out and say it: while I am a defender of the right to anonymous speech, I believe it has no place whatsoever in the vast majority of discussion forums. The reason is simple: there is something about anonymity that completely dismantles our evolved social norms and civility and makes us behave like apes. Not all of us, to be sure, but it only takes a few to ruin it for everyone. Or to put it in plainer terms:

There is no doubt that sexist comments online — the vast majority of them anonymous — contribute hugely to the problem of tech being a hostile environment for women. While there are rude comments directed at everyone, just look around if you need convincing that the ones that attack someone specifically for being female tend to be much more depraved. It is also true that rude behavior online is not limited to tech fields, but it creates more of a barrier there because online participation is essential for being relevant.

Here’s my suggestion to everyone who’d like to do something to make tech less hostile to women: perhaps the best return on your time that you can get is by making anonymous, unmoderated comments a thing of the past. Abolish it on your own sites, and write to other site admins and educate them about the importance of this issue. And when you see an uncivil comment, either educate or ignore the person, but try not to get enraged — you’d be feeding the troll.

Thanks to Ann Kilzer for reviewing a draft.

Yet Another Identity Stealing Bug. Will Creeping Normalcy be the Result?

Elie Bursztein points me to a “Cross Site URL Hijacking” attack which, among other things, allows a website to identify a visitor instantly (if they are using Firefox) by finding their Google and possibly Facebook IDs. Here is a live demo and here’s a paper.

For the security geeks, the attack works by exploiting a Firefox bug that allows a page in the attacker domain to infer URLs of pages in the target domain. If a page like target.com/home redirects to target.com/?user=[username] (which is quite common), the attacker can learn the username by requesting the page target.com/home in a script tag.

Let us put this attack in context. Stealing the identity of a web visitor should be familiar to readers of this blog. I’ve recently written about doing this via history stealing, then a bug in Google spreadsheets, and now we have this. While the spreadsheets bug was fixed, the history stealing vulnerability remains in most browsers. Will new bugs be found faster than existing ones get fixed? The answer is probably yes.

Something that is of much more concern in the long run is Facebook’s instant personalization, which is basically like identity stealing, except it is a feature rather than a bug. Currently Facebook identities are available without user consent to only 3 partners (Yelp, Pandora and docs.com) but there will be inevitable competitive pressures both for Facebook to open this up to more websites and for other identity providers to offer a similar service.

Legitimate methods and hacks based on bugs are not entirely distinct. Two XSS attacks on yelp.com were found in quick succession, either of which could have been exploited by a third (fourth?) party for identity stealing. Instant personalization (and similar attempts at an “identity layer”) greatly increases the chance of bugs that leak your identity to every website, authorized or not.

As identity-stealing bugs as well as identity-sharing features proliferate, the result is going to be creeping normalcy — users will get slowly inured to the idea that any website they visit might have their identity. And that will be a profound change for the way the web works. Of course, savvy users will know how to turn off the various tracking mechanisms, but most people will be left in the lurch.

We are still at the early stages of this shift. It is clear that it will have both good and ill effects. For example, people are much more civil when interacting under their real-life identity. For this reason, there is quite a clamor for identity. For instance, see News Sites Rethink Anonymous Online Comments and The Forces Align Against Anonymity. But like every change, this one is going to be hard to get used to.

Ubercookies Part 2: History Stealing meets the Social Web

Recap. In the previous article I introduced ubercookies — techniques that websites can use to de-anonymize visitors. I discussed a recent paper that shows how to use history stealing along with social network group membership information to find the visitor’s identity, and I promised a stronger variant of the attack.

The observation that led me to the attack I’m going to describe is simple: social networking isn’t just about social networks — the whole web has gone social. It’s a view that you quickly internalize if you spend any time hanging out with Silicon Valley web entrepreneurs :-)

Let’s break the underlying principle of the identity-stealing attack down to its essence:

A user leaves a footprint whenever their interaction with a specific web page is recorded publicly.

De-anonymization happens when the attacker can tie these footprints together into “trails” that can then be correlated with the user’s browser history. Efficiently querying the history to identify multiple points on the trail is a challenging problem to solve, but in principle de-anonymization is possible as long as the user’s actions on different web pages happen under the same identity.

Footprints can be tied together into trails as long as all the interactions happen under the same identity. There is no need for the interactions to be on the same website.
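To make the footprint and trail idea concrete, here is a minimal sketch in Python. Everything in it is illustrative: the identities, the URLs, and the function names `build_trails` and `rank_candidates` are made up for this example, and `visited` stands for the set of URLs that history queries reported as visited (a real attacker cannot read the history directly and has to probe one URL at a time).

```python
from collections import defaultdict

def build_trails(footprints):
    """Group public (identity, url) footprints into one trail per identity.

    The footprints may come from any number of websites; only the shared
    identity matters.
    """
    trails = defaultdict(set)
    for identity, url in footprints:
        trails[identity].add(url)
    return dict(trails)

def rank_candidates(trails, visited):
    """Rank identities by how many URLs on their trail were found in the history.

    Identities with no overlap at all are dropped.
    """
    scores = {who: len(urls & visited) for who, urls in trails.items()}
    matching = [who for who, score in scores.items() if score > 0]
    return sorted(matching, key=lambda who: (-scores[who], who))

# Hypothetical footprints collected from three unrelated sites.
footprints = [
    ("alice", "https://blog.example/post-1"),
    ("alice", "https://news.example/story-9"),
    ("bob", "https://blog.example/post-1"),
    ("carol", "https://video.example/clip-3"),
]
# Hypothetical result of probing the visitor's history.
visited = {"https://blog.example/post-1", "https://news.example/story-9"}

ranking = rank_candidates(build_trails(footprints), visited)
print(ranking)
```

A single matching footprint leaves two candidates here; the second point on the trail is what separates them.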

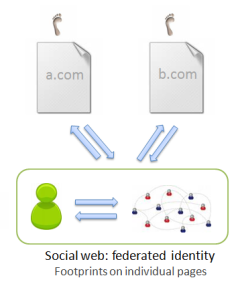

There are two major ways in which you can interact with arbitrary websites under a unified identity, both of which are defining principles of the social web. The first is federated identity, which means you can use the same identity provider wherever you go. This is achieved through OpenID and similar mechanisms like Facebook Connect. The second is social sharing: whenever you find something interesting anywhere on the web, you feed it back to your social network.

Now let’s examine the different types of interactions in more detail.

A taxonomy of interaction on the social web.

0. The pre-social web had no social networks and no delegated identity mechanism (except for the failed attempt by Microsoft called Passport). Users created a new identity on each website and authenticated to each site separately with a site-specific username and password. The footprints on different sites could not be tied together; for practical purposes there were no footprints.

1. Social networks: affiliation. In social networks, users interact with social objects and leave footprints when the actions are public. The key type of interaction that is useful for de-anonymization is the expression of affiliation: this refers to not just the group memberships studied in the recent Wondracek et al. paper, but also includes

- memberships of fan pages on Facebook

- “interests” on Livejournal

- follow relationships and plain old friend relationships on Twitter and other public social networks

- subscriptions to Youtube channels

and so on.

All of these interactions, albeit very different from the user perspective, are fundamentally the same concept:

- you “add yourself” to or affiliate yourself with some object on a social network

- this action can be publicly observed

- you almost certainly visited a URL that identifies the object before adding it.

2. The social web: sharing. When you find a page you like — any page at all — you can import it or “share” it to your social stream, on Facebook, Twitter, Google Buzz, or a social bookmarking site like Delicious. The URL of the page is almost certainly in your history, and as long as your social stream is public, your interaction was recorded publicly.

3. The social web: federated identity. When you’re reading a blog post or article on the social web, you can typically comment on it, “like” it, favorite it, rate it, etc. You do all this under your Facebook, Google or other unified identity. These actions are often public and when they are, your footprint is left on the page.

A taxonomy of attacks

The three types of social interactions above give rise to a neat taxonomy of attacks. They involve progressively easier backend processing and progressively more sophisticated history search techniques on the front end. But the execution time on the front-end doesn’t increase, so it is a net win. Here’s a table:

| Type of interaction | Backend processing | Type of history URL | Location of footprint |
| --- | --- | --- | --- |
| Affiliation | Crawling of social network | Object in a social network | In the social network |
| Sharing | Syndication of social stream(s) from social network | Any page | In the social network |
| Federated identity | None; optional crawling | Any page | On the page |

1. Better use of affiliation information. The Wondracek et al. paper makes use of only group membership. One natural reason to choose groups is that there are many groups that are large, with thousands of members, so it gives us a reasonably high chance that by throwing darts in the browser history we will actually hit a few groups that the user has visited. On the other hand, if we try to use the Facebook friend list, for example, hoping to find one of the user’s friends by random chance, it probably won’t work because most users have only a few hundred friends.

But wait: many Twitter users have thousands or even millions of followers. These are known as “hubs” in network theory. Clearly, the attack will work for any kind of hubs that have predictable URLs, and users on Twitter have even more predictable URLs (twitter.com/username) than groups on various networks. The attack will also work using Youtube favorites (which show up by default on the user’s public profile or channel page) and whatever other types of affiliation we might choose to exploit, as long as there are “hubs” — nodes in the graph with high degree. Already we can see that many more websites are vulnerable than the authors envisaged.
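As a rough illustration of how an attacker might choose which URLs to probe, the sketch below picks hubs from a table of follower counts and turns them into profile URLs using the twitter.com/username pattern mentioned above. The follower counts, the `min_followers` threshold, and the function name `pick_hub_urls` are assumptions made for the example, not values from the paper or from my experiments.

```python
def pick_hub_urls(follower_counts, min_followers=1000, limit=100):
    """Return profile URLs of the highest-degree accounts, largest first.

    follower_counts maps a username to its number of followers.
    min_followers and limit are arbitrary illustrative cut-offs.
    """
    hubs = [
        (count, name)
        for name, count in follower_counts.items()
        if count >= min_followers
    ]
    # Largest hubs first; ties broken alphabetically for a stable order.
    hubs.sort(key=lambda pair: (-pair[0], pair[1]))
    return [f"https://twitter.com/{name}" for _, name in hubs[:limit]]

# Hypothetical accounts and follower counts.
follower_counts = {"smallfry": 300, "popular": 5000, "celebrity": 2000000}
hub_urls = pick_hub_urls(follower_counts)
print(hub_urls)
```

Each hub the visitor turns out to have viewed narrows the candidate set to that hub's followers, in the same way group membership does in the original attack.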

2. Syndicating the social stream: my Delicious experiment.

The interesting thing about the social stream is that you can syndicate the stream of interactions, rather than crawling. The reasons why syndication is much easier than crawling are more practical than theoretical. First, syndicated data is intended to be machine readable, and is therefore smaller as well as easier to parse than scraped web pages. Second, and more importantly, you might be able to get a feed of the entire site-wide activity instead of syndicating each user’s activity stream separately. Delicious allows global syndication; Twitter plans to open this “firehose” feature to all developers soon.

Another advantage of the social stream is that everything is timestamped, so you can limit yourself to recent interactions, which are more likely to be in the user’s history.

Using the delicious.com dataset made available by DAI-labor (a log of all bookmarking activity on delicious.com over several years), I did a simulated experiment using 3 months’ worth of data: assuming that users keep their history around for 3 months and do in fact visit every link they post on delicious, how many users would a hypothetical history stealing attack be able to identify? I had a pretty good success rate: about 60% of the users who had shared at least 2 links in the 3-month period, or about 300,000 users. This takes at most 4000-5000 JavaScript history queries.
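For readers who want to try a simulation of this kind, here is a toy version in Python. It is not the code I ran, and it simplifies heavily: it assumes the visitor's history consists of exactly the links they shared, it counts a user as identified only when no other user matches the history equally well, and it ignores the query budget entirely. The data and the function name `identifiable_fraction` are made up.

```python
def identifiable_fraction(shares, min_links=2):
    """Fraction of eligible users who are the unique best match for their own links.

    shares maps a user to the set of URLs they bookmarked in the time window.
    Only users with at least min_links bookmarks are considered.
    """
    eligible = [user for user, links in shares.items() if len(links) >= min_links]
    if not eligible:
        return 0.0
    identified = 0
    for user in eligible:
        history = shares[user]  # simplifying assumption: history == shared links
        best = len(history)
        # A rival is any other user whose bookmarks cover the whole history.
        has_rival = any(
            other != user and len(links & history) == best
            for other, links in shares.items()
        )
        if not has_rival:
            identified += 1
    return identified / len(eligible)

# Hypothetical bookmarking data.
shares = {
    "u1": {"a", "b"},
    "u2": {"a", "b", "c"},
    "u3": {"d", "e"},
    "u4": {"a"},
}
fraction = identifiable_fraction(shares)
print(fraction)
```

In this toy data u1 cannot be told apart from u2, whose bookmarks include everything u1 shared, while u2 and u3 are unique. The quadratic loop is fine for a handful of users but would need an inverted index from URL to users at the scale of the real dataset.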

Needless to say, once Twitter opens up its firehose, Twitter users (who are far more numerous than delicious users) would also be susceptible to the same technique.

This attack cannot be fixed via server-side URL randomization. It can also be made to work using Facebook, Google Buzz, and other sharing platforms, although the backend processing required won’t be as trivial (but probably no harder than in the original attack).

3. A somewhat random walk through the history park.

And now for an approach that potentially requires no backend data collection, although it is speculative and I can’t guess what the success rate would be. The attack proceeds in several steps:

- Identify the user’s interests by testing if they’ve visited various popular topic-specific sites. Pick one of the user’s favorite topics. Incidentally, a commenter on my previous post notes he is building exactly this capability using topic pages on Wikipedia, also with the goal of de-anonymization!

- Grab a list of the top blogs on the topic you picked from one of the blog directories. Query the history to see which of these blogs the user reads frequently. It is even possible to estimate the level of interest in a blog by looking at the fraction of the top/recent posts from that blog that the user has visited. Pick a blog that the user seems to visit regularly.

- Look for evidence of the user leaving comments on posts. For example, on Blogger, the comment page for a post has the URL http://www.blogger.com/comment.g?blogID=<blogid>&postID=<postid>.

- Once you find a couple of posts where it looks like the user made a comment, scrape the list of people who commented on each and find the intersection. (Even a single comment might suffice; as long as you have a list of candidates, you can easily verify if it’s one of them by testing user-specific URLs. More below.)

- Depending on the blogging platform, you might even be able to deduce that the user responded (or intended to respond) to a specific comment. For example, on WordPress you have the pattern http://<blogname>.wordpress.com/<postname>/?replytocom=<commentid>#respond. If you get lucky and find one of those patterns, that makes things even easier.

If at first you don’t succeed, pick a different blog and repeat.
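The bookkeeping behind these steps can be sketched in a few lines of Python. This is only an illustration of the idea, with made-up data: the helper names are hypothetical, `visited` stands for whatever set of URLs the history queries reported as visited, and the commenter lists would in practice have to be scraped from the posts. The Blogger URL pattern is the one quoted in the list above.

```python
def blogger_comment_url(blog_id, post_id):
    """Build the Blogger comment-page URL for a post, to be tested against the history."""
    return f"http://www.blogger.com/comment.g?blogID={blog_id}&postID={post_id}"

def interest_level(post_urls, visited):
    """Estimate interest in a blog as the fraction of its recent posts that were visited."""
    if not post_urls:
        return 0.0
    return sum(url in visited for url in post_urls) / len(post_urls)

def common_commenters(commenters_by_post):
    """Intersect the commenter lists of the posts the user appears to have commented on."""
    lists = [set(names) for names in commenters_by_post.values()]
    return set.intersection(*lists) if lists else set()

# Hypothetical data: four recent posts, two of them found in the history.
recent_posts = ["p1", "p2", "p3", "p4"]
visited = {"p1", "p3"}
level = interest_level(recent_posts, visited)

# Hypothetical scraped commenter lists for two posts.
candidates = common_commenters({
    "post1": ["ann", "bob", "cy"],
    "post2": ["bob", "dee"],
})
print(level, candidates)
```

Whatever names survive the intersection are only candidates; each one still has to be confirmed by testing user-specific URLs.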

I suspect that the most practical method would be to use a syndicated activity stream from a social network, but also to use the heuristics presented above to more efficiently search through the history.

Epilogue: Identity.

Not only has there been a movement towards a small number of identity providers on the web, there are many aggregators out there that have sprung up in order to automatically find the connections between identities across the different identity providers, and also connect online identities to physical-world databases. As Pete Warden notes:

One of the least-understood developments of the last few years is the growth of databases of personal information linked to email addresses. Rapleaf is probably the leader in this field, but even Flickr lets companies search their API for users based on an email address.

I ran my email address through his demo script and it is quite clear that virtually all of my online identities have been linked together. This is getting to be the norm; as a consequence, once an attacker gets any kind of handle on you, they can go “identity hopping” and find out a whole lot more about you.

This is also the reason that once the attacker can make a reasonable guess at the visitor’s identity, it’s easy to verify the guess. Not only can they look for user-specific URLs in your history to confirm the guess (described in detail in the Wondracek et al. paper), but all your social streams on other sites can also be combined with your history to corroborate your identity.

Up next in the Ubercookies series: So that’s pretty bad. But it’s going to get worse before it can get better :-) In the next article, I will describe an entirely different attack strategy to get at your identity by exploiting a bug in a specific identity provider’s platform.
