Posts tagged ‘regulation’

The Master Switch and the Centralization of the Internet

One of the most important trends in the recent evolution of the Internet has been the move towards centralization and closed platforms. I’m interested in this question in the context of social networks—analyzing why no decentralized social network has yet taken off, whether one ever will, and whether a decentralized social network is important for society and freedom. With this in mind, I read Tim Wu’s ‘The Master Switch: The Rise and Fall of Information Empires,’ a powerful book that will influence policy debates for some time to come. My review follows.

‘The Master Switch’ has two parts. The former discusses the history of communications media through the twentieth century and shows evidence for “The Cycle” of open innovation → closed monopoly → disruption. The latter, shorter part is more speculative and argues that the same fate will befall the Internet, absent aggressive intervention.

The first part of the book is unequivocally excellent. It is packed with historical facts, both grand and small. Wu makes a strong case that radio, telephony, film and television have all taken much the same path.

A point that Wu drives home repeatedly is that while free speech in law is always spoken of in the context of Governmental controls, the private entities that own or control the medium of speech play a far bigger role in practice in determining how much freedom of speech society has. In the U.S., we are used to regulating Governmental barriers to speech but not private ones, and a lot of the book is about exposing the problems with this approach.

An interesting angle the author takes is to look at the motives of the key men that shaped the “information industries” of the past. This is apposite given the enormous impact on history that each of these few has had, and I felt it added a layer of understanding compared to a purely factual account.

But let’s cut to the chase—the argument about the future of the Internet. I wasn’t sure whether I agreed or disagreed until I realized Wu is making two different claims, a weak one and a strong one, and does not separate them clearly.

The weak claim is simply that an open Internet is better for society in the long run than a closed one. Open and closed here are best understood via the exemplars of Google and Apple. Wu argues this reasonably well, and in any case not much argument is needed—most of us would consider it obvious on the face of it.

The strong claim, and the one that is used to justify intervention, is that a closed Internet will have such crippling effects on innovation and such chilling effects on free speech that it is our collective duty to learn from history and do something before the dystopian future materializes. This is where I think Wu’s argument falls short.

To begin with, Wu doesn’t have a clear reason why the Internet will follow the previous technologies, except, almost literally, “we can’t be sure it won’t.” He overstates the similarities and downplays the differences.

Second, I believe Wu doesn’t fully understand technology and the Internet in some key ways. Bizarrely, he appears to believe that the Internet’s predilection for decentralization is due to our cultural values rather than technological and business realities prevalent when these systems were designed.

Finally, Wu has a tendency to see things in black and white, in terms of good and evil, which I find annoying, and more importantly, oversimplified. He quotes this sentence approvingly: “Once we replace the personal computer with a closed-platform device such as the iPad, we replace freedom, choice and the free market with oppression, censorship and monopoly.” He also says that “no one denies that the future will be decided by one of two visions,” in the context of iOS and Android. It isn’t clear why he thinks they can’t coexist the way the Mac and PC have.

Regardless of whether one buys his dystopian prognostications, Wu’s paradigm of the “separations principle” is to be taken seriously. It is far broader than even net neutrality. There appear to be two key pillars: a separation of platforms and content, and limits on corporate structures to facilitate this (mainly vertical, but also horizontal, as in the case of media conglomerates).

Interestingly, Wu wants the separations principle to be more of a societal-corporate norm than Governmental regulation. That said, he does call for more powers to the FCC, which is odd given that he is clear on the role that State actors have played in the past in enabling and condoning monopoly abuse:

Again and again in the histories I have recounted, the state has shown itself an inferior arbiter of what is good for the information industries. The federal government’s role in radio and television from the 1920s to the 1960s, for instance, was nothing short of a disgrace. In the service of chain broadcasting, it wrecked a vibrant, decentralized AM marketplace. At the behest of the ascendant radio industry, it blocked the arrival and prospects of FM radio, and then it put the brakes on television, reserving it for the NBC-CBS duopoly. Finally, from the 1950s through the 1960s, it did everything in its power to prevent cable television from challenging the primacy of the networks.

To his credit, Wu does seem to be aware of the contradiction, and appears to argue that Government agencies can learn and change. That seems like a stretch, however.

In summary, Wu deserves major kudos both for the historical treatment and for some very astute insights about the Internet. For example, in the last 2-3 years, Apple, Facebook, and Twitter have all made dramatic moves toward centralization, control and closed platforms. Wu seems to have foreseen this general trend more clearly than most techies did.[1] The book does have drawbacks, and I don’t agree that the Internet will go the way of past monopolies without intervention. It should be very interesting to see what moves Wu will make now that he will be advising the FTC.

[1] While the book was published in late 2010, I assume that Wu’s ideas are much older.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

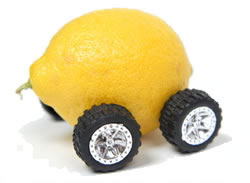

Privacy and the Market for Lemons, or How Websites Are Like Used Cars

I had a fun and engaging discussion on the “Paying With Data” panel at the South by Southwest conference; many thanks to my co-panelists Sara Marie Watson, Julia Angwin and Sam Yagan. I’d like to elaborate here on a concept that I briefly touched upon during the panel.

The market for lemons

In a groundbreaking paper 40 years ago, economist George Akerlof explained why so many used cars are lemons. The key is “asymmetric information”: the seller of a car knows more about its condition than the buyer does. This leads to “adverse selection” and a self-reinforcing downward spiral: buyers tend to assume that cars on the market have hidden problems, which brings down prices and discourages owners of good cars from trying to sell, further reinforcing the perception of bad quality.

In general, a market with asymmetric information is in danger of developing these characteristics:

1. buyers/consumers lack the ability to distinguish between high- and low-quality products;
2. sellers/service providers lose the incentive to focus on quality; and
3. the bad gradually crowds out the good, since poor-quality products are cheaper to produce.
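To make the spiral concrete, here is a toy simulation in the spirit of Akerlof’s model. The setup is a simplified textbook-style assumption, not the paper’s exact model: car quality q is uniform on [0, 1], an owner values a car at q, and a buyer values it at `buyer_premium * q` but cannot observe q, so buyers will pay only what the average car on offer is worth to them. The function name and parameters are hypothetical.

```python
def lemon_market_prices(buyer_premium=1.5, start_price=1.0, rounds=10):
    """Toy adverse-selection model (illustrative assumptions, not Akerlof's exact setup).

    Car quality q is uniform on [0, 1]. An owner values a car at q; a buyer values
    it at buyer_premium * q but cannot tell good cars from bad ones.
    Returns the market price after each round of buyers re-pricing.
    """
    prices = [start_price]
    price = start_price
    for _ in range(rounds):
        # Only owners whose car is worth at most `price` to them will sell, so the
        # cars on offer have q in [0, min(price, 1)] and average quality half of that.
        avg_quality_on_offer = min(price, 1.0) / 2
        # Buyers pay only what the average car on offer is worth to them.
        price = buyer_premium * avg_quality_on_offer
        prices.append(price)
    return prices


if __name__ == "__main__":
    for i, p in enumerate(lemon_market_prices()):
        print(f"round {i}: price {p:.3f}")
```

With `buyer_premium=1.5`, the price shrinks by a factor of 0.75 each round and heads toward zero: the best cars leave first, which lowers the average quality on offer, which lowers the price again. In this toy setup the price holds only if buyers value cars at least twice as much as sellers do (`buyer_premium >= 2`).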

Information security and privacy suffer from this problem at least as much as used cars do.

The market for security products and certification

Bruce Schneier describes how various security products, such as USB drives, have turned into a lemon market. And in a fascinating paper, Ben Edelman analyzes data from TRUSTe certifications and comes to some startling conclusions [emphasis mine]:

Widely-used online “trust” authorities issue certifications without substantial verification of recipients’ actual trustworthiness. This lax approach gives rise to adverse selection: The sites that seek and obtain trust certifications are actually less trustworthy than others. Using a new dataset on web site safety, I demonstrate that sites certified by the best-known authority, TRUSTe, are more than twice as likely to be untrustworthy as uncertified sites. This difference remains statistically and economically significant when restricted to “complex” commercial sites.

[…]

In a 2004 investigation after user complaints, TRUSTe gave Gratis Internet a clean bill of health. Yet subsequent New York Attorney General litigation uncovered Gratis’ exceptionally far-reaching privacy policy violations — selling 7.2 million users’ names, email addresses, street addresses, and phone numbers, despite a privacy policy exactly to the contrary.[…]

TRUSTe’s “Watchdog Reports” also indicate a lack of focus on enforcement. TRUSTe’s postings reveal that users continue to submit hundreds of complaints each month. But of the 3,416 complaints received since January 2003, TRUSTe concluded that not a single one required any change to any member’s operations, privacy statement, or privacy practices, nor did any complaint require any revocation or on-site audit. Other aspects of TRUSTe’s watchdog system also indicate a lack of diligence.

The market for personal data

In the realm of online privacy and data collection, the information asymmetry results from a serious lack of transparency around privacy policies. The website or service provider knows what happens to data that’s collected, but the user generally doesn’t. This arises due to several economic, architectural, cognitive and regulatory limitations/flaws:

- Each click is a transaction. As a user browses the web, she interacts with dozens of websites and performs hundreds of actions per day. It is impossible to make a privacy decision with every click, or to have a meaningful business relationship with each website and hold it accountable for its data collection practices.

- Technology is hard to understand. Companies can often get away with meaningless privacy guarantees, such as touting “anonymization” as a magic bullet or promising “military-grade security,” a nonsensical term. The complexity of private browsing mode has led to user confusion and a false sense of safety.

- Privacy policies are filled with legalese and no one reads them, which means that disclosures made therein count for nothing. Yet, courts have upheld them as enforceable, disincentivizing websites from finding ways to communicate more clearly.

Collectively, these flaws have led to a well-documented market failure—there’s an arms race to use all means possible to entice users to give up more information, as well as to collect it passively through ever-more intrusive means. Self-regulatory organizations become captured by those they are supposed to regulate, and therefore their effectiveness quickly evaporates.

TRUSTe seems to be up to some shenanigans in the online tracking space as well. As many have pointed out, the TRUSTe “Tracking Protection List” for Internet Explorer is in fact a whitelist, allowing about 4,000 domains (almost certainly from companies that have paid TRUSTe) to track the user. Worse, installing the TRUSTe list seems to override the blocking of a domain via another list!
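The override problem comes down to how conflicting rules from different lists are resolved. The sketch below is a hypothetical model, not Internet Explorer’s actual code: it assumes that an “allow” entry on any installed list beats a “block” entry on any other (the behavior described above), and it uses exact domain matching for simplicity. The function and list names are illustrative.

```python
def is_blocked(domain, installed_lists):
    """Hypothetical model of combining tracking-protection lists.

    Each list is a dict mapping a domain to "block" or "allow".
    Assumption (mirrors the behavior described in the text): an "allow" on ANY
    list takes precedence over a "block" on any other list.
    """
    verdicts = {rules.get(domain) for rules in installed_lists}
    if "allow" in verdicts:
        return False  # a single whitelist entry silently defeats every block rule
    return "block" in verdicts


# Hypothetical lists: the user's own block list, and a whitelist-style list.
my_block_list = {"tracker.example": "block"}
whitelist = {"tracker.example": "allow"}

print(is_blocked("tracker.example", [my_block_list]))             # True
print(is_blocked("tracker.example", [my_block_list, whitelist]))  # False
```

Under an “allow wins” rule, a whitelist with thousands of entries effectively acts as a veto over every other list the user installs.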

Possible solutions

The obvious response to a market with asymmetric information is to correct the information asymmetry—for used cars, it involves taking it to a mechanic, and for online privacy, it is consumer education. Indeed, the What They Know series has done just that, and has been a big reason why we’re having this conversation today.

However, I am skeptical that the market can be fixed through consumer awareness alone. Many of the factors I’ve laid out above involve fundamental cognitive limitations, and while consumers may be well-educated about the general dangers prevalent online, it does not necessarily help them make fine-grained decisions.

It is for these reasons that some sort of Government regulation of the online data-gathering ecosystem seems necessary. Regulatory capture is of course still a threat, but less so than with self-regulation. Jonathan Mayer and I point out in our FTC Comment that ad industry self-regulation of online tracking has been a failure, and argue that the FTC must step in and enforce Do Not Track.

In summary, information asymmetry occurs in many markets related to security and privacy, leading in most cases to a spiraling decline in quality of products and services from a consumer perspective. Before we can talk about solutions, we must clearly understand why the market won’t fix itself, and in this post I have shown why that’s the case.

Update. TRUSTe president Fran Maier responds in the comments.

Update 2. Chris Soghoian points me to this paper analyzing privacy economics as a lemon market, which seems highly relevant.

Thanks to Jonathan Mayer for helpful feedback.

An Academic Wanders into Washington D.C.

I was on a Do Not Track panel in Washington D.C. last week. I spent a day in the city and had many informal conversations with policy people. It was fascinating to learn from close range how various parts of the Government work. If I could sum it up in a single phrase, it would be “so many smart people, so many systemic problems.”

What follows is obviously the account of an outsider, and I’m sure there are many nuances I’ve missed. That said, an outsider’s view can sometimes provide fresh perspective. So without further ado, here are some of my observations.

A deep chasm. Techies are by and large oblivious to what goes on in D.C., and have a poor mental picture of what regulators are or aren’t involved in. For example, I attended part of a talk on antitrust concerns around the Google search algorithm, and it blew my mind to realize that something that techies think of as their playground comes under serious regulatory scrutiny. (I hear the Google antitrust issue is really big in the EU, and the US is catching up.) Equally, the policy world is quite lacking in tech expertise.

Libertarian influence. While the Libertarian Party is not mainstream in the US, libertarian think tanks and lobbying groups exercise significant influence in D.C. While that gladdens me as a libertarian, one unfortunate trait that appears to be common to all think tanks is toeing the party line at the expense of critical thinking. I’m not sure there can be a market failure so complete that libertarian groups would even consider acknowledging the need for some government intervention.

A new kind of panel. The panel I attended was very different from what I’m used to. In a scientific or technical panel, there is an underlying truth even if the participants may disagree about some things. Policy panels seem to be very different: each participant represents a group that has an entrenched position and there is no scope for actual debate or any possibility of changing one’s mind. The panel is instead a forum for the speakers to state their respective positions for the benefit of the media and the public. There is nothing wrong with this, but it took me a while to grasp.

Lobbyists. The American public is deeply concerned about the power of lobbyists. But lobbyists perform the valuable function of providing domain expertise to legislators and regulators. Of course, the problem is that they also have the role of trying to get favorable treatment for the industry groups they represent, and these roles cannot be disentangled.

The solution is to increase the power of the counterweights to lobbyists, e.g., consumer advocates and environmental groups. A loose analogy: if we’re worried about wealthy individuals getting better treatment from the judicial system, the answer is not to get rid of lawyers, but to improve the quality of public prosecutors and defenders. I don’t know if the lobbyist imbalance can ever be completely eliminated, but I think it can be drastically mitigated.

A humble suggestion. Generalizing my experiences in the tech field, I suspect that the Government lacks domain expertise in virtually every area, hence the dependence on lobbyists. If only more academics were to get involved in policy, it seems to me that it would solve both problems mentioned above — it would address the lack of expertise and it would shift the balance of advocacy in favor of consumers. (There are certainly many law scholars involved in policy, but I’m thinking more of scientists and social scientists here — those who have domain knowledge.)

To reiterate, I believe that greater involvement of academics in policy has the potential to hugely improve how government works. But how do we make that happen? I have a couple of suggestions. First, Government people seem to have a tendency to listen to whoever talks the loudest in Washington; instead, they should make an effort to seek out people with actual expertise. Second, I hope academics will take into account benefits like increased visibility and consider moonlighting in policy circles.

Thanks to Joe Calandrino for comments on a draft.

“Do Not Track” Explained

While the debate over online behavioral advertising and tracking has been going on for several years, it has recently intensified due to media coverage (for example, the Wall Street Journal’s What They Know series) and attention from both houses of Congress. The problems are clear; what can be done? Since purely technological solutions don’t seem to exist, it is time to consider legislative remedies.

One of the simplest and potentially most effective proposals is Do Not Track (DNT), which would give users a way to opt out of behavioral tracking universally. It is a way to move past the arms race between tracking technologies and defense mechanisms, focusing on the actions of the trackers rather than their tools. A variety of consumer groups and civil liberties organizations have expressed support for Do Not Track; Jon Leibowitz, chairman of the Federal Trade Commission, has also indicated that DNT is on the agency’s radar.

Not a list. While Do Not Track is named in analogy to the Do Not Call registry, and the two are similar in spirit, they are very different in implementation. Early DNT proposals envisaged a registry of users, or a registry of tracking domains; both are needlessly complicated.

The user-registry approach has various shortcomings, at least one of which is fatal: there are no universally recognized user identifiers in use on the Web. Tracking is based on ad-hoc identification mechanisms, including cookies, that the ad networks deploy; by mandating a global, robust identifier, a user registry would in one sense exacerbate the very problem it attempts to solve. It also leaves little flexibility for the user to configure DNT on a site-by-site basis.

The domain-registry approach involves requiring ad networks to register the domains they use for tracking with a central authority. Users would be able to download this list of domains and configure their browsers to block them. This strategy has multiple problems, including: (i) the centralization required makes it fragile; (ii) it is not clear how to block tracking domains without blocking ads altogether, since displaying an ad requires contacting the server that hosts it; and (iii) it requires a level of consumer vigilance that is unreasonable to expect, for example, making sure that the domain list is kept up to date by every piece of installed web-enabled software.

The header approach. Today, consensus has been emerging around a far simpler DNT mechanism: have the browser signal to websites the user’s wish to opt out of tracking, specifically via an HTTP header such as “X-Do-Not-Track”. The header is sent with every web request. This includes the page the user wishes to view, as well as each of the objects and scripts embedded within the page, including ads and trackers. It is trivial to implement in the web browser; indeed, there is already a Firefox add-on that implements such a header.
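Here is a minimal sketch of both ends of the header approach. The header name “X-Do-Not-Track” comes from the text. The value "1", the function names and the ad server’s behavior are illustrative assumptions, not any real browser’s or ad network’s code.

```python
DNT_HEADER = "X-Do-Not-Track"  # header name from the proposal; the value "1" is assumed


def outgoing_headers(base_headers, dnt_enabled):
    """Browser side: attach the opt-out signal to a request.

    The same function runs for the page itself and for every embedded
    object, script, ad and tracker the page pulls in.
    """
    headers = dict(base_headers)
    if dnt_enabled:
        headers[DNT_HEADER] = "1"
    return headers


def user_opted_out(request_headers):
    """Server side: HTTP header names are case-insensitive, so normalize first."""
    normalized = {name.lower(): value.strip() for name, value in request_headers.items()}
    return normalized.get(DNT_HEADER.lower()) == "1"


def serve_ad(request_headers, cookies):
    """Hypothetical compliant ad server: no tracking cookie when the user opts out."""
    if user_opted_out(request_headers):
        return {"set_cookie": None, "ad": "contextual"}
    user_id = cookies.get("uid", "fresh-id")  # hypothetical tracking identifier
    return {"set_cookie": ("uid", user_id), "ad": "behavioral"}


# One page view: the page and an embedded ad both carry the signal.
page = outgoing_headers({"Host": "news.example"}, dnt_enabled=True)
ad = outgoing_headers({"Host": "ads.example"}, dnt_enabled=True)
print(serve_ad(ad, cookies={"uid": "abc123"}))  # {'set_cookie': None, 'ad': 'contextual'}
```

The browser stores only a single on/off preference, and nothing is registered anywhere. Whether the server honors the signal is entirely up to the server, which is why enforcement becomes the central question.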

The header-based approach also has the advantage of requiring no centralization or persistence. But in order for it to be meaningful, advertisers will have to respect the user’s preference not to be tracked. How would this be enforced? There is a spectrum of possibilities, ranging from self-regulation via the Network Advertising Initiative, to supervised self-regulation or “co-regulation,” to direct regulation.

At the very least, by standardizing the mechanism and meaning of opt-out, the DNT header promises a much simpler way for users to opt out than the current cookie mechanism. Opt-out cookies are not robust; they are not supported by all ad networks, and they are interpreted variously by those that do (no tracking vs. no behavioral advertising). The DNT header avoids these limitations and is also future-proof, in that a newly emergent ad network requires no new user action.

In the rest of this article, I will discuss the technical aspects of the header-based Do Not Track proposal, focusing on four issues: the danger of a tiered web, how to define tracking, detecting violations, and finally user-empowerment tools. Throughout this discussion I will make a conceptual distinction between content providers or publishers (2nd party) and ad networks (3rd party).

Tiered web. Harlan Yu has raised a concern that DNT will lead to a tiered web in which sites will require users to disable DNT to access certain features or content. This type of restriction, if widespread, could substantially undermine the effectiveness of DNT.

There are two questions to address here: how likely is it that DNT will lead to a tiered web, and what, if anything, should be done to prevent it. The latter is a policy question — should DNT regulation prevent sites from tiering service — so I will restrict myself to the former.

Examining ad blocking allows us to predict how publishers, whether acting by themselves or under pressure from advertisers, might react to DNT. From the user’s perspective, assuming DNT is implemented as a browser plug-in, ad blocking and DNT would be equally easy to install and, as necessary, to disable for certain sites. And from the site’s perspective, ad blocking would result in a far greater loss of revenue than merely preventing behavioral ads. We should therefore expect websites to tolerate DNT at least as well as they tolerate ad blocking.

This is encouraging, since very few mainstream sites today refuse to serve content to visitors with ad blocking enabled. Ad blocking is quite popular (indeed, the most popular extensions for both Firefox and Chrome are ad blockers). A few sites have experimented with tiering for ad-blocking users, but soon rescinded the restriction due to user backlash. Public perception is another factor that is likely to skew things even further in favor of DNT being well tolerated: access to content in exchange for watching ads sounds like a much more palatable bargain than access in exchange for giving up privacy.

One might nonetheless speculate what a tiered web might look like if the ad industry, for whatever reason, decided to take a hard stance against DNT. Once again it is easy to look to existing technologies, since we already have a tiered web: logged-in vs. anonymous browsing. To reiterate, I do not believe that disabling DNT as a requirement for service will become anywhere near as prevalent as logging in as a requirement for service. I bring up login only to make the comforting observation that there seems to be a healthy equilibrium among sites that require login always, some of the time, or never.

Defining tracking. It is beyond the scope of this article to give a complete definition of tracking. Any viable definition will necessarily be complex and comprise both technological and policy components. Eliminating loopholes and at the same time avoiding collateral damage — for example, to web analytics or click-fraud detection — will be a tricky proposition. What I will do instead is bring up a list of questions that will need to be addressed by any such definition:

- How are 2nd parties and 3rd parties delineated? Does DNT affect 2nd-party data collection in any manner, or only 3rd parties?

- Are only specific uses of tracking (primarily, targeted advertising) covered, or is all cross-site tracking covered by default, save possibly for specific exceptions?

- Under use-cases covered (i.e., prohibited) under DNT, can 3rd parties collect any individual data at all or should no data be collected? What about aggregate statistical data?

- If individual data can be collected, what categories? How long can it be retained, and for what purposes can it be used?

Detecting violations. The majority of ad networks will likely have an incentive to comply voluntarily with DNT. Nonetheless, it would be useful to build technological tools to detect tracking or behavioral advertising carried out in violation of DNT. It is important to note that since some types of tracking might be permitted by DNT, the tools in question are merely aids to determine when a further investigation is warranted.

There are a variety of passive (“fingerprinting”) and active (“tagging”) techniques to track users. Tagging is trivially detectable, since it requires modifying the state of the browser. As for fingerprinting, everything except for IP address and the user-agent string requires extra API calls and network activity that is in principle detectable. In summary, some crude tracking methods might be able to pass under the radar, while the finer grained and more reliable methods are detectable.
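Because tagging has to write something into the browser, a crude detector only needs to compare the browser’s stored state before and after a page load. The snapshot format below (each domain mapped to the set of stored item names, such as cookie names or localStorage keys) is a hypothetical simplification, and a real tool would have to capture this state from the browser itself. As the text notes, a hit is a reason to investigate further, not proof of a violation.

```python
def third_party_state_writes(before, after, first_party):
    """Flag third-party domains that stored new state during a page load.

    before/after: dict mapping domain -> set of stored item names
    (cookie names, localStorage keys, ...), a hypothetical snapshot format.
    Returns {domain: set_of_new_items} for every domain other than first_party.
    """
    flagged = {}
    for domain, items in after.items():
        if domain == first_party:
            continue  # the site the user is visiting is judged separately
        added = items - before.get(domain, set())
        if added:
            flagged[domain] = added
    return flagged


before = {"news.example": {"session"}}
after = {"news.example": {"session", "prefs"}, "tracker.example": {"uid"}}
print(third_party_state_writes(before, after, "news.example"))  # {'tracker.example': {'uid'}}
```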

Detection of impermissible behavioral advertising is significantly easier. Intuitively, two users with DNT enabled should see roughly the same distribution of advertisements on the same web page, no matter how different their browsing history. In a single page view, there could be differences due to fluctuating inventories, A/B testing, and randomness, but in the aggregate, two DNT users should see the same ads. The challenge would be in automating as much of this testing process as possible.
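One way to automate the comparison is to load the same page many times in two DNT-enabled browser profiles with deliberately different browsing histories, then measure how far apart the two ad distributions are. The sketch below uses total variation distance (half the sum of absolute differences in probabilities). The 0.2 threshold and the observations are arbitrary placeholders. A real test would need a proper significance check to separate targeting from inventory churn and A/B tests.

```python
from collections import Counter


def ad_distribution(impressions):
    """Turn a list of observed ad IDs into empirical probabilities."""
    counts = Counter(impressions)
    total = sum(counts.values())
    return {ad: n / total for ad, n in counts.items()}


def total_variation(p, q):
    """Total variation distance: 0 for identical distributions, 1 for disjoint ones."""
    ads = set(p) | set(q)
    return 0.5 * sum(abs(p.get(ad, 0.0) - q.get(ad, 0.0)) for ad in ads)


def worth_investigating(impressions_a, impressions_b, threshold=0.2):
    """Flag the page if two DNT profiles saw noticeably different ads (threshold is arbitrary)."""
    distance = total_variation(ad_distribution(impressions_a), ad_distribution(impressions_b))
    return distance > threshold


# Hypothetical observations from 100 loads of the same page per profile.
profile_a = ["shoes"] * 50 + ["cars"] * 50
profile_b = ["shoes"] * 48 + ["cars"] * 52   # normal fluctuation
profile_c = ["golf"] * 90 + ["cars"] * 10    # suspiciously different
print(worth_investigating(profile_a, profile_b))  # False
print(worth_investigating(profile_a, profile_c))  # True
```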

User empowerment technologies. As noted earlier, there is already a Firefox add-on that implements a DNT HTTP header. It should be fairly straightforward to create one for each of the other major browsers. If for some reason this were not possible for a specific browser, an HTTP proxy (for instance, based on privoxy) is another viable solution, and it is independent of the browser.

A useful feature for the add-ons would be the ability to enable or disable DNT on a site-by-site basis. This capability could be very powerful, with the caveat that the user interface needs to be carefully designed to avoid usability problems. The user could choose to allow all trackers on a given 2nd-party domain, allow tracking by a specific 3rd party on all domains, or some combination of these. One might even imagine lists of block/allow rules similar to the Adblock Plus filter lists, reflecting commonly held perceptions of trust.
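The rule combinations described above can be modeled as (first-party, third-party) pairs with a wildcard. This is a hypothetical sketch of the decision logic only. The rule format and names are assumptions, not the format of any existing add-on.

```python
WILDCARD = "*"


def send_dnt(first_party, request_domain, allow_rules, dnt_default=True):
    """Decide whether to attach the DNT header to one request.

    first_party: the site in the address bar.
    request_domain: the domain this particular request goes to.
    allow_rules: set of (first_party_pattern, request_domain_pattern) pairs where
    tracking is allowed; "*" matches any domain.
    """
    for site_pattern, tracker_pattern in allow_rules:
        site_ok = site_pattern in (WILDCARD, first_party)
        tracker_ok = tracker_pattern in (WILDCARD, request_domain)
        if site_ok and tracker_ok:
            return False  # the user allowed tracking here, so omit the header
    return dnt_default


rules = {
    ("news.example", WILDCARD),       # trust every tracker on one site
    (WILDCARD, "analytics.example"),  # trust one third party everywhere
    ("shop.example", "ads.example"),  # one specific combination
}
print(send_dnt("blog.example", "ads.example", rules))  # True: header sent
print(send_dnt("news.example", "ads.example", rules))  # False: allowed by site rule
```

Keeping the default as “send DNT” means that a missing or malformed rule fails toward privacy rather than toward tracking.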

To prevent fingerprinting, web browsers should attempt to minimize the amount of information leaked by web requests and APIs. There are three contexts in which this could be implemented: by default, as part of the existing private browsing mode, or in a new “anonymous browsing mode.” While minimizing information leakage benefits all users, it helps DNT users in particular by making it harder to implement silent tracking mechanisms. Both Mozilla and, reportedly, the Chrome team are already making serious efforts in this direction, and I would encourage other browser vendors to do the same.
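One common way to quantify “the amount of information leaked” is self-information, measured in bits. An attribute value shared by a fraction f of users reveals log2(1/f) bits. If the attributes are independent (an assumption that real fingerprints often violate), the bits add up. The attribute frequencies below are invented for illustration.

```python
import math


def surprisal_bits(fraction_of_users):
    """Bits of identifying information revealed by a value shared by this fraction of users."""
    return -math.log2(fraction_of_users)


# Invented frequencies, for illustration only.
observed = {
    "screen resolution": 1 / 16,
    "time zone": 1 / 32,
    "installed fonts": 1 / 1024,
}
total = sum(surprisal_bits(f) for f in observed.values())  # assumes independence
print(f"{total:.0f} bits")  # 19 bits: roughly 1 in 2**19 (about 524,000) users share this combination
```

In these terms, minimizing leakage means making each reported value more common (for example, by exposing a standardized value), which pushes its surprisal toward zero.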

A final avenue for user empowerment that I want to highlight is the possibility of achieving some form of browser history-based targeting without tracking. This gives me an opportunity to plug Adnostic, a Stanford-NYU collaborative effort which was developed with just this motivation. Our whitepaper describes the design as well as a prototype implementation.
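The core idea behind history-based targeting without tracking can be sketched as client-side ad selection. The ad network sends a handful of candidate ads, and the browser picks one using a profile that never leaves the user’s machine. This is a heavily simplified illustration with hypothetical names and topic labels. It omits everything the Adnostic design adds on top, most notably how the ad network is billed for impressions without learning which ad was shown.

```python
from collections import Counter


def pick_ad_locally(candidate_ads, history_topics):
    """Choose an ad inside the browser (hypothetical sketch).

    candidate_ads: list of (ad_id, set_of_topics) sent by the ad network for one slot.
    history_topics: Counter of topics derived from local browsing history;
    it is read here but never sent anywhere.
    Ties go to the first candidate in the list.
    """
    def score(ad):
        _, topics = ad
        return sum(history_topics[topic] for topic in topics)

    best_ad_id, _ = max(candidate_ads, key=score)
    return best_ad_id


history = Counter({"cycling": 7, "cooking": 2})  # stays on the user's machine
candidates = [
    ("ad-cars", {"cars"}),
    ("ad-bikes", {"cycling", "outdoors"}),
    ("ad-knives", {"cooking"}),
]
print(pick_ad_locally(candidates, history))  # ad-bikes
```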

This article is the result of several conversations with Jonathan Mayer and Lee Tien, as well as discussions with Peter Eckersley, Sid Stamm, John Mitchell, Dan Boneh and others. Elie Bursztein also deserves thanks for originally bringing DNT to my attention. Any errors, omissions and opinions are my own.
