Posts tagged ‘re-identification’

One more re-identification demonstration, and then I’m out

What should we do about re-identification? Back when I started this blog in grad school seven years ago, I subtitled it “The end of anonymous data and what to do about it,” anticipating that I’d work on re-identification demonstrations as well as technical and policy solutions. As it turns out, I’ve looked at the former much more often than the latter. That said, my recent paper A Precautionary Approach to Big Data Privacy with Joanna Huey and Ed Felten tackles the “what to do about it” question head-on. We present a comprehensive set of recommendations for policy makers and practitioners.

One more re-identification demonstration, and then I’m out. Overall, I’ve moved on in terms of my research interests to other topics like web privacy and cryptocurrencies. That said, there’s one fairly significant re-identification demonstration I hope to do some time this year. This is something I started in grad school, obtained encouraging preliminary results on, and then put on the back burner. Stay tuned.

Machine learning and re-identification. I’ve argued that the algorithms used in re-identification turn up everywhere in computer science. I’m still interested in these algorithms from this broader perspective. My recent collaboration on de-anonymizing programmers using coding style is a good example. It uses more sophisticated machine learning than most of my earlier work on re-identification, and the potential impact is more in forensics than in privacy.

Privacy and ethical issues in big data. There’s a new set of thorny challenges in big data — privacy-violating inferences, fairness of machine learning, and ethics in general. I’m collaborating with technology ethics scholar Solon Barocas on these topics. Here’s an abstract we wrote recently, just to give you a flavor of what we’re doing:

How to do machine learning ethically

Every now and then, a story about inference goes viral. You may remember the one about Target advertising to customers who were determined to be pregnant based on their shopping patterns. The public reacts by showing deep discomfort about the power of inference and says it’s a violation of privacy. On the other hand, the company in question protests that there was no wrongdoing — after all, they had only collected innocuous information on customers’ purchases and hadn’t revealed that data to anyone else.

This common pattern reveals a deep disconnect between what people seem to care about when they cry privacy foul and the way the protection of privacy is currently operationalized. The idea that companies shouldn’t make inferences based on data they’ve legally and ethically collected might be disturbing and confusing to a data scientist.

And yet, we argue that doing machine learning ethically means accepting and adhering to boundaries on what’s OK to infer or predict about people, as well as how learning algorithms should be designed. We outline several categories of inference that run afoul of privacy norms. Finally, we explain why ethical considerations sometimes need to be built in at the algorithmic level, rather than being left to whoever is deploying the system. While we identify a number of technical challenges that we don’t quite know how to solve yet, we also provide some guidance that will help practitioners avoid these hazards.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

Reidentification as Basic Science

This essay originally appeared on the Bill of Health blog as part of a conversation on the law, ethics and science of reidentification demonstrations.

What really drives reidentification researchers? Do we publish these demonstrations to alert individuals to privacy risks? To shame companies? For personal glory? If our goal is to improve privacy, are we doing it in the best way possible?

In this post I’d like to discuss my own motivations as a reidentification researcher, without speaking for anyone else. Certainly I care about improving privacy outcomes, in the sense of making sure that companies, governments and others don’t get away with mathematically unsound promises about the privacy of consumers’ data. But there is a quite different goal I care about at least as much: reidentification algorithms. These algorithms are my primary object of study, and so I see reidentification research partly as basic science.

Let me elaborate on why reidentification algorithms are interesting and important. First, they yield fundamental insights about people — our interests, preferences, behavior, and connections — as reflected in the datasets collected about us. Second, as is the case with most basic science, these algorithms turn out to have a variety of applications other than reidentification, both for good and bad. Let us consider some of these.

First and foremost, reidentification algorithms are directly applicable in digital forensics and intelligence. Analyzing the structure of a terrorist network (say, based on surveillance of movement patterns and meetings) to assign identities to nodes is technically very similar to social network deanonymization. A reidentification researcher that I know who is a U.S. citizen tells me he has been contacted more than once by intelligence agencies to apply his expertise to their data.

Homer et al.’s work on identifying individuals in DNA mixtures is another great example of how forensics algorithms are inextricably linked to privacy-infringing applications. In addition to DNA and network structure, writing style and location trails are other attributes that have been utilized both in reidentification and forensics.

It is not a coincidence that the reidentification literature often uses the word “fingerprint” — this body of work has generalized the notion of a fingerprint beyond physical attributes to a variety of other characteristics. Just like physical fingerprints, there are good uses and bad, but regardless, finding generalized fingerprints is a contribution to human knowledge. A fundamental question is how much information (i.e., uniqueness) there is in each of these types of attributes or characteristics. Reidentification research is gradually helping answer this question, but much remains unknown.

It is not only people that are fingerprintable; so are various physical devices. A wonderful set of (unrelated) research papers has shown that many types of devices, objects, and software systems, even supposedly identical ones, have unique fingerprints: blank paper, digital cameras, RFID tags, scanners and printers, and web browsers, among others. The techniques are similar to reidentification algorithms, and once again straddle security-enhancing and privacy-infringing applications.

Even more generally, reidentification algorithms are classification algorithms for the case when the number of classes is very large. Classification algorithms categorize observed data into one of several classes, i.e., categories. They are at the core of machine learning, but typical machine-learning applications rarely need to consider more than several hundred classes. Thus, reidentification science is helping develop our knowledge of how best to extend classification algorithms as the number of classes increases.

Moving on, research on reidentification and other types of “leakage” of information reveals a problem with the way data-mining contests are run. Most commonly, some elements of a dataset are withheld, and contest participants are required to predict these unknown values. Reidentification allows contestants to bypass the prediction process altogether by simply “looking up” the true values in the original data! For an example and more elaborate explanation, see this post on how my collaborators and I won the Kaggle social network challenge. Demonstrations of information leakage have spurred research on how to design contests without such flaws.

If reidentification can cause leakage and make things messy, it can also clean things up. In a general form, reidentification is about connecting common entities across two different databases. Quite often in real-world datasets there is no unique identifier, or it is missing or erroneous. Just about every programmer who does interesting things with data has dealt with this problem at some point. In the research world, William Winkler of the U.S. Census Bureau has authored a survey of “record linkage”, covering well over a hundred papers. I’m not saying that the high-powered machinery of reidentification is necessary here, but the principles are certainly useful.

In my brief life as an entrepreneur, I utilized just such an algorithm for the back-end of the web application that my co-founders and I built. The task in question was to link a (musical) artist profile from last.fm to the corresponding Wikipedia article based on discography information (linking by name alone fails in any number of interesting ways). On another occasion, for the theory of computing blog aggregator that I run, I wrote code to link authors of papers uploaded to arXiv to their DBLP profiles based on the list of coauthors.
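Linking two profiles by a shared set-valued attribute (album titles, coauthor names) can be sketched as follows. This is a minimal illustration, not the code described above: the function names, the Jaccard overlap score, and the 0.5 threshold are all assumptions made for the example.

```python
def jaccard(a, b):
    """Jaccard overlap between two collections (0.0 when both are empty)."""
    a, b = set(a), set(b)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def link_records(left, right, threshold=0.5):
    """Link each left record to the right record whose attribute set overlaps most.

    left, right: dict mapping record id -> iterable of attribute strings
                 (e.g. album titles or coauthor names; hypothetical schema).
    Returns a dict mapping left id -> right id, keeping only matches whose
    overlap score is at or above the threshold.
    """
    links = {}
    for left_id, left_attrs in left.items():
        best_id, best_score = None, 0.0
        for right_id, right_attrs in right.items():
            score = jaccard(left_attrs, right_attrs)
            if score > best_score:
                best_id, best_score = right_id, score
        if best_id is not None and best_score >= threshold:
            links[left_id] = best_id
    return links
```

The threshold is what keeps a weak best candidate from being linked at all; where to set it depends on how noisy the two sources are.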

There is more, but I’ll stop here. The point is that these algorithms are everywhere.

If the algorithms are the key, why perform demonstrations of privacy failures? To put it simply, algorithms can’t be studied in a vacuum; we need concrete cases to test how well they work. But it’s more complicated than that. First, as I mentioned earlier, keeping the privacy conversation intellectually honest is one of my motivations, and these demonstrations help. Second, in the majority of cases, my collaborators and I have chosen to examine pairs of datasets that were already public, and so our work did not uncover the identities of previously anonymous subjects, but merely helped to establish that this could happen in other instances of “anonymized” data sharing.

Third, and I consider this quite unfortunate, reidentification results are taken much more seriously if researchers do uncover identities, which naturally gives us an incentive to do so. I’ve seen this in my own work — the Netflix paper is the most straightforward and arguably the least scientifically interesting reidentification result that I’ve co-authored, and yet it received by far the most attention, all because it was carried out on an actual dataset published by a company rather than demonstrated hypothetically.

My primary focus on the fundamental research aspect of reidentification guides my work in an important way. There are many, many potential targets for reidentification — despite all the research, data holders often (rationally) act like nothing has changed and continue to make data releases with “PII” removed. So which dataset should I pick to work on?

Focusing on the algorithms makes it a lot easier. One of my criteria for picking a reidentification question to work on is that it must lead to a new algorithm. I’m not at all saying that all reidentification researchers should do this, but for me it’s a good way to maximize the impact I can hope for from my research, while minimizing controversies about the privacy of the subjects in the datasets I study.

I hope this post has given you some insight into my goals, motivations, and research outputs, and an appreciation of the fact that there is more to reidentification algorithms than their application to breaching privacy. It will be useful to keep this fact in the back of our minds as we continue the conversation on the ethics of reidentification.

Thanks to Vitaly Shmatikov for reviewing a draft.


New Developments in Deanonymization

This post is a roundup of developments in deanonymization in the last few months. Let’s start with two stories relating to how a malicious website can silently discover the identity of a visitor, which is an insidious type of privacy breach that I’ve written about quite a bit (1, 2, 3, 4, 5, 6).

Firefox bug exposed your identity. The first is a vulnerability resulting from a Firefox bug in the implementation of functions like exec and test. The bug allows a website to learn the URL of an embedded iframe from some other domain. How can this lead to uncovering the visitor’s identity? Because twitter.com/lists redirects to twitter.com/<username>/lists. This allows a malicious website to open a hidden iframe pointing to twitter.com/lists, query the URL after redirection, and learn the visitor’s Twitter handle (if they are logged in). [1,2]

This is very similar to a previous bug in Firefox that led to the same type of vulnerability. The URL redirect that was exploited there was google.com/profiles/me → user-specific URL. It would be interesting to find and document all such generic-URL → user-specific-URL redirects in major websites. I have a feeling this won’t be the last time such redirection will be exploited.

Visitor deanonymization in the wild. The second story is an example of visitor deanonymization happening in the wild. It appears that the technique utilizes a tracking cookie from a third-party domain to which the visitor previously gave their email and other information; in other words, it is #3 in my five-fold categorization of ways in which identity can be attached to browsing logs.

I don’t consider this instance to be particularly significant — I’m sure there are other implementations in the wild — and it’s not technically novel, but this is the first time as far as I know that it’s gotten significant attention from the public, even if only in tech circles. I see this as a first step in a feedback loop of changing expectations about online anonymity emboldening more sites to deanonymize visitors, thus further lowering the expectation of privacy.

Deanonymization of mobility traces. Let’s move on to the more traditional scenario of deanonymization of a dataset by combining it with an auxiliary, public dataset which has users’ identities. Srivatsa and Hicks have a new paper with demonstrations of deanonymization of mobility traces, i.e., logs of users’ locations over time. They use public social networks as auxiliary information, based on the insight that pairs of people who are friends are more likely to meet with each other physically. The deanonymization of Bluetooth contact traces of attendees of a conference based on their DBLP co-authorship graph is cute.

This paper adds to the growing body of evidence that anonymization of location traces can be reversed, even if the data is obfuscated by introducing errors (noise).

So many datasets, so little time. Speaking of mobility traces, Jason Baldridge points me to a dataset containing mobility traces (among other things) of 5 million “anonymous” users in the Ivory Coast recently released by telecom operator Orange. A 250-word research proposal is required to get access to the data, which is much better from a privacy perspective than a 1-click download. It introduces some accountability without making it too onerous to get the data.

In general, the incentive for computer science researchers to perform practical demonstrations of deanonymization has diminished drastically. Our goal has always been to showcase new techniques and improve our understanding of what’s possible, and not to name and shame. Even if the Orange dataset were more easily downloadable, I would think that the incentive for deanonymization researchers would be low, now that the Srivatsa and Hicks paper exists and we know for sure that mobility traces can be deanonymized, even though the experiments in the paper are on a far smaller scale.

Head in the sand: rational?! I gave a talk at a privacy workshop recently taking a look back at how companies have reacted to deanonymization research. My main point was that there’s a split between the take-your-data-and-go-home approach (not releasing data because of privacy concerns) and the head-in-the-sand approach (pretending the problem doesn’t exist). Unfortunately but perhaps unsurprisingly, there has been very little willingness to take a middle ground, engaging with data privacy researchers and trying to adopt technically sophisticated solutions.

Interestingly, head-in-the-sand might be rational from companies’ point of view. On the one hand, researchers don’t have the incentive for deanonymization anymore. On the other hand, if malicious entities do it, naturally they won’t talk about it in public, so there will be no PR fallout. Regulators have not been very aggressive in investigating anonymized data releases in the absence of a public outcry, so that may be a negligible risk.

Some have questioned whether deanonymization in the wild is actually happening. I think it’s a bit silly to assume that it isn’t, given the economic incentives. Of course, I can’t prove this and probably never can. No company doing it will publicly talk about it, and the privacy harms are so indirect that tying them to a specific data release is next to impossible. I can only offer anecdotes to explain my position: I have been approached multiple times by organizations who wanted me to deanonymize a database they’d acquired, and I’ve had friends in different industries mention casually that what they do on a daily basis to combine different databases together is essentially deanonymization.

[1] For a discussion of why a social network profile is essentially equivalent to an identity, see here and the epilog here.

[2] Mozilla pulled Firefox 16 as a result and quickly fixed the bug.


Link Prediction by De-anonymization: How We Won the Kaggle Social Network Challenge

The title of this post is also the title of a new paper of mine with Elaine Shi and Ben Rubinstein. You can grab a PDF or a web-friendly HTML version generated using my Project Luther software.

A brief de-anonymization history. As early as the first version of my Netflix de-anonymization paper with Vitaly Shmatikov back in 2006, a colleague suggested that de-anonymization can in fact be used to game machine-learning contests, by simply “looking up” the attributes of de-anonymized users instead of predicting them. We off-handedly threw in a paragraph in our paper discussing this possibility, and a New Scientist writer seized on it as an angle for her article.[1] Nothing came of it, of course; we had no interest in gaming the Netflix Prize.

During the years 2007-2009, Shmatikov and I worked on de-anonymizing social networks. The paper that resulted (PDF, HTML) showed how to take two graphs representing social networks and map the nodes to each other based on the graph structure alone—no usernames, no nothing. As you might imagine, this was a phenomenally harder technical challenge than our Netflix work. (Backstrom, Dwork and Kleinberg had previously published a paper on social network de-anonymization; the crucial difference was that we showed how to put two social network graphs together rather than search for a small piece of graph-structured auxiliary information in a large graph.)

The context for these two papers is that data mining on social networks—whether online social networks, telephone call networks, or any type of network of links between individuals—can be very lucrative. Social networking websites would benefit from outsourcing “anonymized” graphs to advertisers and such; we showed that the privacy guarantees are questionable-to-nonexistent since the anonymization can be reversed. No major social network has gone down this path (as far as I know), quite possibly in part because of the two papers, although smaller players often fly under the radar.

The Kaggle contest. Kaggle is a platform for machine learning competitions. They ran the IJCNN social network challenge to promote research on link prediction. The contest dataset was created by crawling an online social network—which was later revealed to be Flickr—and partitioning the obtained edge set into a large training set and a smaller test set of edges augmented with an equal number of fake edges. The challenge was to predict which edges were real and which were fake. Node identities in the released data were obfuscated.
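The construction of such a contest dataset can be sketched roughly as below. This is an assumption-laden illustration rather than Kaggle's actual procedure: the function name, the treatment of edges as directed pairs without self-loops, the test fraction, and the way fake edges are sampled are all hypothetical. Obfuscating node identities would be a further relabeling step, omitted here.

```python
import random


def make_contest_split(edges, nodes, test_fraction=0.1, seed=0):
    """Split real edges into train/test and pad the test set with fake edges.

    edges: iterable of (u, v) directed pairs with no self-loops (assumption).
    nodes: iterable of all node ids.
    Returns (train_edges, test_items) where each test item is
    ((u, v), is_real).
    """
    rng = random.Random(seed)
    edges = list(edges)
    nodes = list(nodes)
    edge_set = set(edges)
    rng.shuffle(edges)

    n_test = int(len(edges) * test_fraction)
    # Guard against looping forever when there are too few non-edges to sample.
    max_fake = len(nodes) * (len(nodes) - 1) - len(edge_set)
    if n_test > max_fake:
        raise ValueError("not enough non-edges to sample fake test edges")

    test_real, train = edges[:n_test], edges[n_test:]

    # Sample an equal number of node pairs that are not edges in the crawl.
    fake = set()
    while len(fake) < n_test:
        u, v = rng.choice(nodes), rng.choice(nodes)
        if u != v and (u, v) not in edge_set:
            fake.add((u, v))

    test = [(e, True) for e in test_real] + [(e, False) for e in sorted(fake)]
    rng.shuffle(test)
    return train, test
```

A contestant sees only the pairs in the test set, not the real/fake flag; the flag is what the organizers score against.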

There are many, many anonymized databases out there; I come across a new one every other week. I pick a de-anonymization project if it will advance the art significantly (yes, de-anonymization is still partly an art), or if it is fun. The Kaggle contest was a bit of both, and so when my collaborators invited me to join them, it was too juicy to pass up.

The Kaggle contest is actually much more suitable to game through de-anonymization than the Netflix Prize would have been. As we explain in the paper:

One factor that greatly affects both [the privacy risk and the risk of gaming]—in opposite directions—is whether the underlying data is already publicly available. If it is, then there is likely no privacy risk; however, it furnishes a ready source of high-quality data to game the contest.

The first step was to do our own crawl of Flickr; this turned out to be relatively easy. The two graphs (the Kaggle graph and our Flickr crawl) were 95% similar, as we were later able to determine. The difference is primarily due to Flickr users adding and deleting contacts between Kaggle’s crawl and ours. Armed with the auxiliary data, we set about the task of matching up the two graphs based on the structure. To clarify: our goal was to map the nodes in the Kaggle training and test dataset to real Flickr nodes. That would allow us to simply look up the pairs of nodes in the test set in the Flickr graph to see whether or not the edge exists.

De-anonymization. Our effort validated the broad strategy in my paper with Shmatikov, which consists of two steps: “seed finding” and “propagation.” In the former step we somehow de-anonymize a small number of nodes; in the latter step we use these as “anchors” to propagate the de-anonymization to more and more nodes. In this step the algorithm feeds on its own output.

Let me first describe propagation because it is simpler.[2] As the algorithm progresses, it maintains a (partial) mapping between the nodes in the true Flickr graph and the Kaggle graph. We iteratively try to extend the mapping as follows: pick an arbitrary as-yet-unmapped node in the Kaggle graph, find the “most similar” node in the Flickr graph, and if they are “sufficiently similar,” they get mapped to each other.

Similarity between a Kaggle node and a Flickr node is defined as cosine similarity between the already-mapped neighbors of the Kaggle node and the already-mapped neighbors of the Flickr node (nodes mapped to each other are treated as identical for the purpose of cosine comparison).

In the diagram, the blue nodes have already been mapped. The similarity between A and B is 2 / (√3·√3) = ⅔. Whether or not edges exist between A and A’ or B and B’ is irrelevant.


There are many heuristics that go into the “sufficiently similar” criterion, which are described in our paper. Due to the high percentage of common edges between the graphs, we were able to use a relatively pure form of the propagation algorithm; the one in my paper with Shmatikov, in contrast, was filled with lots more messy heuristics.
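The similarity measure and the propagation loop described above can be sketched as follows. This is a simplified illustration, not the contest code: it treats both graphs as undirected adjacency dicts, and it replaces the paper's “sufficiently similar” heuristics with a single fixed threshold, which is purely an assumption. All function and parameter names are hypothetical.

```python
import math


def similarity(k_node, f_node, kaggle_adj, flickr_adj, mapping):
    """Cosine similarity between the already-mapped neighbors of two nodes.

    mapping: dict from Kaggle node id -> Flickr node id (the partial mapping).
    Mapped Kaggle neighbors are translated to Flickr ids so that the two
    neighbor sets can be compared directly.
    """
    mapped_targets = set(mapping.values())
    k_nbrs = {mapping[n] for n in kaggle_adj[k_node] if n in mapping}
    f_nbrs = {n for n in flickr_adj[f_node] if n in mapped_targets}
    if not k_nbrs or not f_nbrs:
        return 0.0
    return len(k_nbrs & f_nbrs) / math.sqrt(len(k_nbrs) * len(f_nbrs))


def propagate(kaggle_adj, flickr_adj, seeds, threshold=0.5):
    """Extend a seed mapping until no further node can be mapped."""
    mapping = dict(seeds)
    used = set(mapping.values())
    changed = True
    while changed:
        changed = False
        for k_node in kaggle_adj:
            if k_node in mapping:
                continue
            # Find the most similar as-yet-unused Flickr node.
            best, best_score = None, 0.0
            for f_node in flickr_adj:
                if f_node in used:
                    continue
                score = similarity(k_node, f_node, kaggle_adj, flickr_adj, mapping)
                if score > best_score:
                    best, best_score = f_node, score
            # Stand-in for the "sufficiently similar" test.
            if best is not None and best_score >= threshold:
                mapping[k_node] = best
                used.add(best)
                changed = True
    return mapping
```

For two nodes that each have three already-mapped neighbors, two of them in common, `similarity` returns 2 / √(3·3) = ⅔, matching the diagram's example. Because each newly mapped node changes the neighbor sets of others, the loop keeps sweeping until a full pass adds nothing.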

Those elusive seeds. Seed identification was far more challenging. In the earlier paper, we didn’t do seed identification on real graphs; we only showed it possible under certain models for error in auxiliary information. We used a “pattern-search” technique; the Backstrom et al. paper uses a similar approach. It wasn’t clear whether this method would work, for reasons I won’t go into.

So we developed a new technique based on “combinatorial optimization.” At a high level, this means that instead of finding seeds one by one, we try to find them all at once! The first step is to find a set of k (we used k=20) nodes in the Kaggle graph and k nodes in our Flickr graph that are likely to correspond to each other (in some order); the next step is to find this correspondence.

The latter step is the hard one, and basically involves solving an NP-hard problem of finding a permutation that minimizes a certain weighting function. During the contest I basically stared at this page of numbers for a couple of hours, and then wrote down the mapping, which to my great relief turned out to be correct! But later we were able to show how to solve it in an automated and scalable fashion using simulated annealing, a well-known technique to approximately solve NP-hard problems for small enough problem sizes. This method is one of the main research contributions in our paper.
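A simulated-annealing search over permutations can be sketched as below. The weighting function from the paper is not given in this post, so the cost used here (the sum of absolute differences between pairwise weights, for instance common-neighbor counts among the k candidate nodes on each side) is a stand-in assumption, as are the function names, the swap move, and the geometric cooling schedule.

```python
import math
import random


def mapping_cost(perm, a, b):
    """Disagreement between pairwise weights under a candidate correspondence.

    a[i][j], b[i][j]: symmetric pairwise weights within each candidate set.
    perm[i]: index in b that node i of a is matched to.
    """
    k = len(perm)
    return sum(abs(a[i][j] - b[perm[i]][perm[j]])
               for i in range(k) for j in range(i + 1, k))


def anneal(a, b, steps=20000, t_start=1.0, t_end=0.01, seed=0):
    """Approximately minimize mapping_cost over permutations (needs k >= 2)."""
    rng = random.Random(seed)
    k = len(a)
    perm = list(range(k))
    rng.shuffle(perm)
    cost = mapping_cost(perm, a, b)
    best, best_cost = perm[:], cost
    for step in range(steps):
        # Geometric cooling from t_start down towards t_end.
        temp = t_start * (t_end / t_start) ** (step / steps)
        i, j = rng.sample(range(k), 2)
        perm[i], perm[j] = perm[j], perm[i]
        new_cost = mapping_cost(perm, a, b)
        # Always accept improvements; accept worse moves with a probability
        # that shrinks as the temperature drops.
        if new_cost <= cost or rng.random() < math.exp((cost - new_cost) / temp):
            cost = new_cost
            if cost < best_cost:
                best, best_cost = perm[:], cost
        else:
            perm[i], perm[j] = perm[j], perm[i]  # undo the swap
    return best, best_cost
```

Returning the best permutation seen, rather than the final one, means late uphill moves cannot make the result worse than an earlier state.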

After carrying out seed identification, and then propagation, we had de-anonymized about 65% of the edges in the contest test set and the accuracy was about 95%. The main reason we didn’t succeed on the other third of the edges was that one or both of the nodes had a very small number of contacts/friends, resulting in too little information to de-anonymize. Our task was far from over: combining de-anonymization with regular link prediction also involved nontrivial research insights, for which I will again refer you to the relevant section of the paper.

Lessons. The main question that our work raises is where this leaves us with respect to future machine-learning contests. One necessary step that would help a lot is to amend contest rules to prohibit de-anonymization and to require source code submission for human verification, but as we explain in the paper:

The loophole in this approach is the possibility of overfitting. While source-code verification would undoubtedly catch a contestant who achieved their results using de-anonymization alone, the more realistic threat is that of de-anonymization being used to bridge a small gap. In this scenario, a machine learning algorithm would be trained on the test set, the correct results having been obtained via de-anonymization. Since successful [machine learning] solutions are composites of numerous algorithms, and consequently have a huge number of parameters, it should be possible to conceal a significant amount of overfitting in this manner.

As with the privacy question, there are no easy answers. It has been over a decade since Latanya Sweeney’s work provided the first dramatic demonstration of the privacy problems with data anonymization; we still aren’t close to fixing things. I foresee a rocky road ahead for machine-learning contests as well. I expect I will have more to say about this topic on this blog; stay tuned.

[1] Amusingly, it was a whole year after that before anyone paid any attention to the privacy claims in that paper.

[2] The description is from my post on the Kaggle forum which also contains a few additional details.


Myths and Fallacies of “Personally Identifiable Information”

I have a new paper (PDF) with Vitaly Shmatikov in the June issue of the Communications of the ACM. We talk about the technical and legal meanings of “personally identifiable information” (PII) and argue that the term means next to nothing and must be greatly de-emphasized, if not abandoned, in order to have a meaningful discourse on data privacy. Here are the main points:

The notion of PII is found in two very different types of laws: data breach notification laws and information privacy laws. In the former, the spirit of the term is to encompass information that could be used for identity theft. We have absolutely no issue with the sense in which PII is used in this category of laws.

On the other hand, in laws and regulations aimed at protecting consumer privacy, the intent is to compel data trustees who want to share or sell data to scrub “PII” in a way that prevents the possibility of re-identification. As readers of this blog know, this is essentially impossible to do in a foolproof way without losing the utility of the data. Our paper elaborates on this and explains why “PII” has no technical meaning, given that virtually any non-trivial information can potentially be used for re-identification.

What we are gunning for is the get-out-of-jail-free card, a.k.a. “safe harbor,” particularly in the HIPAA (health information privacy) context. In current practice, data owners can absolve themselves of responsibility by performing a syntactic “de-identification” of the data (although this isn’t the spirit of the law). Even your genome is not considered identifying!

Meaningful privacy protection is possible if account is taken of the specific types of computations that will be performed on the data (e.g., collaborative filtering, fraud detection, etc.). It is virtually impossible to guarantee privacy by considering the data alone, without carefully defining and analyzing its desired uses.

We are well aware of the burden that this imposes on data trustees, many of whom find even the current compliance requirements onerous. Often there is no one available who understands computer science or programming, and there is no budget to hire someone who does. That is certainly a conundrum, and it isn’t going to be fixed overnight. However, the current situation is a farce and needs to change.

Given that technologically sophisticated privacy protection mechanisms require a fair bit of expertise (although we hope that they will become commoditized in a few years), one possible way forward is by introducing stronger acceptable-use agreements. Such agreements would dictate what the collector or recipient of the data can and cannot do with it. They should be combined with some form of informed consent, where users (or, in the health care context, patients) acknowledge their understanding that there is a re-identification risk. But the law needs to change to pave the way for this more enlightened approach.

Thanks to Vitaly Shmatikov for comments on a draft of this post.


The Secret Life of Data

Some people claim that re-identification attacks don’t matter, the reasoning being: “I’m not important enough for anyone to want to invest time on learning private facts about me.” At first sight that seems like a reasonable argument, at least in the context of the re-identification algorithms I have worked on, which require considerable human and machine effort to implement.

The argument is nonetheless fallacious, because re-identification typically doesn’t happen at the level of the individual. Rather, the investment of effort yields results over the entire database of millions of people (hence the emphasis on “large-scale” or “en masse”). On the other hand, the harm that occurs from re-identification affects individuals. This asymmetry exists because the party interested in re-identifying you and the party carrying out the re-identification are not the same.

In today’s world, the entities most interested in acquiring and de-anonymizing large databases might be data aggregation companies like ChoicePoint that sell intelligence on individuals, whereas the party interested in using the re-identified information about you would be their clients/customers: law enforcement, an employer, an insurance company, or even a former friend out to slander you.

Data passes through multiple companies or entities before reaching its destination, making it hard to prove or even detect that it originated from a de-anonymized database. There are lots of companies known to sell “anonymized” customer data: for example Practice Fusion “subsidizes its free EMRs by selling de-identified data to insurance groups, clinical researchers and pharmaceutical companies.” On the other hand, companies carrying out data aggregation/de-anonymization are a lot more secretive about it.

Another piece of the puzzle is what happens when a company goes bankrupt. deCODE genetics recently did, which is particularly interesting because they are sitting on a ton of genetic data. There are privacy assurances in place in their original Terms of Service with their customers, but will those bind the new owner of the assets? These are legal gray areas, and are frequently exploited by companies looking to acquire data.

At the recent FTC privacy roundtable, Scott Taylor of Hewlett Packard said his company regularly had the problem of not being able to determine where data is being shared downstream after the first point of contact. I’m sure the same is true of other companies as well. (How then could we possibly expect third-party oversight of this process?) Since data fuels the modern Web economy, I suspect that the process of moving data around will continue to become more common as well as more complex, with more steps in the chain. We could use a good name for it — “data laundering,” perhaps?

De-anonymization is not X: The Need for Re-identification Science

In an abstract sense, re-identifying a record in an anonymized collection using a piece of auxiliary information is nothing more than identifying which of N vectors best matches a given vector. As such, it is related to many well-studied problems from other areas of information science: the record linkage problem in statistics and census studies, the search problem in information retrieval, the classification problem in machine learning, and finally, biometric identification. Noticing inter-disciplinary connections is often very illuminating and sometimes leads to breakthroughs, but I fear that in the case of re-identification, these connections have done more harm than good.

Record linkage and k-anonymity. Sweeney’s well-known experiment with health records was essentially an exercise in record linkage. The re-identification technique used was the simplest possible: a database JOIN. The unfortunate consequence was that for many years, the anonymization problem was overgeneralized based on that single experiment. In particular, it led to the development of two related and heavily flawed notions: k-anonymity and quasi-identifier.
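To make the JOIN concrete, here is a minimal Python sketch of linking a de-identified table to an identified one on shared demographic attributes. The field names (`zip`, `birth_date`, `sex`), the function `link_records`, and every row are invented for illustration; this is not Sweeney’s data or code.

```python
def link_records(deidentified, identified, keys=("zip", "birth_date", "sex")):
    """Join two tables on shared attributes; keep only unambiguous matches."""
    # Index the identified table by the join key.
    index = {}
    for row in identified:
        index.setdefault(tuple(row[k] for k in keys), []).append(row)

    linked = []
    for row in deidentified:
        matches = index.get(tuple(row[k] for k in keys), [])
        if len(matches) == 1:  # exactly one person fits: re-identified
            linked.append((matches[0]["name"], row["diagnosis"]))
    return linked


# Made-up rows, for illustration only.
health = [
    {"zip": "02138", "birth_date": "1950-01-02", "sex": "M", "diagnosis": "asthma"},
    {"zip": "02139", "birth_date": "1971-06-30", "sex": "F", "diagnosis": "flu"},
]
public_list = [
    {"name": "A. Example", "zip": "02138", "birth_date": "1950-01-02", "sex": "M"},
    {"name": "B. Example", "zip": "02139", "birth_date": "1971-06-30", "sex": "F"},
    {"name": "C. Example", "zip": "02139", "birth_date": "1971-06-30", "sex": "F"},
]
print(link_records(health, public_list))
```

In this toy data only the first health record is linked; the second stays ambiguous because two people share its attributes. Note that the join is exact: a single mistyped digit in any key would break the match, which is why this technique does not carry over to noisier data.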

The main problem with k-anonymity is that it attempts to avoid privacy breaches via purely syntactic manipulations of the data, without any model for reasoning about the ‘adversary’ or attacker. A future post will analyze the limitations of k-anonymity in more detail. ‘Quasi-identifier’ is a notion that arises from attempting to see some attributes (such as ZIP code) but not others (such as tastes and behavior) as contributing to re-identifiability. However, the major lesson from the re-identification papers of the last few years has been that any information at all about a person can potentially be used to aid re-identification.

Movie ratings and noise. Let’s move on to other connections that turned out to be red herrings. Prior to our Netflix paper, Frankowski et al. studied de-anonymization of users via movie ratings collected as part of the GroupLens research project. Their algorithm achieved some success, but failed when noise was added to the auxiliary information. I believe this to be because the authors modeled re-identification as a search problem. (I have no way to know if that was their mental model, but the algorithms they came up with seem inspired by the search literature.)

What does it mean to view re-identification as a search problem? A user’s anonymized movie preference record is treated as the collection of words on a web page, and the auxiliary information (another record of movie preferences, from a different database) is treated as a list of search terms. The reason this approach fails is that in the movie context, users typically enter distinct, albeit overlapping, sets of information into different sites or sources. This leads to a great deal of ‘noise’ that the algorithm must deal with. While noise in web pages is of course an issue for web search, noise in the search terms themselves is not. That explains why search algorithms come up short when applied to re-identification.

The robustness against noise was the key distinguishing element that made the re-identification attack in the Netflix paper stand out from most previous work. Any re-identification attack that goes beyond Sweeney-style demographic attributes must incorporate this as a key feature. ‘Fuzzy’ matching is tricky, and there is no universal algorithm that can be used. Rather, it needs to be tailored to the type of dataset based on an understanding of human behavior.
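As a rough illustration of what noise-tolerant matching can look like, the Python sketch below scores an auxiliary record against each anonymized record while tolerating missing items and slightly different ratings. It is not the algorithm from the Netflix paper; the names `similarity` and `rank_candidates`, the one-star tolerance, and the logarithmic rarity weight are all assumptions chosen for illustration.

```python
import math


def similarity(aux, record, popularity, tolerance=1):
    """Score how well a noisy auxiliary record matches an anonymized record.

    aux, record: dicts mapping item -> rating.
    popularity:  dict mapping item -> number of users who rated that item.
    """
    score = 0.0
    for item, rating in aux.items():
        if item not in record:
            continue  # items missing from the record are tolerated, not penalized
        if abs(record[item] - rating) <= tolerance:
            # Rarely rated items say more about a person, so they weigh more.
            score += 1.0 / math.log(2 + popularity.get(item, 0))
    return score


def rank_candidates(aux, database, popularity):
    """Return (score, record_id) pairs, best match first."""
    scored = [(similarity(aux, rec, popularity), rid) for rid, rec in database.items()]
    return sorted(scored, reverse=True)


# Made-up data: the auxiliary record is off by one star on "A" and contains
# an item ("Z") that appears nowhere in the anonymized database.
database = {
    "r1": {"A": 5, "B": 3, "C": 4},
    "r2": {"A": 5, "D": 2},
    "r3": {"B": 1, "E": 5},
}
popularity = {"A": 1000, "B": 500, "C": 3, "D": 40, "E": 7}
aux = {"A": 4, "C": 4, "Z": 2}

ranking = rank_candidates(aux, database, popularity)
print(ranking[0][1])
```

Here the obscure item "C" contributes far more to the score of "r1" than the popular item "A" does, which is the behavioral intuition a hand-tailored matcher tries to encode.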

Hope for authorship recognition. Now for my final example. I’m collaborating with other researchers, including John Bethencourt and Emil Stefanov, on some (currently exploratory) investigations into authorship recognition (see my post on De-anonymizing the Internet). We’ve been wondering why progress in existing papers seems to hit a wall at around 100 authors, and how we can break past this limit and carry out de-anonymization on a truly Internet scale. My conjecture is that most previous papers hit the wall because they framed authorship recognition as a classification problem, which is probably the right model for forensics applications. For breaking Internet anonymity, however, this model is not appropriate.

In a de-anonymization problem, if you only succeed for some fraction of the authors, but you do so in a verifiable way, i.e., your algorithm either says “Here is the identity of X” or “I am unable to de-anonymize X”, that’s great. In a classification problem, that’s not acceptable. Further, in de-anonymization, if we can reduce the set of candidate identities for X from a million to (say) 10, that’s fantastic. In a classification problem, that’s a 90% error rate.
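The difference can be sketched in a few lines of Python. A de-anonymizer is allowed to abstain, and a short list of candidates counts as progress. The functions `identify` and `shortlist` and the ratio-based margin rule are hypothetical choices made for this illustration, not a method taken from any paper.

```python
def identify(scores, min_ratio=1.5):
    """Name the top candidate only if it clearly stands out; otherwise abstain.

    scores: dict mapping candidate identity -> match score (higher is better).
    Returns an identity, or None for "I am unable to de-anonymize X".
    """
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    if not ranked:
        return None
    if len(ranked) == 1:
        return ranked[0][0]
    (best, top), (_, runner_up) = ranked[0], ranked[1]
    # Assumed decision rule: the best score must beat the runner-up by a margin.
    if top > 0 and top >= min_ratio * runner_up:
        return best
    return None


def shortlist(scores, k=10):
    """Return the k highest-scoring candidates, best first."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(identify({"alice": 9.0, "bob": 2.0}))  # clear winner
print(identify({"alice": 5.0, "bob": 4.8}))  # too close to call, so abstain
```

For a sense of scale, narrowing a million candidates down to 10 removes about log2(100,000) ≈ 16.6 of the roughly 19.9 bits of uncertainty, even though no single identity has been named.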

These may seem like minor differences, but they radically affect the variety of features that we are able to use. We can throw in a whole lot of features that only work for some authors but not for others. This is why I believe that Internet-scale text de-anonymization is fundamentally possible, although it will only work for a subset of users that cannot be predicted beforehand.

Re-identification science. Paul Ohm refers to what I and other researchers do as “re-identification science.” While this is flattering, I don’t think we’ve done enough to deserve the badge. But we need to change that, because efforts to understand re-identification algorithms by reducing them to known paradigms have been unsuccessful, as I have shown in this post. Among other things, we need to better understand the theoretical limits of anonymization and to extract the common principles underlying the more complex re-identification techniques developed in recent years.

Thanks to Vitaly Shmatikov for reviewing an earlier draft of this post.

Oklahoma Abortion Law: Bloggers get it Wrong

The State of Oklahoma just passed legislation requiring that detailed information about every abortion performed in the state be submitted to the State Department of Health. Reports based on this data are to be made publicly available. The controversy around the law gained steam rapidly after bloggers revealed that even though names and addresses of mothers obtaining abortions were not collected, the women could nevertheless be re-identified from the published data based on a variety of other required attributes such as the date of abortion, age and race, county, etc.

As a computer scientist studying re-identification, I had this brought to my attention. I was as indignant on hearing about it as the next smug Californian, and I promptly wrote up a blog post analyzing the serious risk of re-identification based on the answers to the 37 questions that each mother must anonymously report. Just before posting it, however, I decided to give the text of the law a more careful reading, and realized that the bloggers had been misinterpreting the law all along.

While it is true that the law requires submitting a detailed form to the Department of Health, the only information made public is a set of annual reports with statistical tallies of the number of abortions performed under very broad categories, which presents a negligible to non-existent re-identification risk.

I’m not defending the law; that is outside my sphere of competence. There do appear to be other serious problems with it, outlined in a lawsuit aimed at stopping the law from going into effect. The text of this complaint, as Paul Ohm notes, does not raise the “public posting” claim. Besides, the wording of the law is very ambiguous, and I can certainly see why it might have been misinterpreted.

But I do want to lament the fact that bloggers and special interest groups can start a controversy based on a careless (or less often, deliberate) misunderstanding, and have it amplified by an emerging category of news outlets like the Huffington Post, which have the credibility of blogs but a readership approaching traditional media. At this point the outrage becomes self-sustaining, and the factual inaccuracies become impossible to combat. I’m reminded of the affair of the gay sheep.

Your Morning Commute is Unique: On the Anonymity of Home/Work Location Pairs

Philippe Golle and Kurt Partridge of PARC have a cute paper (pdf) on the anonymity of geo-location data. They analyze data from the U.S. Census and show that for the average person, knowing their approximate home and work locations — to a block level — identifies them uniquely.

Even if we look at the much coarser granularity of a census tract (tracts correspond roughly to ZIP codes; there are on average 1,500 people per census tract), for the average person there are only around 20 other people who share the same home and work location. There’s more: 5% of people are uniquely identified by their home and work locations even if these are known only at the census tract level. One reason for this is that people who live and work in very different areas (say, different counties) are much more easily identifiable, as one might expect.
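The quantity being measured here is the size of each person’s anonymity set: the number of people who share the same (home, work) pair. A minimal Python sketch of that computation follows; the tract labels and the four commuters are made up, and the function names are mine rather than the paper’s.

```python
from collections import Counter


def anonymity_set_sizes(commutes):
    """Map each person to the number of people sharing their (home, work) pair.

    commutes: dict mapping person -> (home_area, work_area).
    The count includes the person themselves, so 1 means unique.
    """
    counts = Counter(commutes.values())
    return {person: counts[pair] for person, pair in commutes.items()}


def fraction_unique(commutes):
    """Fraction of people whose (home, work) pair identifies them uniquely."""
    sizes = anonymity_set_sizes(commutes)
    return sum(1 for size in sizes.values() if size == 1) / len(sizes)


# Toy data with hypothetical tract labels.
commutes = {
    "p1": ("tract_A", "tract_X"),
    "p2": ("tract_A", "tract_X"),
    "p3": ("tract_A", "tract_Y"),
    "p4": ("tract_B", "tract_X"),
}
print(anonymity_set_sizes(commutes))
print(fraction_unique(commutes))
```

Coarsening the areas (block to tract, tract to county) merges pairs and grows the sets, which is the trade-off the paper’s numbers describe.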

The paper is timely, because Location Based Services are proliferating rapidly. To understand the privacy threats, we need to ask the two usual questions:

- who has access to anonymized location data?

- how can they get access to auxiliary data linking people to location pairs, which they can then use to carry out re-identification?

The authors don’t say much about these questions, but that’s probably because there are too many possibilities to list! In this post I will examine a few.

GPS navigation. This is the most obvious application that comes to mind, and probably the most privacy-sensitive: there have been many controversies around tracking of vehicle movements, such as NYC cab drivers threatening to strike. The privacy goal is to keep the location trail of the user/vehicle unknown even to the service provider — unlike in the context of social networks, people often don’t even trust the service provider. There are several papers on anonymizing GPS-related queries, but there doesn’t seem to be much you can do to hide the origin and destination except via charmingly unrealistic cryptographic protocols.

The accuracy of GPS is a few tens or few hundreds of feet, which is the same order of magnitude as a city block. So your daily commute is pretty much unique. If you took a (GPS-enabled) cab home from work at a certain time, there’s a good chance the trip can be tied to you. If you made a detour to stop somewhere, the location of your stop can probably be determined. This is true even if there is no record tying you to a specific vehicle.

Location based social networking. Pretty soon, every smartphone will be capable of running applications that transmit location data to web services. Google Latitude and Loopt are two of the major players in this space, providing some very nifty social networking functionality on top of location awareness. It is quite tempting for service providers to outsource research/data-mining by sharing de-identified data. I don’t know if anything of the sort is being done yet, but I think it is clear that de-identification would offer very little privacy protection in this context. If a pair of locations is uniquely identifying, a trail is emphatically so.

The same threat also applies to data being subpoenaed, so data retention policies need to take into consideration the uselessness of anonymizing location data.

I don’t know if cellular carriers themselves collect a location trail from phones as a matter of course. Any idea?

Plain old web browsing. Every website worth the name identifies you with a cookie, whether you log in or not. So if you browse the web from a laptop or mobile phone from both home and work, your home and work IP addresses can be tied together based on the cookie. There are a number of free or paid databases for turning IP addresses into geographical locations. These are generally accurate up to the city level, but beyond that the accuracy is shaky.

A more accurate location fix can be obtained by IDing WiFi access points. This is a curious technological marvel that is not widely known. Skyhook, Inc. has spent years wardriving the country (and abroad) to map out the MAC addresses of wireless routers. Given the MAC address of an access point, their database can tell you where it is located. There are browser add-ons that query Skyhook’s database and determine the user’s current location. Note that you don’t have to be browsing wirelessly — all you need is at least one WiFi access point within range. This information can then be transmitted to websites which can provide location-based functionality; Opera, in particular, has teamed up with Skyhook and is “looking forward to a future where geolocation data is as assumed part of the browsing experience.” The protocol by which the browser communicates geolocation to the website is being standardized by the W3C.

The good news from the privacy standpoint is that the accurate geolocation technologies like the Skyhook plug-in (and a competing offering that is part of Google Gears) require user consent. However, I anticipate that once the plug-ins become common, websites will entice users to enable access by (correctly) pointing out that their location can only be determined to within a few hundred meters, and users will leave themselves vulnerable to inference attacks that make use of location pairs rather than individual locations.

Image metadata. An increasing number of cameras these days have (GPS-based) geotagging built-in and enabled by default. Even more awesome is the Eye-Fi card, which automatically uploads pictures you snap to Flickr (or any of dozens of other image sharing websites you can pick from) by connecting to available WiFi access points nearby. Some versions of the card do automatic geotagging in addition.

If you regularly post pseudonymously to (say) Flickr, then the geolocations of your pictures will probably reveal prominent clusters around the places you frequent, including your home and work. This can be combined with auxiliary data to tie the pictures to your identity.
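Finding those clusters does not take much. The Python sketch below snaps each photo’s coordinates to a coarse grid and reports the most frequently visited cells; the grid size, the function names, and the coordinates are all assumptions for illustration, and a real attack would likely use a proper clustering method and the photo timestamps as well.

```python
from collections import Counter


def grid_cell(lat, lon, cell_deg=0.001):
    """Snap a coordinate to a grid cell (0.001 degrees of latitude is roughly 100 m)."""
    return (round(lat / cell_deg), round(lon / cell_deg))


def frequent_places(geotags, top=2, cell_deg=0.001):
    """Return the `top` grid cells containing the most geotagged photos."""
    counts = Counter(grid_cell(lat, lon, cell_deg) for lat, lon in geotags)
    return [cell for cell, _ in counts.most_common(top)]


# Made-up geotags: four photos near one spot, three near another, one stray.
geotags = [
    (37.4271, -122.1702), (37.4273, -122.1699),
    (37.4268, -122.1701), (37.4270, -122.1703),
    (37.7793, -122.4192), (37.7791, -122.4189), (37.7789, -122.4191),
    (36.6002, -121.8947),
]
print(frequent_places(geotags))
```

The two cells that come back are candidate home and work locations, which is exactly the location pair the attacker needs.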

Now let us turn to the other major question: what are the sources of auxiliary data that might link location pairs to identities? The easiest approach is probably to buy data from Acxiom, or another provider of direct-marketing address lists. Knowing approximate home and work locations, all that the attacker needs to do is to obtain data corresponding to both neighborhoods and do a “join,” i.e., find the (hopefully) unique common individual. This should be easy with Acxiom, which lets you filter the list by “DMA code, census tract, state, MSA code, congressional district, census block group, county, ZIP code, ZIP range, radius, multi-location radius, carrier route, CBSA (whatever that is), area code, and phone prefix.”

Google and Facebook also know my home and work addresses, because I gave them that information. I expect that other major social networking sites also have such information on tens of millions of users. When one of these sites is the adversary — such as when you’re trying to browse anonymously — the adversary already has access to the auxiliary data. Google’s power in this context is amplified by the fact that they own DoubleClick, which lets them tie together your browsing activity on any number of different websites that are tracked by DoubleClick cookies.

Finally, while I’ve talked about image data being the target of de-anonymization, it may equally well be used as the auxiliary information that links a location pair to an identity — a non-anonymous Flickr account with sufficiently many geotagged photos probably reveals an identifiable user’s home and work locations. (Some attack techniques that I describe on this blog, such as crawling image metadata from Flickr to reveal people’s home and work locations, are computationally expensive to carry out on a large scale but not algorithmically hard; such attacks, as can be expected, will rapidly become more feasible with time.)

Summary. A number of devices in our daily lives transmit our physical location to service providers whom we don’t necessarily trust, and who might keep this data around or transmit it to third parties we don’t know about. The average user simply doesn’t have the patience to analyze and understand the privacy implications, making anonymity a misleadingly simple way to assuage their concerns. Unfortunately, anonymity breaks down very quickly when more than one location is associated with a person, as is usually the case.

De-anonymizing Social Networks

Our social networks paper is finally officially out! It will be appearing at this year’s IEEE S&P (Oakland).

Please read the FAQ about the paper.

Abstract:

Operators of online social networks are increasingly sharing potentially sensitive information about users and their relationships with advertisers, application developers, and data-mining researchers. Privacy is typically protected by anonymization, i.e., removing names, addresses, etc.

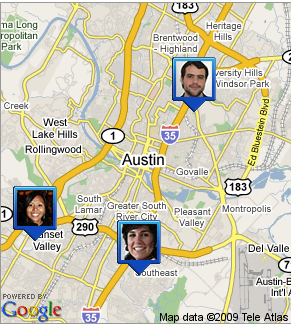

We present a framework for analyzing privacy and anonymity in social networks and develop a new re-identification algorithm targeting anonymized social-network graphs. To demonstrate its effectiveness on real-world networks, we show that a third of the users who can be verified to have accounts on both Twitter, a popular microblogging service, and Flickr, an online photo-sharing site, can be re-identified in the anonymous Twitter graph with only a 12% error rate.

Our de-anonymization algorithm is based purely on the network topology, does not require creation of a large number of dummy “sybil” nodes, is robust to noise and all existing defenses, and works even when the overlap between the target network and the adversary’s auxiliary information is small.

The HTML version was produced using my Project Luther software, which in my opinion produces much prettier output than anything else (especially math formulas). Another big benefit is the handling of citations: it automatically searches various bibliographic databases and adds abstract/bibtex/download links and even finds and adds links to author homepages in the bib entries.

I have never formally announced or released Luther; it needs more work before it can be generally usable, and my time is limited. Drop me a line if you’re interested in using it.