Posts tagged ‘web security’

Yet Another Identity Stealing Bug. Will Creeping Normalcy be the Result?

Elie Bursztein points me to a “Cross Site URL Hijacking” attack which, among other things, allows a website to identify a visitor instantly (if they are using Firefox) by finding their Google and possibly Facebook IDs. Here is a live demo and here’s a paper.

For the security geeks, the attack works by exploiting a Firefox bug that allows a page in the attacker domain to infer URLs of pages in the target domain. If a page like target.com/home redirects to target.com/?user=[username] (which is quite common), the attacker can learn the username by requesting the page target.com/home in a script tag.

Let us put this attack in context. Stealing the identity of a web visitor should be familiar to readers of this blog. I’ve recently written about doing this via history stealing, then a bug in Google spreadsheets, and now we have this. While the spreadsheets bug was fixed, the history stealing vulnerability remains in most browsers. Will new bugs be found faster than existing ones getting fixed? The answer is probably yes.

Something that is of much more concern in the long run is Facebook’s instant personalization, which is basically like identity stealing, except it is a feature rather than a bug. Currently Facebook identities are available without user consent to only 3 partners (Yelp, Pandora and docs.com) but there will be inevitable competitive pressures both for Facebook to open this up to more websites as well as for other identity providers to offer a similar service.

Legitimate methods and hacks based on bugs are not entirely distinct. Two XSS attacks on yelp.com were found in quick succession either of which could have been exploited by a third (fourth?) party for identity stealing. Instant personalization (and similar attempts at an “identity layer”) greatly increase the chance of bugs that leak your identity to every website, authorized or not.

As identity-stealing bugs as well as identity-sharing features proliferate, the result is going to be creeping normalcy — users will get slowly inured to the idea that any website they visit might have their identity. And that will be a profound change for the way the web works. Of course, savvy users will know how to turn off the various tracking mechanisms, but most people will be left in the lurch.

We are still at the early stages of this shift. It is clear that it will have both good and ill effects. For example, people are much more civil when interacting under their real-life identity. For this reason, there is quite a clamor for identity. For instance, see News Sites Rethink Anonymous Online Comments and The Forces Align Against Anonymity. But like every change, this one is going to be hard to get used to.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

Google Docs Identity Leak Bug Fixed

Yesterday I wrote about a bug in Google Docs that lets an arbitrary website find your identity. This morning I woke up to this piece of good news in my Inbox:

The fix is pushed out and live for all users as of the middle of last night. Basically we only show the username of collaborators if they are explicitly listed on the ACL of the spreadsheet. Otherwise we call them “Anonymous user”. This means that an editor of the document had to already know the username in order for that username to be visible to collaborators.

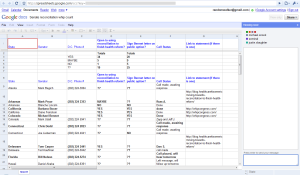

I can confirm that the demo page no longer finds my identity. And the spreadsheet in my last post now looks like this:

The Google Docs help question “Collaborating: Why are some users anonymous?” explains:

If a document is set by the owner to be viewable or editable by everyone, then Google Docs does not show the names of those who choose to view or edit the document. Google Docs displays only the identities of users who are explicitly given permission to view or edit a document (either individually or as part of a group).

You might wonder what happens if the attacker explicitly gives permission to a whole bunch of users (say using scraped email addresses) . There seems to be an extra level of protection now:

Sounds like a happy resolution.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.

How Google Docs Leaks Your Identity

Recap. In the previous two articles in this Ubercookies series, I showed how an arbitrary website that you visit can learn your identity using the “history stealing” bug in web browsers. In this article I will show how a bug in Google Docs gives any website the same capability in a far easier manner.

Update. A Google Docs team member tells me that a fix should be live later today.

Update 2. Now fixed.

About six weeks ago I discovered that a feature/bug in Google docs can be used to mass harvest e-mail addresses. I noted it in my journal, but soon afterwards I realized that it was much worse: you could actually discover the identity of web visitors using the bug. Recently, Vincent Toubiana and I implemented the attack; here is a video of the demo webpage (on my domain, in no way related to Google) just to show that we got it working.

(You might need to hit pause to read the text.)

I’m not releasing the live demo, since the vulnerability unfortunately still exists (more on this below). Let us now study the attack in more detail.

Bug or feature? Google Spreadsheets has a feature that tells you who else is editing the document. It’s actually really nifty: you can see in real time who is editing which cell, and it even seems to have live chat. The problem is that this feature is available even for publicly viewable documents. Do you see where this is going?

First of all, this is a problem even without the surreptitious use I’m going to describe. Here’s a public spreadsheet I found with 10 seconds of Googling that a few people seem to be viewing when I looked. I’m not sure the author of this document intended it to be publicly viewable or editable.

The attack works by embedding an invisible iframe (dimensions 0x0) into the malicious web page. The iframe loads a public spreadsheet that the attacker has already created. In a separate backend process, the attacker constantly checks the list of people viewing the spreadsheet and records this information. After the iframe is embedded, the Javascript on the page page waits a second or two and queries the attacker’s server to get the username of the user who most recently appeared on the list.

What if multiple people are visiting the page at roughly the same time? It’s not a problem, for two reasons: 1. Google Spreadsheets has a “push” notification system for updating the frontend which enables the attacker to get the identity of the new user virtually instantaneously. 2. To further increase accuracy, the attacker can create (say) 10 spreadsheets and embed a random subset of 5 into any given visitor’s page, making it exceptionally unlikely that there will be a collision.

The only inefficient part of the attack as Toubiana and I have implemented it is that it requires a browser (with a GUI) to be open to monitor the spreadsheet. Browser rendering engines have been modularized into scriptable components, so with a little more effort it should be possible to run this without a display. At present I have it running out of an old laptop tucked away in my dresser :-)

Defense. How can Google fix this bug? There are stop-gap measures, but as far as I can see the only real solution is to disable the collaborator list for public documents. Again a trade-off between functionality and privacy as we saw in the previous article.

Many people responded to my original post saying they were going to stay logged out of Google when they didn’t need to be logged in (since you can’t log out of just Google Docs separately). Unfortunately, that’s not a feasible solution for me, and I suspect many other people. There are at least 3 Google services that I constantly need to keep tabs on; otherwise my entire workflow would come to a screeching halt. So I just have to wait for Google to do something about this bug. Which brings me to my next point:

Great power, great responsibility. There is a huge commercial benefit to becoming an identity provider. As Michael Arrington has repeatedly noted, many Internet companies issue OpenIDs but don’t accept them from other providers, in a race to “own the identity” of as many users as possible. That is of course business as usual, but the players in this race need to wake up to the fact that being an identity provider is asking users for a great deal of trust, whether or not users realize it.

An identity-stealing bug is an (unintentional) violation of that trust because — among many other reasons — it is a precursor to stealing your actual account credentials. (That is particularly scary with Google due to their lack of anything resembling customer service for account issues.) One strategy for stealing account credentials is a phishing page mimicking the Google login page, with your username filled in. Users are much less likely to be suspicious and more likely to respond to messages that have their name on them. Research on social phishing reaches similar conclusions.

I’ve been in contact with people at Google about this bug and I’ve been told a fix is being worked on, specifically that “less presence information will be revealed.” I take it to mean the attack described here won’t work. Since they are making a good-faith effort to fix it, I’m not releasing the demo itself. It has been a long time, though. The Buzz privacy issues were fixed in 4 days, and that kind of urgency is necessary for security issues of this magnitude.

A kind of request forgery. The attack here can be seen as a simpleminded cross-site request forgery. In general, any type of request forgery bug that causes your browser to initiate a publicly recorded interaction on your behalf will immediately leak you identity. For example, if (hypothetically) visiting a URL causes your browser to leave a comment on a specific Youtube video, then the attacker can create a Youtube video and constantly monitor it for comments, mirroring the attack technique used here.

Another technical lesson from this bug is that access control in social networking can be tricky. I’ve written before that privacy in social networking is about a lot more than access control, and that theory doesn’t help determine user reactions to your product. But this bug was an access control issue, and theory would have helped. Websites designing social features would do well to have someone with an academic background thinking about security issues.

Up next. In this post as well as the previous ones, I’ve briefly hinted at what exactly can go wrong if websites can learn your identity. The next post in this series will examine that issue in more detail. Stay tuned — it turns out there’s quite a bit more to say about that, and you might be surprised.

Thanks to Vincent Toubiana for reviewing a draft.

To stay on top of future posts, subscribe to the RSS feed or follow me on Twitter.